The Modular World

An article on the novel functionalities of Celestia and the future of the modular world

Preface

Back in 2019 when we (Maven11) invested in LazyLedger (now called Celestia) the word modular wasn’t popularized yet in relation to blockchain designs. Over the past year, it has been popularised by people like polynya, a ton of L2 teams, countless others and of course also the Celestia Labs team - who coined the term in their first LazyLedger blog post in relation to separating consensus and execution.

Because of this, we are excited to put forward an updated overview of our investment in Celestia. It will offer insights into the modular world we envision, the various layers and protocols in such an ecosystem and why we are so excited about the potential functionalities it offers.

Architecture

Currently, most live public blockchains are monolithic entities. By monolithic, we mean a chain that handles data availability, settlement and execution all on its own. There are some variations of monolithic chains with modular components, notably rollups on Ethereum and subnets on Avalanche. However, those aren’t modular blockchains in the most genuine sense of the word.

Let’s define what we mean when we say ‘modular’ so that there are no misinterpretations. When we say modular, we mean that layers which are usually combined are decoupled. So what does this mean? It means that at least one of the three components of the chain (execution, consensus or data availability) is handed off to another layer. This means that you can put the term modular on rollups, since they only handle execution, while Ethereum handles everything else as a monolithic entity.

In the case of Celestia, we can put the term modular on it since it only handles data availability and consensus, while it delegates settlement and execution to other layers. These layers are also modular, since they only handle part of the components themselves. In the case of Ethereum, we can’t call it a modular blockchain, since the only outsourcing of components happens through its current rollups, with regard to execution. Ethereum is still able to handle execution on its own, while also allowing rollups to batch transactions off-chain. This means that in its current implementation, Ethereum remains a monolithic chain. That said, Ethereum remains the ideal settlement layer, being the most decentralised and secure smart contract chain.

Now, you might ask: what about Polkadot or Avalanche? Avalanche isn’t modular; rather, it consists of split-up networks that are each capable of handling all the components of a blockchain. This means that they don’t scale modularly, but rather scale by utilising other monolithic chains horizontally. Polkadot’s parachains handle execution, similarly to rollups, while sending blocks to the relay chain for consensus and data availability. However, the relay chain still ensures the validity of transactions.

As monolithic chains grow over time, they suffer immense amounts of congestion and inefficiency. Being confined to a single chain for all purposes is simply not feasible if we want to onboard more people, since it causes extremely high fees and delays for the end-user. This is exactly why we’ve seen more and more chains deciding to split themselves up. We’ve all heard of the fabled Merge, which will move Ethereum to Proof-of-Stake. However, Ethereum also plans on moving to sharding eventually. Sharding is when you split the blockchain horizontally into several pieces. These shards will purely handle data availability.

This, together with rollups, is how the Ethereum community plans on fixing its scalability issues. Are there other ways too? There certainly are: we’ve also seen Avalanche move towards a slightly modular future with Subnets. However, as explained earlier, we wouldn’t categorise it as complete modularity.

To better understand how each of the various ‘modular’ architectures functions, let’s try to draw them out so that we can get a better overview of the differences.

Architecture Comparisons

First, let’s take a look at the biggest smart contract blockchain in existence, Ethereum. Let’s look at how their current architecture looks, and how it's going to look in the future when sharding is enabled.

Currently, Ethereum handles all the components of a blockchain. However, it also offloads some of the execution to L2 rollups, which batch transactions to be settled on Ethereum. In the future, with sharding, the architecture will look a bit like this:

This would turn Ethereum into a unified settlement layer while shards would handle data availability. This means that shards would just be DA environments for rollups to submit data to. On shards, validators only need to store data for the shard they’re validating, not the entire network. Sharding will eventually let you run Ethereum on light nodes, similar to Celestia.

For Avalanche, their main scaling proposition is through singular blockchains that can easily be created — their subnets. The Avalanche architecture looks a bit like this:

A subnet is a set of new validators that validate a blockchain. Each blockchain is validated by exactly one subnet. All Avalanche subnets handle consensus, data availability and execution by themselves. Each subnet will also have its own gas token, as specified by the validators. An example of a subnet that is currently live is the DefiKingdoms subnet, which uses JEWEL as its gas token.

Before we move on to look at Celestia’s architecture, let’s first look at Cosmos, from which Celestia borrows heavily and with which there will be heavy interaction through IBC, since Celestia is also built with the Cosmos SDK and a version of Tendermint, Optimint. The Cosmos architecture is quite different from other current architectures, since a dApp is the application of the blockchain itself, instead of running on a VM that the chain provides. This means that a sovereign Cosmos SDK chain just has to define the transaction types and state transitions that it needs, while relying on Tendermint as its consensus engine. Cosmos chains split off the application part of the blockchain and use ABCI to connect it to networking (p2p) and consensus. ABCI is the interface that connects the application part of the blockchain to the Tendermint state replication engine, which provides the consensus and networking mechanisms. Its architecture is often depicted as such:

Now let’s take a look at how Celestia’s architecture will look, once the ecosystem starts to get built.

This is how an early ecosystem could look on Celestia. Celestia will operate as the shared consensus and data availability layer between all the various types of rollups operating within the modular stack. The settlement layers would exist to facilitate bridging and liquidity between the various rollups on top of them. You would most likely also see sovereign rollups functioning independently, without settlement layers.

Now that we have established the different degrees of modularity, how they function and how they look, let’s take a look at some of the unique capabilities and functionalities that pure modular blockchains such as Celestia enable.

Shared Security

One of the beautiful things about monolithic blockchains is the fact that all users, applications and rollups that make use of it, receive security from the underlying layer. So how does this work in the setting of a modular stack?

It’s quite simple really: Celestia provides the rudimentary functionality that chains require to establish shared security, namely data availability. This is because every single layer that utilizes Celestia is required to dump all its transaction data to the data availability layer, to prove that the data is indeed available. This means that chains can connect to, watch and interoperate with each other effortlessly. Always having the security of the underlying DA layer also makes it extremely easy to hard and soft fork, which we will get into later.

Likewise, Celestia allows various types of experimental execution layers to be run simultaneously, even without relying on a settlement layer, while still having the advantage of a shared data availability layer. This means that the rate of iteration will become much faster, since it would likely scale linearly with the number of users. Therefore our thesis is that, in time, this leads to compounding improvements on execution layers, as we’re not restrained by monolithic entities with centralised execution layers, since execution and data availability are decoupled. The permissionless nature of modularity allows for experimentation and gives developers the flexibility to choose.

Data Availability Sampling and Block Verification

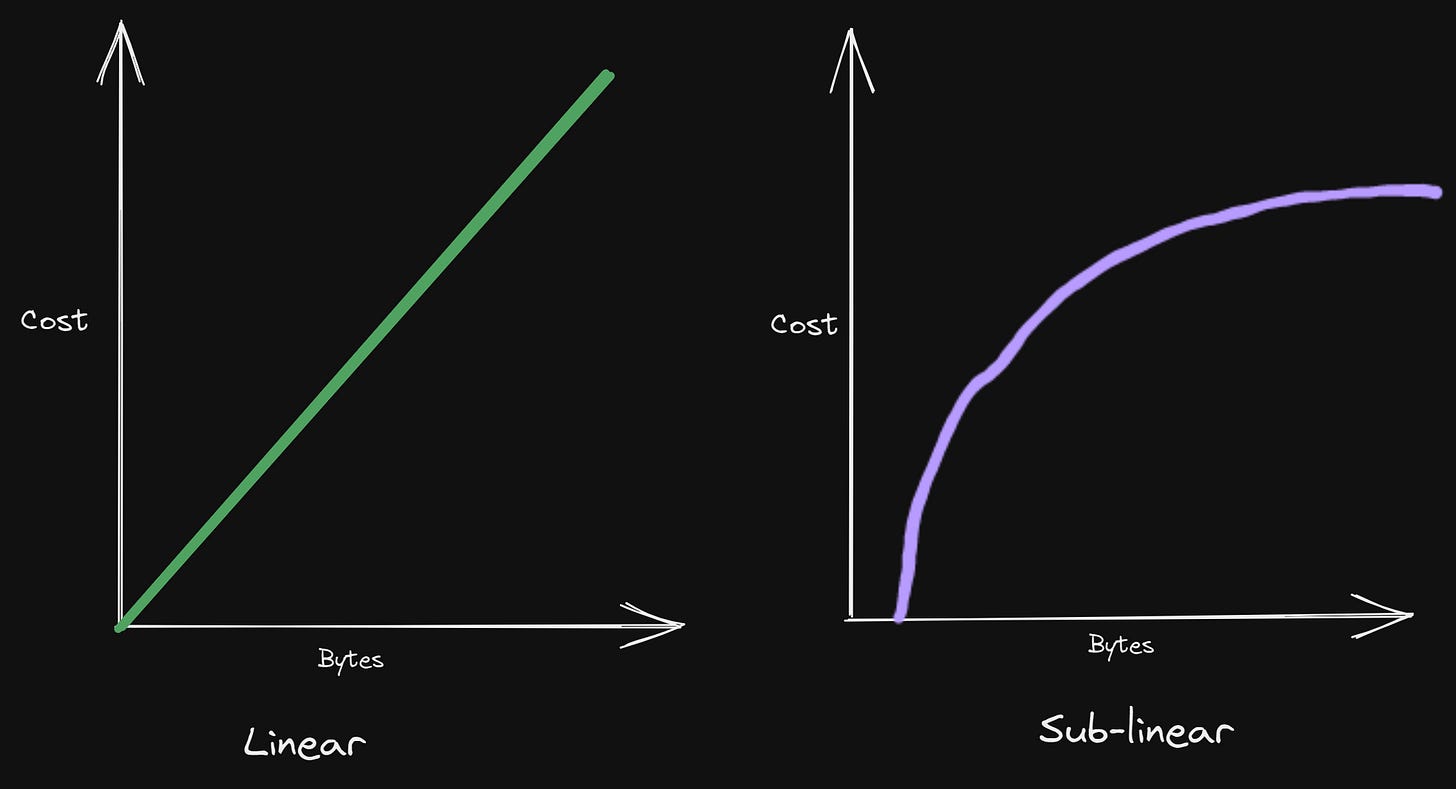

Celestia’s block verification works quite differently from other current blockchains, since blocks can be verified in sub-linear time. This means that the cost of verification grows sub-linearly with throughput, rather than linearly. So how does this look on paper? Let’s take a look.

This is possible since Celestia’s light clients do not verify transactions, they only check that each block has consensus and that the block data is available to the network.

Celestia removes the need to check transaction validity since it only checks if blocks have consensus and data availability, as seen above.

Instead of downloading the entire block, Celestia light nodes just download small random samples of data from the block. If all the samples are available, then this serves as proof that the entire block is available. Basically, by sampling random data from a block, you can probabilistically verify that the block is indeed complete.

This means that Celestia reduces the problem of block verification to data availability verification, which we know how to do efficiently with sub-linear cost using data availability sampling.

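DA proofs require that blocks are erasure coded before they are sent. This means that the original block data is extended with redundant data, so that the whole block can be reconstructed even if part of it is missing. Celestia’s two-dimensional erasure coding doubles the data in each dimension and so expands the block size by 4x: 25% of the extended block is the original data, while 75% is redundant data. Consequently, a misbehaving sequencer or similar cannot hide just a few transactions. It would have to withhold a large share of the extended block (roughly a quarter of it under the two-dimensional scheme) to make any of the data unrecoverable, and random sampling detects withholding on that scale quickly.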
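To make the erasure-coding idea concrete, here is a minimal sketch in Python. It is a simplification under stated assumptions: it works in one dimension only (k shares are extended to 2k), uses a small prime field chosen for readability, and the function names `extend` and `recover` are hypothetical. Celestia applies the same idea in two dimensions, which is where the 4x expansion comes from.

```python
# Toy 1D Reed-Solomon-style erasure code (illustrative assumption, not Celestia's code).
P = 65537  # small prime modulus, hypothetical choice for the sketch


def _interpolate_at(points, x):
    """Evaluate at x the unique polynomial through `points` (Lagrange form, mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def extend(shares):
    """Turn k data shares into 2k shares: the originals plus k parity shares."""
    k = len(shares)
    points = list(enumerate(shares))
    return list(shares) + [_interpolate_at(points, x) for x in range(k, 2 * k)]


def recover(known, k):
    """Rebuild the k original shares from any k known (index, value) pairs."""
    if len(known) < k:
        raise ValueError("not enough shares to recover the data")
    return [_interpolate_at(known[:k], x) for x in range(k)]


data = [10, 20, 30, 40]
extended = extend(data)

# Drop the whole original half: the parity half alone restores the data.
parity_only = [(i, extended[i]) for i in range(4, 8)]
restored = recover(parity_only, 4)
```

In this one-dimensional toy, any half of the extended shares is enough to rebuild the data, so hiding even one share means withholding more than half of them.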

Hence, light clients can check with very high probability that all the data for a block has been published by downloading just a very small piece of the block (DA sampling). Each round of sampling decreases the chance that unavailable data goes undetected, until the node is confident to a very high probability that all the data is available. This is extremely efficient: instead of every single node downloading every single block, many light nodes each download small pieces of each block, with security guarantees close to those of a full node. It also means that throughput can be increased as the number of sampling nodes increases, as long as there are enough nodes sampling for data availability. You may be familiar with this type of network from daily life, even if you haven’t used blockchains, through protocols such as BitTorrent, where many peers each hold and share small pieces of a file.
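The arithmetic behind sampling is short enough to sketch. Assume, as a simplification, that a block producer has to withhold at least a fraction f of the extended block to make it unrecoverable, and that a light node draws s independent uniform samples. The chance that every sample misses the withheld part is then at most (1 - f)^s. The function names and the example value f = 0.25 below are illustrative assumptions, not protocol parameters.

```python
import math


def detection_probability(withheld_fraction: float, samples: int) -> float:
    """Probability that at least one of `samples` random draws hits withheld data."""
    return 1.0 - (1.0 - withheld_fraction) ** samples


def samples_needed(withheld_fraction: float, target: float) -> int:
    """Smallest number of samples whose detection probability reaches `target`."""
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - withheld_fraction))


# Illustrative value: a quarter of the extended block is withheld.
one_sample = detection_probability(0.25, 1)
for_99_percent = samples_needed(0.25, 0.99)
```

The number of samples depends only on the target confidence and on f, not on the size of the block, which is why the cost for a single light node stays small as blocks grow.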

Scalability

When we talk about scalability, the first thing that comes to mind for most people is transactions per second (TPS). However, this should not be the actual discussion around scalability. For a specialized DA layer, the measure that matters, and the major hurdle to overcome, is data throughput in MB/s rather than TPS. MB/s is an objective measure of the capacity of a chain, whereas TPS is not, since transactions vary in size. Here Celestia stands quite well, since it unbloats the DA layer and utilizes data availability sampling to increase the MB/s the system can handle.
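A quick calculation shows why the two measures diverge. The sketch below is only arithmetic; the bandwidth figure and the transaction sizes are made-up numbers for illustration.

```python
def transactions_per_second(bytes_per_second: float, avg_tx_bytes: float) -> float:
    """TPS implied by a given data throughput and average transaction size."""
    if avg_tx_bytes <= 0:
        raise ValueError("average transaction size must be positive")
    return bytes_per_second / avg_tx_bytes


ONE_MB_PER_SECOND = 1_000_000  # hypothetical DA-layer throughput in bytes/s

small_txs = transactions_per_second(ONE_MB_PER_SECOND, 100)  # simple transfers
large_txs = transactions_per_second(ONE_MB_PER_SECOND, 500)  # heavier contract calls
```

The same 1 MB/s of data capacity corresponds to very different TPS figures depending on what the transactions look like, so quoting bytes per second says more about the layer itself.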

By that, we mean that the real limitation of how many transactions a blockchain is capable of processing is based on input and output. So, by decoupling data availability from the input and output process, which rollups will handle, Celestia will be able to produce much higher bytes per second than monolithic entities.

This all stems from the data availability problem: the amount of data in a proposed block that a sequencer or similar can verify is available is limited by the data throughput of the underlying DA layer. With monolithic blockchains that rely on full nodes, the usual fix would be to increase the hardware requirements of full nodes. However, if you do that, there will be fewer full nodes, and the decentralization of the network would falter with it.

Therefore, by utilising the technology we touched upon in the block verification part, we can increase throughput without increasing node requirements, because DA sampling gives light nodes security guarantees close to those of full nodes. Adding nodes then adds throughput: the block size the network can safely support grows with the number of light nodes sampling it, while the verification cost for each node grows only sub-linearly. In a monolithic design, a block size increase raises the cost of verifying the chain in equal measure, but on Celestia, this is not the case.

Ethereum is also looking to fix some of its scalability problems, with EIP-4844, which would enable a new transaction type: blob transactions. These will carry a large amount of data that cannot be accessed by EVM execution, but that Ethereum still makes available. This is done because, presently, rollups on Ethereum rely on a minuscule amount of available call data to post their transactions. Sharding will also help. It is still quite far out, but when released it should dedicate around 16MB of data space per block to rollups. However, it remains to be seen how competitive the battle for the blob transaction space will become. And once you solve one scalability bottleneck, another might pop up. So by moving towards modular layers, we can allow the various parts of the stack to specialise for the specific resource they utilise the most.

Forks

In most cases when a hard fork takes place on a monolithic chain, the fork loses the security of the underlying layer, since the execution environments don’t share the same security. This means that hard forks are usually not feasible or wanted, since the new fork won’t have the security of the original data availability and consensus layer. It’s the same idea as when we say that you could submit a change to the code of a blockchain, but you’d have to convince everyone to agree with your change. Take Bitcoin for example: Bitcoin’s code is quite easy to change, but getting everyone to agree with a change is the hard part.

If you want to hard fork a monolithic blockchain, you also need to fork the consensus layer, which means you lose the security of the original chain. The amount of security lost depends on the number of miners or validators that don’t validate the new canonical chain. If all the validators upgrade to the same fork, then no security is lost.

On modular blockchains this is different: if you fork a settlement or execution layer, you still have the security of the underlying consensus layer. In this case, forks are viable since the execution environments all share the same security. This isn’t possible for rollups on a settlement layer, however, since the settlement layer acts as the source of trust for the added blocks.

The case for making hard forks unlimited and easy for execution environments is that wild ideas can be tested and tried. It also becomes feasible to build on top of the work of others without losing the base layer security. If you think about the ideas of a free market (and some might not agree on this), it can often create competing implementations that give better outcomes.

Modular Stack

The modular stack is a concept unique to Celestia. It refers to the decoupling of all the various layers of a usual blockchain into separate layers. So when we say stack we refer to all the layers functioning together.

So what layers exist? There is of course the consensus and data availability layer, Celestia, but there are other layers too. One is the settlement layer: a chain with which rollups have a trust-minimized bridge, for unified liquidity and bridging between rollups. These settlement layers could be of various types. For example, you could have restricted settlement layers that only allow simple bridging and resolution contracts for the execution rollups on top of them. However, you could also have settlement layers that host their own applications as well as rollups. Other types of rollups exist too, which don’t rely on a settlement layer but function solely with Celestia. These are called sovereign rollups, and we’ll talk about those in the next chapter.

Now, it is also possible to have a stack where the execution layers don’t directly post block data to the settlement layer, but rather directly to Celestia instead. In that case, the execution layers would just post their block headers to the settlement layer, which would then check that all the data for a certain block was included in the DA layer. This is done through a contract on the settlement layer that receives a Merkle tree of transaction data from Celestia. This is what we call attestation of data.
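The attestation flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the class `SettlementContract`, its methods and the header fields are hypothetical names, and a real contract would verify signatures or proofs on the attested roots rather than trusting the caller.

```python
class SettlementContract:
    """Toy settlement-layer contract (hypothetical, for illustration only)."""

    def __init__(self):
        self.attested_data_roots = set()  # data roots relayed from the DA layer
        self.accepted_headers = []        # rollup headers accepted so far

    def attest(self, data_root: bytes) -> None:
        # Assumption: the caller is an authenticated relay of the DA layer.
        self.attested_data_roots.add(data_root)

    def submit_header(self, header: dict) -> bool:
        # Accept a rollup header only if its data was attested as available.
        if header["data_root"] not in self.attested_data_roots:
            return False
        self.accepted_headers.append(header)
        return True


contract = SettlementContract()
contract.attest(b"root-1")
accepted = contract.submit_header({"height": 1, "data_root": b"root-1"})
rejected = contract.submit_header({"height": 2, "data_root": b"root-2"})
```

The point of the design is that the settlement layer never needs the block data itself, only a commitment that the DA layer has vouched for.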

Another huge advantage to the modular stack is its sovereignty. In the modular stack, governance can be compartmented to specific applications and layers and does not overlap with others. If there is an issue, governance can fix it without interfering with other applications in the cluster.

Sovereign Rollups

A sovereign rollup is a rollup that operates independently from any settlement layer. It doesn’t rely on a settlement layer with smart contract capabilities to which it would submit state updates and proofs. Instead, it functions purely with a namespace on Celestia. Usually, a rollup functions within an ecosystem, like Ethereum, where it has a rollup smart contract (resolution contract). This contract also provides trust-minimized bridging between the settlement layer and the rollup. However, on Ethereum, all the rollups compete for precious call data. This is why EIP-4844 is being worked on, which would provide a new transaction type: blob transactions. This would also increase the block size. However, even with blob transactions, there will most likely still be heavy competition for settlement.

Most monolithic blockchains are capable of handling smart contracts. On Ethereum, for example, an on-chain smart contract tracks the rollup’s state root, which is the Merkle root of the current state of the rollup. This contract checks that each submitted rollup batch builds on the prior state root. If that is the case, the state root is updated to the new one. However, on Celestia, this is not possible, as Celestia won’t handle smart contracts.
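As a rough sketch of what such a rollup contract does, consider the Python below. It is a deliberate simplification: the class and method names are hypothetical, and the fraud or validity proof that a real contract would also check is left out.

```python
class RollupStateContract:
    """Toy on-chain rollup contract that only tracks the state root (hypothetical)."""

    def __init__(self, genesis_root: bytes):
        self.state_root = genesis_root
        self.history = [genesis_root]

    def submit_batch(self, prev_root: bytes, new_root: bytes) -> bool:
        # A batch is only accepted if it builds on the current state root.
        # Proof verification is omitted in this sketch.
        if prev_root != self.state_root:
            return False
        self.state_root = new_root
        self.history.append(new_root)
        return True


rollup = RollupStateContract(b"root-0")
first = rollup.submit_batch(b"root-0", b"root-1")   # builds on the current root
stale = rollup.submit_batch(b"root-0", b"root-x")   # builds on an outdated root
```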

Rather, on Celestia, sovereign rollups post their data directly to Celestia. Here the data won’t be executed or settled, but rather just ordered and made available, with the block header committing to it. A block header is what identifies a particular block on a blockchain, and each block is unique. Within this block header, a Merkle root exists which is made up of all the hashed transactions.
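For readers who haven’t seen one built, here is a minimal sketch of a Merkle root over a list of transactions. The details are assumptions made for the example (SHA-256, one-byte prefixes to separate leaves from inner nodes, and duplicating the last node on odd levels) and are not meant to match Celestia’s exact tree layout.

```python
import hashlib


def _sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(transactions: list) -> bytes:
    """Merkle root of a list of byte strings (illustrative construction)."""
    if not transactions:
        return _sha256(b"")
    # Leaves and inner nodes get different prefixes so they cannot be confused.
    level = [_sha256(b"\x00" + tx) for tx in transactions]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # assumption: duplicate the last node if odd
        level = [
            _sha256(b"\x01" + level[i] + level[i + 1])
            for i in range(0, len(level), 2)
        ]
    return level[0]


txs = [b"alice->bob:5", b"bob->carol:2", b"carol->dave:1"]
root = merkle_root(txs)
tampered_root = merkle_root([b"alice->bob:50", b"bob->carol:2", b"carol->dave:1"])
```

Changing any single transaction changes the root, which is what lets a short header commit to an arbitrarily large set of transactions.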

So how does it work? The rollup has its own peer-to-peer network from which both full and light nodes download the blocks. However, they also verify that all the rollup block data was sent and ordered on Celestia (hence the name data availability) through a Merkle tree — which we’ve seen examples of earlier. Thus, the canonical history of the chain is set by local nodes that verify that the transactions of the rollup are correct. The implication of this is that a sovereign rollup is required to post every transaction on the data availability layer, so that any node can follow the correct state. As a result, full nodes that function as watchers of the rollup’s namespace (think of a namespace as a rollup’s smart contract) can also provide security to light nodes. This is because, on Celestia, light nodes are almost equal to full nodes.

To elaborate on namespaces: On Celestia, Merkle trees are sorted by namespaces which enable any rollup on Celestia to only download data relevant to their chain and ignore the data for other rollups. Namespace Merkle trees (NMTs) enable rollup nodes to retrieve all rollup data they query without parsing the entire Celestia or rollup chain. Furthermore, they also allow validator nodes to prove that all data have been included in Celestia correctly.
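The pruning that namespaces make possible can be illustrated with a simplified tree. In the sketch below, every node records the smallest and largest namespace beneath it, so a query can skip whole subtrees that cannot contain the namespace it wants. Names, hashing details and integer namespaces are assumptions for the example. This is not Celestia’s NMT specification, and it omits the inclusion and completeness proofs.

```python
import hashlib
from dataclasses import dataclass
from typing import List, Optional, Tuple


def _h(*parts: bytes) -> bytes:
    return hashlib.sha256(b"".join(parts)).digest()


def _ns(n: int) -> bytes:
    return n.to_bytes(8, "big")


@dataclass(frozen=True)
class Node:
    min_ns: int
    max_ns: int
    digest: bytes
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    data: Optional[bytes] = None


def build(leaves: List[Tuple[int, bytes]]) -> Node:
    """Build a tree over (namespace, data) leaves, sorted by namespace."""
    if not leaves:
        raise ValueError("need at least one leaf")
    ordered = sorted(leaves, key=lambda leaf: leaf[0])
    level = [Node(ns, ns, _h(b"\x00", _ns(ns), d), data=d) for ns, d in ordered]
    while len(level) > 1:
        nxt = []
        for i in range(0, len(level), 2):
            if i + 1 == len(level):
                nxt.append(level[i])  # odd node is carried up unchanged
                continue
            l, r = level[i], level[i + 1]
            digest = _h(b"\x01", _ns(l.min_ns), _ns(r.max_ns), l.digest, r.digest)
            nxt.append(Node(l.min_ns, r.max_ns, digest, l, r))
        level = nxt
    return level[0]


def query(node: Node, ns: int) -> List[bytes]:
    """Return all data for namespace `ns`, skipping subtrees outside its range."""
    if ns < node.min_ns or ns > node.max_ns:
        return []
    if node.left is None:  # leaf node
        return [node.data]
    return query(node.left, ns) + query(node.right, ns)


root = build([(2, b"b1"), (1, b"a1"), (3, b"c1"), (2, b"b2"), (1, b"a2")])
rollup_two = query(root, 2)
```

A rollup that owns namespace 2 walks only the branches whose range covers 2 and never touches the data of namespaces 1 and 3.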

So why are sovereign rollups a unique prospect? Prior rollup implementations, such as those on Ethereum, are limited because Ethereum nodes are required to store execution-related state as a result of Ethereum being monolithic. In a modular design, however, we can have specialized nodes for various purposes, which should make it much cheaper to run the network. Thus the cost of running the network scales with the cost of light nodes rather than full nodes since, as we explained earlier, light nodes have almost the same security as full nodes.

Let’s take a look at how some of the rollup implementations could function as sovereign rollups. First, it is important to clarify how the various rollup proof systems function on Celestia.

Optimistic rollups depend on fraud proofs. On a sovereign rollup, the way fraud proofs are distributed changes: instead of being verified by a settlement layer contract, they are gossiped in the rollup’s peer-to-peer network between full nodes and light nodes, and verified by local nodes. We will look at an implementation of this further down. With sovereign optimistic rollups on Celestia, we can possibly also shorten the challenge period, which would fix one of the major hurdles with current optimistic rollups (ORs), since they are currently very conservative with their dispute windows on Ethereum. The reason is that, currently, all the fraud interactions happen on-chain in the highly competitive block space of Ethereum, which causes protracted finalization. On a sovereign rollup, any light node has full node security if it’s connected to an honest full node, and therefore fraud interactions should be much quicker.

ZK rollups depend on validity proofs (zk-SNARKs, for example). ZK rollups acting as sovereign rollups would function quite similarly to how current implementations work. However, instead of sending a ZK proof to a smart contract, the rollup would distribute it in its peer-to-peer network for nodes to verify. Sovereign ZK rollups, much like ZK rollups on unified settlement layers, allow various execution runtimes to function on top of each other as sovereign chains, since their transactions aren’t interpreted by Celestia. Here the runtimes on top of the ZK rollup could function in a variety of ways. There could be a privacy-preserving runtime, an application-specific runtime and much more. This is what has been coined fractal scaling.

Now that we have established the concept of sovereign rollups, and have an idea of how they will be implemented on Celestia, let’s take a look at how two different rollups’ architectures could look:

So why do they need Celestia? Optimistic rollups require DA so that fraud proofs can be detected and ZK rollups require DA so that the state of the rollup chain is known.

It’s also important to always be contrarian when you look at something, because if not, you can get blinded by your own conviction. In this section, we will try to explain some of the negatives that come with sovereign rollups.

Sovereign rollups are going to be heavily reliant on new ecosystems being built on top of them, similarly to the often touted L1 playbook. This means dApps etc. However, if the rollup has a VM implementation that already has a lot of development activity and dApps that are open source, then this becomes much easier to do. Despite this, liquidity is still the major issue to overcome. Liquidity will often be compartmentalized to the sovereign rollup and its runtimes. Thus the rollups would be heavily reliant on secure, trust-minimized bridging to other layers, such as other sovereign rollups or settlement layers. We’ll look at some possible implementations of this later on. Furthermore, the implementation of sovereign rollups is heavily dependent on infrastructure being built that can support its various functionalities.

Optimistic Rollup Implementation

In this section, we will try to explain how a possible sovereign optimistic rollup implementation could work. This part borrows heavily from the Light Clients for Lazy Blockchains research paper written by Ertem Nusret Tas, Dionysis Zindros, Lei Yang and David Tse.

One way to provide fraud proofs for an OR is to have full and light nodes on the rollup play a bisection game. A bisection game is played between two nodes, a challenger and a responder, with a third node acting as a verifier. The challenger sends a query to the verifier, which forwards it to the responder. The responder’s reply travels back through the same channel to the verifier and the challenger. Each round narrows the disputed range, and the verifier consistently checks that there are no mismatches between the two parties and that neither is acting maliciously. The verifier ensures that the responder isn’t sending the wrong Merkle trees, and the challenger ensures that the responder is following the correct root. As long as the responder can defend itself, the game carries on. As a result of this bisection game, an honest challenger will always win against a dishonest responder, and an honest responder will always win against a dishonest challenger.
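The core of a bisection game is a binary search over two competing execution traces. The sketch below is a simplified stand-in for the protocol in the paper, under loudly stated assumptions: both parties hold a full list of intermediate states, the state transition `step` is a toy function (adding a number), and the names `bisect_dispute` and `resolve` are hypothetical. The real protocol exchanges Merkle commitments instead of raw states.

```python
def bisect_dispute(responder_trace, challenger_trace):
    """Narrow a dispute to one step: returns (agreed_index, disputed_index, rounds)."""
    lo, hi = 0, len(responder_trace) - 1
    if responder_trace[lo] != challenger_trace[lo]:
        raise ValueError("parties must agree on the starting state")
    if responder_trace[hi] == challenger_trace[hi]:
        raise ValueError("nothing to dispute: final states match")
    rounds = 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if responder_trace[mid] == challenger_trace[mid]:
            lo = mid   # still in agreement: the fault lies later
        else:
            hi = mid   # already diverged: the fault lies earlier
        rounds += 1
    return lo, hi, rounds


def step(state, tx):
    """Hypothetical state transition used only for this example."""
    return state + tx


def resolve(responder_trace, challenger_trace, txs):
    """Verifier re-executes the single disputed step and names the winner."""
    lo, hi, _ = bisect_dispute(responder_trace, challenger_trace)
    correct = step(responder_trace[lo], txs[lo])
    if responder_trace[hi] == correct:
        return "responder"
    if challenger_trace[hi] == correct:
        return "challenger"
    return "neither"


txs = [1, 2, 3, 4, 5, 6, 7, 8]
honest = [0, 1, 3, 6, 10, 15, 21, 28, 36]
faulty = [0, 1, 3, 6, 10, 16, 22, 29, 37]  # wrong from the fifth step onwards

located = bisect_dispute(faulty, honest)
winner_when_responder_cheats = resolve(faulty, honest, txs)
winner_when_challenger_cheats = resolve(honest, faulty, txs)
```

Eight steps of execution are narrowed down to a single step in three rounds, so the verifier only ever has to re-execute one transition, whoever is lying.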

Data Availability on Celestia, Settlement on X

It’s also possible to neither use a settlement layer connected purely to Celestia for bridging, nor function as a sovereign rollup. Since Celestia just provides the underlying DA layer with shared security, a rollup could use any settlement layer, as long as Celestia can send a Merkle root of available transaction data to a contract on that settlement layer. So why would a rollup do this? Many existing settlement layers, such as Ethereum, already have a thriving ecosystem, so there is already liquidity and users to take advantage of. This is especially advantageous for rollups that don’t want to rely on building an entire ecosystem from scratch. This is not limited to Ethereum as a settlement layer. A ZK rollup could also settle on Mina, for example: you would send your transaction data to Celestia, while sending state updates and zk-proofs to Mina. By doing so, you get a settlement layer that’s validity proven by default.

If you’re a rollup operator and want to take advantage of the liquidity and users on other blockchains, then this type of solution is highly attractive. It’s also possible to become something of a plug-and-play rollup operator, with different sequencers plugged into different settlement layers. For example, one ZK rollup sequencer could be connected to Mina and provide state updates and validity proofs, while another sequencer on a different ZK rollup could be connected to Ethereum for settlement through the Quantum Bridge (the fabled Celestiums). What they would all have in common is that they would send all their transaction data to Celestia, and Celestia would then operate a smart contract or similar on the settlement layer, to which it would send a Merkle tree of available data (attestation).

Let’s take a look at how this could look architecturally with a ZK rollup as an example:

Value Accrual

The source of revenue for Celestia itself is going to be transaction fees from the transaction batches that rollups submit. Celestia’s transaction fees are going to be quite similar to how Ethereum currently operates with EIP-1559, that is, a burn-style mechanism. There will be a dynamic base fee that is burned, as well as a “tip” paid to validators to push a certain transaction through quicker. Validators would also earn from the issuance of new tokens with each block. However, this is from the perspective of Celestia’s validators, so what would it look like from a user’s perspective? Let’s first establish how the various fees would look depending on what layers you’re using, and then we can conclude how the user experience would look.
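For reference, this is how the base fee moves under Ethereum’s EIP-1559 rule, sketched in Python. Whether Celestia would adopt the same constants is an assumption on our part. The article only says the mechanism would be similar, so treat the divisor of 8 and the helper `split_fee` as illustrative.

```python
BASE_FEE_MAX_CHANGE_DENOMINATOR = 8  # Ethereum's value: at most 12.5% change per block


def next_base_fee(base_fee: int, gas_used: int, gas_target: int) -> int:
    """Base fee of the next block, following the EIP-1559 update rule."""
    if gas_used == gas_target:
        return base_fee
    if gas_used > gas_target:
        delta = (base_fee * (gas_used - gas_target) // gas_target
                 // BASE_FEE_MAX_CHANGE_DENOMINATOR)
        return base_fee + max(delta, 1)  # fee always rises by at least 1 unit
    delta = (base_fee * (gas_target - gas_used) // gas_target
             // BASE_FEE_MAX_CHANGE_DENOMINATOR)
    return base_fee - delta


def split_fee(base_fee: int, tip: int, gas_used: int) -> dict:
    """Hypothetical helper: the base fee part is burned, the tip goes to the validator."""
    return {"burned": base_fee * gas_used, "to_validator": tip * gas_used}


after_full_block = next_base_fee(100, gas_used=30, gas_target=15)
after_empty_block = next_base_fee(100, gas_used=0, gas_target=15)
after_on_target = next_base_fee(100, gas_used=15, gas_target=15)
payment = split_fee(base_fee=100, tip=2, gas_used=21_000)
```

Blocks fuller than the target push the base fee up and emptier blocks pull it down, so the burned part of the fee tracks demand for block space while the tip remains the validator’s incentive.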

The fee structure for execution rollups will primarily be the operating cost + the DA publishing cost. There will likely be an overhead cost too, so that the rollup makes a profit. That means for the user you would likely pay one fee that encompasses those 3 + a congestion fee — which will likely be much lower as a result of less congestion.

The source of revenue for the settlement layer is the settlement contract fee that the rollup would pay to be able to settle on it. Furthermore, there will also be enshrined trust-minimized bridging between rollups through the settlement layer, so it would also be able to charge bridging fees.

Then what about sovereign rollups that function without a settlement layer? On sovereign rollups, users would have to pay a gas fee to access computation on the rollup. The rollup would set a fee, most likely decided by governance, and there would likely also be a congestion fee to pay. These fees on the rollup would cover the cost of publishing data to Celestia, plus a small overhead for the rollup’s validators. You would forego the settlement fee, which will likely result in extremely low fees for the end-user.

So to conclude, we can create a fee structure of how the various fees will look for the end-user. The end-user of a modular stack would likely get 3 constant fees, with 4 being possible. These are the DA publication fee, the settlement contract fee and the rollup execution fee. The fourth possible fee would be a congestion fee during heavy loads. The user only pays a single fee on the execution layer, this fee would encompass the costs of all the layers in the modular stack. So let’s take a look at what the fee structure would look like from the users' perspective:
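The fee components above add up to the single fee the user sees, which a few lines of Python can make explicit. The amounts are invented, unit-less numbers chosen only for the example, and `UserFee` is a hypothetical name.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserFee:
    """Single fee paid on the execution layer, broken into its components."""
    da_publication: int
    execution: int
    settlement: int = 0   # zero for a sovereign rollup
    congestion: int = 0   # only charged under heavy load

    def total(self) -> int:
        return self.da_publication + self.execution + self.settlement + self.congestion


settled_rollup = UserFee(da_publication=20, execution=50, settlement=30)
sovereign_rollup = UserFee(da_publication=20, execution=50)
busy_period = UserFee(da_publication=20, execution=50, settlement=30, congestion=15)
```

With these made-up numbers, the sovereign rollup user pays 70 instead of 100, simply because the settlement component drops out.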

So what does this mean for the future?

Well, if Celestia proves to be a cheaper and faster data-availability layer for rollups to use, while still providing decentralized and shared security, then you could see rollups increasingly use it for data availability. If we consider how much rollups are currently paying to use Ethereum for security, then the rollups on Celestia would pay much less. However, fixes for the congestion issues on Ethereum are coming, primarily blob transactions, staking and sharding.

Then what about MEV? Rollups currently utilise sequencers to collect and order users' transactions in a mempool before they get executed and posted to the DA layer. This is an issue in regards to MEV, since the sequencer is primarily centralized in current implementations, and is therefore not censorship-resistant. The current solution to this is to decentralize the sequencers, which a lot of current rollups plan to do, though this brings with it its own set of issues. Another way to solve this in some form would be to separate validators and the ordering of transaction lists (take a look at Vitalik’s paper if you’re interested).

To conclude, the layers of the modular stack receive revenue through transaction value. Users obtain value from transacting on a layer and are thus familiarised with paying fees. The value thus refers to the value a user receives from having their transactions included on a layer.

Bridging

As we’ve discussed previously, if a rollup has a settlement layer, it will have a trust-minimized bridge to other rollups via the settlement layer. But what happens if the rollup is sovereign, or if it wants to bridge to another cluster? Let’s look at cross-rollup communication.

In the case of two sovereign rollups that want to communicate, they can make use of light client technology, much like how IBC functions. The light clients would receive block headers from the two rollups, along with the proofs that the rollups use, via a p2p network. This could work either via a lock and mint mechanism, such as with IBC, or via the validators through a relayer. With chains built using the Cosmos SDK and those that leverage Tendermint or Optimint, bridging becomes much more seamless, as you can take complete advantage of IBC with ICS. However, this requires the two chains to include each other's state machines and to have the validators of the bridging chains sign off on transactions. Other ways to bridge can exist as well. We could, for example, imagine a third chain that functions with some sort of light clients, to which the two chains that want to bridge stream their block headers, and which would then function as a settlement layer for the two. Or you could rely on a Cosmos chain to act as an “intercluster rollup hub”, where the validators of the chain operate the bridge by following the conditions of the rollups. There also exists a wide variety of bridging-as-a-service chains, such as Axelar and many others.
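To make the light-client, lock and mint idea more concrete, here is a heavily simplified Python sketch. It is not how IBC is implemented. The `LightClient` and `MintBridge` classes, the `nonce:recipient:amount` event encoding and the SHA-256 Merkle tree are assumptions chosen for illustration, and header verification itself is skipped.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _next_level(level):
    if len(level) % 2:
        level = level + [level[-1]]  # duplicate the last node on odd levels
    return level, [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves):
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        _, level = _next_level(level)
    return level[0]


def merkle_proof(leaves, index):
    """Sibling hashes from leaf to root, each flagged if it sits on the left."""
    level, proof = [h(leaf) for leaf in leaves], []
    while len(level) > 1:
        padded, parents = _next_level(level)
        sibling = index ^ 1
        proof.append((padded[sibling], sibling < index))
        level, index = parents, index // 2
    return proof


def verify(leaf, proof, root):
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


class LightClient:
    """Stores trusted event roots of the counterparty rollup, keyed by height."""

    def __init__(self):
        self.roots = {}

    def update(self, height, event_root):
        # Assumption: a real light client verifies the header and the
        # rollup's proofs before trusting the root. Skipped here.
        self.roots[height] = event_root


class MintBridge:
    """Mints on the destination chain once a lock on the source is proven."""

    def __init__(self, client):
        self.client, self.balances, self.claimed = client, {}, set()

    def mint(self, height, lock_event, proof):
        root = self.client.roots.get(height)
        if root is None or not verify(lock_event, proof, root):
            raise ValueError("lock event not proven against a known header")
        if lock_event in self.claimed:
            raise ValueError("lock event already claimed")
        self.claimed.add(lock_event)
        _nonce, recipient, amount = lock_event.decode().split(":")
        self.balances[recipient] = self.balances.get(recipient, 0) + int(amount)


# Lock events emitted on the source rollup at height 1 (illustrative data).
events = [b"0:alice:10", b"1:bob:5", b"2:carol:7"]
client = LightClient()
client.update(height=1, event_root=merkle_root(events))
bridge = MintBridge(client)
bridge.mint(1, events[1], merkle_proof(events, 1))
print(bridge.balances)  # {'bob': 5}
```

The destination chain never trusts the relayer that delivers the event and proof. It only trusts the header its light client has accepted, which is why light clients carry the security of this kind of bridge.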

However, by far the easiest way to facilitate bridging is for execution rollups to use the same settlement layer, since they would have trust-minimized bridge contracts on it.

Bridges between layers are important for two reasons. First, they allow for unified liquidity. Second, by allowing protocols and layers to be composable with one another through sharing state, we unlock new levels of interoperability. State sharing refers to the ability of one chain to make calls to another chain. Of special interest here are the abilities of interchain accounts with ICS-27.

We can therefore conclude that light clients are crucial in interoperability standards like IBC. As a result, Celestia light clients will enable more secure interoperability between chains in the various clusters. As for Celestia’s own connection to IBC, the plan is to use governance to whitelist certain chains’ connections to Celestia, in order to limit state bloat.

End-User Verification

The various monolithic and modular design approaches of the last few years are innovative, and the amount of talent building them is astonishing. Still, there is one fundamental question underlying the various trade-offs that has been around in our space for quite some time. We believe it centres around end-user verification and the need for it.

You can argue endlessly, and CT will, about the trade-offs different designs make. But in the end, it perhaps comes down to one question: does having the possibility of end-user verification matter? A lot of design trade-offs (e.g. block size) centre around the ease of running a full node, and DAS enables light clients to be “first-class citizens”, comparable to full nodes.

The underlying assumption is that users will care about being first-class citizens at all. The fact that users can easily verify the chain by running a light client or full node does not mean they will, or that they will value the ability to do so.

The argument in favour is rather straightforward. If users do not care about verification, you might as well run a centralized database, which will always be more efficient, since decentralisation often comes at the expense of efficiency. Hence the reason we are building crypto protocols at all is so that end users are able to verify the compute.

The argument against is that as long as the network is sufficiently decentralized, end-user verification in itself does not matter. Users won’t care about it as long as the UX is good. For now, there is no clear answer to how important end-user verification is. However, we think end-users being able to verify the chain is a goal worth chasing, and the reason why many are building in this space.

The Future of the Modular Stack

This section will serve as a way to envision what the modular stack built on top of Celestia could look like in the future. We will look at an architectural overview of how we view the modular stack, and what kind of layers we might see.

Underneath is a diagram of many of the possible layers that can function within a modular stack. They all have one thing in common: they're all using Celestia for data availability. We will likely see various sovereign rollups, both Optimistic and ZK rollups, which will function without a settlement layer. We're also likely to see rollups utilise Cevmos as a settlement layer, alongside various app chains. There is also the possibility that we might see other types of settlement layers. These settlement layers could be restricted, which means that they would either have pre-set contracts just for bridging and rollups, or rely on governance to whitelist contracts.

On the right side of the diagram are other non-native settlement chains which could also have rollups that utilise them for liquidity and settlement while relying on Celestia to provide an attestation of transaction data to the settlement layer.

All of these clusters would be connected through various bridging services, both new and old.

What you also don't see is all of the infrastructure that will be built to provide easy access to the various functionalities of Celestia, such as RPC endpoints, APIs and much more.

Endnote

If you’re looking to build on top of Celestia, whether that would be in regards to rollups, or other types of infrastructure, then do reach out. We would be happy to talk to you!