The Modular Stack

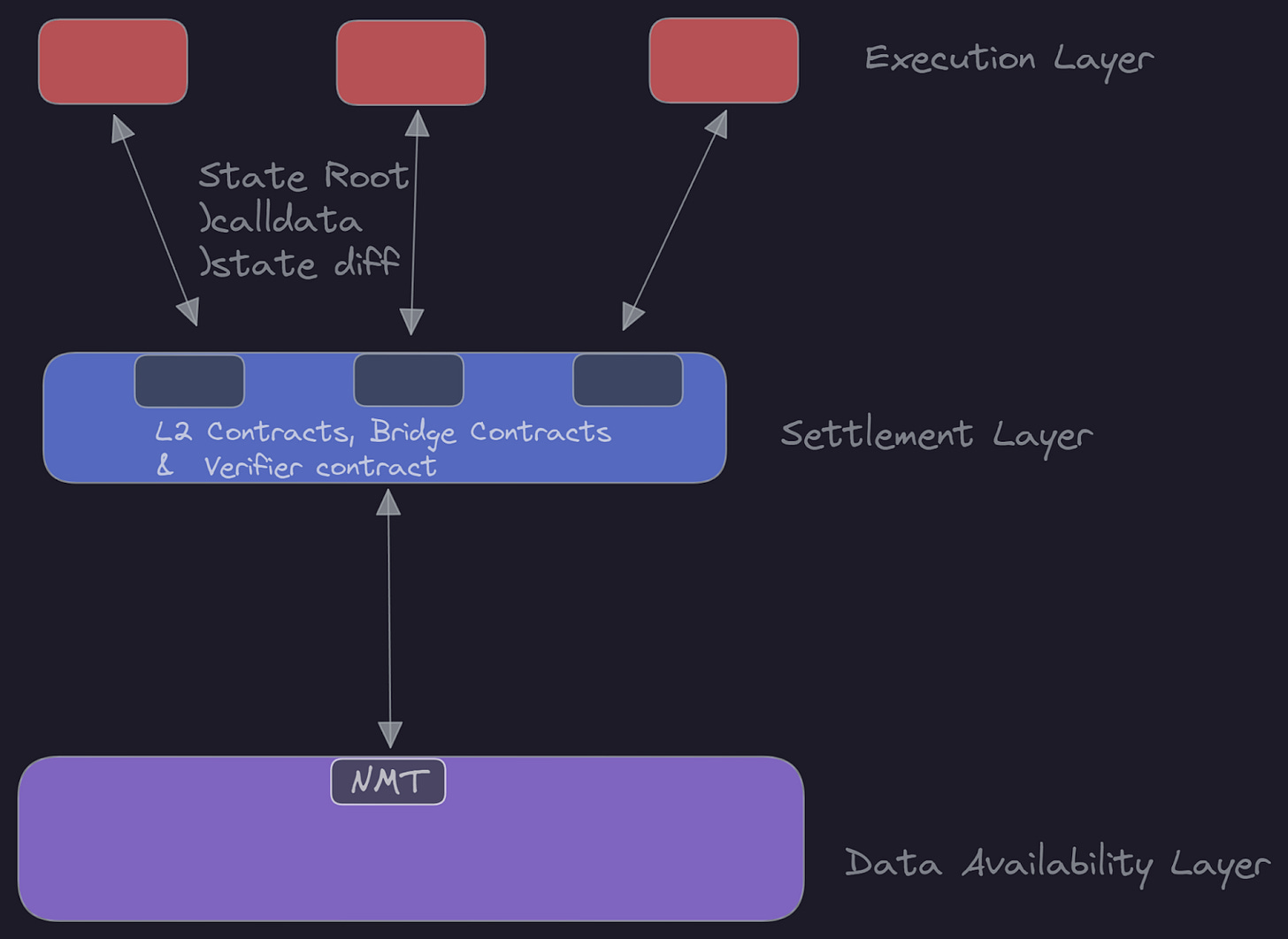

If you’ve read any of our prior articles, or if you’re familiar with the way modular blockchains work, you’ll know that they work quite differently from their monolithic counterparts. With modular blockchains, you split apart the functions that a monolithic blockchain usually performs on its own. These are, namely, Execution (Transactions/Smart Contract Interactions), Settlement (Dispute Resolution), Consensus and Data Availability. In a modular stack, you might have a data availability (and consensus) layer, a settlement (liquidity and dispute resolution) layer, and execution layers (henceforth referred to as rollups; keep in mind, however, that settlement layers are rollups as well in the modular stack). We’ve already looked at how the MEV supply chain looks in a monolithic setup, as well as in a semi-modular setup (with off-chain execution rollups), such as on Ethereum. When you start to split up more of the layers of a blockchain, the supply chain changes even further. If you need a refresher on the Modular Stack, we recommend reading up on our prior article – The Modular World.

This is an example of how a supply chain could look in a modular setup, regarding MEV. Underneath, we’ll take a bird's-eye view of how this looks in the entire stack:

In a modular stack, the sequencers essentially act as the builders do in a monolithic setup. However, the sequencer also proposes blocks to the underlying layers to which it is connected. As such, we can call sequencers both builders and proposers. This is in the absence of any Proposer-Builder Separation (PBS) implementation, which would likely be implemented by third parties (or the rollups themselves) to minimize priority gas auction (PGA) congestion on the execution layer.

The validators on the data availability layer are purely proposers, exactly like their counterparts on Ethereum (with MEV-Boost). The sequencers, by contrast, build blocks from bundles of transactions from users and searchers. They then send/propose these to the DA or settlement layer (depending on whether it is a smart contract rollup or not), where the validators will include transactions/blocks based on the fee being paid (and into their respective Namespace). The Celestia validators are unable to reorder any transactions, just like their counterparts, as they would get slashed.

Essentially, the sequencers, now builders, are able to put together the highest-value block (which could contain cross-domain MEV opportunities, for example) to be included in the DA-level block as soon as possible by the aforementioned validators. When a block is finalized on the DA layer, we essentially get complete finality on the rollup (depending on rollup nodes having settled state, or on dispute periods, although all data is now available). This opens up the opportunity for some interesting, and still somewhat theoretical, DA layer MEV interactions, which we’ll cover later on in the article. Before we start digging into cross-domain, bridging and DA layer MEV, we need to understand how the modular stack functions in regard to liveness, finality, and incentivization, which all affect how and where MEV is extracted, as well as which off-chain actors inhabit the ecosystem.

Liveness

When talking about modular ecosystems, liveness becomes critical, because as you add more components to a stack, they all rely on each other. For instance, you need liveness between nodes on the various layers, but also between them and the DA layer. It is therefore essential that we also take a look at liveness in a modular setup. If you require a primer on liveness, our previous article covers decentralized sequencers and the methods they can use to achieve it. In a modular stack, sequencers on specific rollups need to give liveness guarantees, but essentially derive some of their security from the layer below. In some cases, especially for app-specific rollups on top of a shared settlement layer, external sequencing is also a possibility. Here, the rollup contract on the underlying layer (that has smart contracts) would be used to order transactions. In this setup, the users of the app-chain essentially just need to trust the underlying L1 for liveness, instead of having to trust another leadership election system (and its sequencer network). However, this means that the rollups in this setup would still be limited by the data sequencing abilities of the settlement layer, which is very much the case for rollups on Ethereum today.

An excellent way to illustrate how liveness works is by looking at the CAP Principle—Tim Roughgarden especially has an excellent explanation (alongside many other great lectures). CAP stands for three informal properties that you are required to possess for a distributed system to work as intended (regarding uptime and security).

C: Stands for consistency, basically that the UX should be identical from user to user, and it should feel like you’re interacting with a single database every time you use the system.

A: Stands for availability, which is what we also refer to as liveness. The fact that all messages are handled by nodes in the system, and that they are reflected in future blocks/queries. All commands need to be carried out.

P: Stands for partition tolerance (or censorship resistance), which means that the system needs to uphold consistency and availability even in the event of an attack, or partition of network nodes.

However, what the principle states is that no distributed system is able to uphold all of these properties. Here, C (consistency) is especially hard to achieve, while retaining all the other properties. To gain consistency, you usually have to give up on availability. However, availability (liveness) is a core property of a distributed system, so we can’t give up on that. This is the trade-off that most singular databases in web2 make, since they want consistency over anything.

Various consensus mechanisms make different tradeoffs depending on their setup when it comes to the CAP principle. For example, if Tendermint gets stake weight attacked, it might go into an asynchronous phase of a partially synchronous model (keeping consistency). The other side of the coin here is that the longest-chain consensus (non-fast-finality) gives up on consistency in the event of attacks.

What this basically shows is that you need to pick two out of the three, when your network is under attack. This means you either keep consistency or liveness.

So, why are we mentioning all of this? Well, it is crucial for the sequencer networks of rollups in a modular stack to achieve part of the CAP theorem. Otherwise, the MEV will be entirely taken by the team controlling that singular sequencer (unless other public goods setups are in effect, but there’s still a trust assumption in that case, the centralized sequencer and the team behind it). It is also to show that the sequencer set needs backstops in the case of liveness failure, to not lose consistency (or safety). One of the options that could be utilized to decentralize the sequencer set, is by using a stake weight leadership election algorithm. However, one of the issues that you might run into with requiring other validators (sequencers in this case) to sign off on blocks, is that of censorship. You already inherit your liveness properties from the underlying layer. In this case, optimizing for liveness, over post-attack safety (as is the case with Tendermint for example), might be the better option. Essentially, the network’s leadership election should only pick a leader that then proposes a block, instead of requiring other sequencers to validate the same block. We will go more in-depth on other solutions towards censorship resistance in the incentivization section.
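To make the stake-weighted leadership election idea a bit more concrete, here is a minimal Python sketch. The function name `elect_leader`, the shared `seed`, and the use of SHA-256 are our own assumptions for illustration and are not taken from any specific sequencer implementation. The point is only that a single leader is picked in proportion to stake, without any other sequencer having to sign off on the block that leader proposes.

```python
import hashlib


def elect_leader(stakes: dict[str, int], seed: bytes, height: int) -> str:
    """Pick one sequencer for a block, with probability proportional to stake.

    `seed` is a hypothetical shared randomness value; a real system would
    need a source that participants cannot bias.
    """
    total = sum(stakes.values())
    if total <= 0:
        raise ValueError("total stake must be positive")
    # Derive a pseudo-random ticket from the seed and the block height.
    digest = hashlib.sha256(seed + height.to_bytes(8, "big")).digest()
    ticket = int.from_bytes(digest, "big") % total
    # Walk the sequencers in a fixed order and find whose stake range
    # contains the ticket.
    cumulative = 0
    for name in sorted(stakes):
        cumulative += stakes[name]
        if ticket < cumulative:
            return name
    raise AssertionError("unreachable: ticket is always below total stake")


if __name__ == "__main__":
    stakes = {"seq-a": 60, "seq-b": 30, "seq-c": 10}
    for height in range(3):
        print(height, elect_leader(stakes, b"epoch-seed", height))
```

Every honest node that knows the seed and the stake table arrives at the same leader for a given height, so no extra round of voting is needed to agree on who proposes.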

One extra unique thing about running on a separate DA layer is that you can’t do a double spend, as all transaction data is published—unless you perform data withholding attacks; however, that should lead to slashing depending on the consensus algorithms used. In the case of similar DA layer attacks from validators, the consensus layer will also slash these. Moreover, in the case of data withholding, you can retrieve the data from the namespace (or similar).

The way sequencers of rollups maintain liveness outside the rollup system is through the underlying layer it is connected to, whether that is a settlement or DA/Consensus layer (however the settlement layer also retains liveness from the DA layer, so essentially the DA layer is what contingencies are built on top of). They are sending received transaction information to the underlying layer, which then gets sorted (in the case of Celestia) into their namespace.
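As a rough illustration of the namespace idea, the sketch below groups posted data by namespace and lets a rollup node read only its own slice. This is a plain dictionary grouping under our own hypothetical names (`sort_into_namespaces`, `read_rollup_data`); it is not Celestia's actual data structure, which additionally commits to the data so that inclusion can be proven.

```python
from collections import defaultdict


def sort_into_namespaces(blobs: list[tuple[str, bytes]]) -> dict[str, list[bytes]]:
    """Group posted blobs by namespace, keeping the order they were posted in."""
    by_namespace: dict[str, list[bytes]] = defaultdict(list)
    for namespace, data in blobs:
        by_namespace[namespace].append(data)
    return dict(by_namespace)


def read_rollup_data(da_block: dict[str, list[bytes]], namespace: str) -> list[bytes]:
    """Return only the data belonging to one rollup (empty if it posted nothing)."""
    return da_block.get(namespace, [])


if __name__ == "__main__":
    posted = [("rollup-a", b"tx1"), ("rollup-b", b"tx9"), ("rollup-a", b"tx2")]
    block = sort_into_namespaces(posted)
    print(read_rollup_data(block, "rollup-a"))
```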

The resulting state roots of the transactions are settled either on the rollup’s own nodes or on the settlement layer in use. To uphold safety guarantees, the full transaction data has to be stored (or made available) on-chain, although we don’t need to execute it there (since we do that off-chain). This is what provides us with data availability, which allows rollup nodes to see that all needed data associated with the rollup was published to the underlying layer. This is where liveness and security are inherited from.

A ZK rollup relies on the censorship resistance of the underlying layer for its liveness, but not for its security, since you’re not required to monitor transaction validity (it is ensured by the ZKP). However, regarding consistency, there are some variables (such as a trusted setup). Furthermore, as we pointed out in the last article, the complex computation behind ZKPs (and the time/cost it takes) means that batches can sometimes fall behind. So while some batches/blocks have finality on the execution layer, it might still take some time before they receive non-pending finality on the L1, which is when the recursively proven ZKP batch is verified (either via a verification smart contract, or by rollup nodes in a sovereign setting). The reason for this is both time and cost: verifying a large number of transactions at once is cheaper per transaction than verifying smaller batches more often. That means that even though you technically can lower costs with ZKRs compared to ORs, a rollup’s transaction activity has to mature before it reaches an equilibrium of cost and activity. This threshold is bound to get lower and lower as rollups mature and as hardware acceleration tech, proving networks, proving schemes, and recursion increase in efficiency.
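The cost argument is easiest to see with a little arithmetic. The sketch below assumes a fixed on-chain verification cost per batch plus a per-transaction data cost; the function name and all of the numbers are hypothetical and only there to show the shape of the trade-off.

```python
def cost_per_tx(batch_size: int, verify_cost: float, data_cost_per_tx: float) -> float:
    """Amortized L1 cost per transaction for one proven batch.

    verify_cost is paid once per batch, so its per-transaction share
    shrinks as the batch grows; data_cost_per_tx does not.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return verify_cost / batch_size + data_cost_per_tx


if __name__ == "__main__":
    # Hypothetical gas figures, not measurements from any real rollup.
    for size in (100, 1_000, 10_000):
        print(size, cost_per_tx(size, verify_cost=500_000, data_cost_per_tx=2_000))
```

With these made-up numbers, a batch of 100 transactions costs 7,000 units per transaction while a batch of 1,000 costs 2,500, which is why a rollup with low activity is tempted to wait longer between proofs.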

Finality

Semantics (or subjective determination from the perspective of who the observer is). Does the trust-minimised two-way bridge decide what finality is? Well, that’s the way it would seem if you look at withdrawal times for rollups currently. This causes 3rd parties to pop up and take on inventory risk, providing instant withdrawals for end-users. We do think it’s relevant that we look at the difference between stages of finality, especially on the rollup side (particularly sovereign ones), so although much of this will be semantics – remember that semantics are important.

Finality in rollups refers to the assurance that a particular transaction or set of transactions has been irreversibly committed to the blockchain(s). In rollups on top of Ethereum, this assurance is achieved through a combination of several mechanisms.

In optimistic rollups, finality works differently compared to zk-rollups. Optimistic rollups primarily submit a compressed batch of transactions that includes headers (showing what sequence of context is included), context (number of txs, block info, time, etc.) and transaction data that commit to the rollup’s state transition function (which is then posted to the canonical chain). The rollup’s L1 smart contract then assumes that the transaction is valid and executes it. If the transaction is indeed valid, everything proceeds as normal. However, if the transaction is invalid, the rollup's canonical chain can be challenged. The challenge process involves submitting fraud proofs, which prove that the transaction(s) were invalid. If a valid fraud proof is submitted, the smart contract (and rollup nodes) will roll back the invalid transaction and revert any state changes that it caused in the canonical transaction chain. This means that optimistic rollups do not provide instant finality (despite all data being available); instead, they have a challenge period during which transactions can be disputed (which is what the canonical bridge’s withdrawal period is based on). The length of the challenge period varies depending on the specific optimistic rollup implementation. However, the arbitrary period is currently set to 7 days, because of the valuable blockspace of the Ethereum network. If you want a flow diagram to help you understand Optimistic rollups (and how they work currently), I highly recommend L2BEAT’s (click on the specific rollup you’re interested in).

In zk-rollups, zk-proofs are used to validate and bundle multiple transactions together into a single proof. These proofs are generated by the rollup's prover(s) after they have received the execution trace that attests to the validity of a certain rollup block batch. The proof gets submitted to Ethereum for verification by the smart contract governing the rollup (alongside either inputs or outputs as calldata, depending on the implementation). Once the smart contract has verified the proof, it posts the relevant data, effectively committing the transactions to the blockchain. The on-chain mechanism is Ethereum's consensus mechanism, which provides the final finality guarantee, typically enforced by “waiting” through two epochs of votes by a supermajority of the Ethereum validator set (unlike deterministic consensus protocols, Ethereum is probabilistic). Once a validity proof has been verified on-chain on the L1, it is considered final and irreversible, providing a high level of assurance that the transactions included in the block are also final.

What should be noted is that the above is from the perspective of the validating (canonical) bridge to the rollup. If you’re purely an L2 user, then finality is going to feel much faster, and your transaction is essentially final when it is Pending_On_L1 (for Optimistic Rollups) or Finality_On_L1 (for ZK Rollups) (see underneath).

One mental model for the finality of rollups is putting it into 4 different stages, depending on the rollup. These are:

Pending_On_L2: Soft commitment given by the rollup’s sequencer(s) that the users’ transactions will eventually be committed and finalized on the underlying layer, of which it derives security.

Finality_On_L2: The sequencer(s) has committed to the state transition function of the rollup, and the block(s) have been added to the canonical chain of the rollup.

Pending_On_L1: Transaction input or output/state transition function has been posted to the L1, but the dispute period or validity proof has yet to be posted, or period ended – this requires two successive epochs to have passed for Ethereum. This is the point where most Optimistic rollups say finality has been reached, however according to the canonical bridge – there’s still, at this point, an arbitrary 7-day challenge period.

Finality_On_L1: The dispute period has ended for Optimistic rollups, or the validity proof has been posted and verified, as well as confirmed in two successive epochs with a supermajority.
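The four stages can be read as a simple classification over what has happened to a transaction so far. The sketch below is one possible encoding; the `TxStatus` fields and the `classify` function are hypothetical names of ours, and the boundary between Pending_On_L1 and Finality_On_L1 is simplified to "the L1 has finalized the data, and the validity proof is verified or the dispute period is over".

```python
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    PENDING_ON_L2 = 1
    FINALITY_ON_L2 = 2
    PENDING_ON_L1 = 3
    FINALITY_ON_L1 = 4


@dataclass
class TxStatus:
    in_rollup_block: bool       # block added to the rollup's canonical chain
    posted_to_l1: bool          # tx data or state commitment posted to the L1
    l1_finalized: bool          # the L1 itself has finalized that data
    proof_or_window_done: bool  # validity proof verified, or dispute period over


def classify(status: TxStatus) -> Stage:
    """Map a transaction's status to one of the four finality stages."""
    if status.posted_to_l1 and status.l1_finalized and status.proof_or_window_done:
        return Stage.FINALITY_ON_L1
    if status.posted_to_l1:
        return Stage.PENDING_ON_L1
    if status.in_rollup_block:
        return Stage.FINALITY_ON_L2
    # Only the sequencer's soft commitment exists at this point.
    return Stage.PENDING_ON_L2


if __name__ == "__main__":
    # An optimistic rollup batch that is on the L1 but still inside its dispute period.
    print(classify(TxStatus(True, True, True, False)))
```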

The soft confirmations given by a single, centralized sequencer are possible because of the (trusted) promise from the sequencer that it will not reorder transactions, nor touch MEV. The sequencer can also provide instant transaction confirmations backed by a security bond that will be forfeited if the promised block inclusion is not met, ensuring economic finality. If we move beyond centralized sequencers to decentralization of the sequencer set, much of this MEV is likely to be extracted by the chosen leader for a certain rollup block, or through a different mechanism (as we covered in part 1). There are, and should be, mechanisms in place to prevent a proposer from reordering the transactions it received from a builder (or via FCFS); reordering should be punishable by the slashing of the sequencer’s bond. This bond should be high enough to deter sequencers from doing so, but not so high that it gatekeeps the ability to become part of a permissionless sequencer set, which is a fine line to walk. This is why the ability to force transactions through, to either the underlying smart contract of the rollup or the DA layer where transaction data is posted, is incredibly important.

For sovereign rollups, finality works slightly differently, since we don’t have to post proofs and calldata (tx data) to a congested L1. A recent post by Cem Ozer and Preston Evans goes into detail on exactly how this is possible with sovereign ZK Rollups. What's critical to note in this specific setup, especially in regard to MEV extraction, is that this design inherits the transaction (and proof) ordering from the base layer, which means that the proposer on the base layer becomes the leader. This is similar to the "Based" design, which we'll refer to several times in this article when discussing inheriting the ordering of the base layer (essentially outsourcing sequencing to the L1). In this setup, you're also dependent on the block time of the base layer (as a result of outsourcing sequencing and ordering).

If a rollup posts state-diffs (such as Starknet and ZKSync), even running a full node won't help reduce latency (since the full transaction data isn’t available). Most zk-rollups today use centralized sequencers to give soft finality guarantees (a commitment to the end-user that the transaction data and proof will eventually make their way to the L1). But to make decentralized zk-rollups competitive on latency, we need to reduce latency for light clients. It is possible to do this without increasing proof verification costs by creating proofs in real-time (timestamps) and using recursion to aggregate them into a batch proof later (such as on Bonsai Network). As long as you distribute incremental proofs right away via the p2p network (on the rollup side) prior to the on-chain batch proofs, light clients will experience fast soft finality. However, the fast finality guarantee that the light client is given is only as fast as the block time of the underlying DA layer (since the Pure Fork Choice Rule is inherited from it).

The slower batch proofs are still posted on-chain (such as with existing ZK Rollups), so there's no extra cost for on-chain verification, beyond the on-chain verification of the batch proof every DA layer block.

This provides a trust-minimized bridge with the L1, where the bridge is no longer the source of truth for light clients. This is only possible if you’re bridging between protocols sharing the same DA layer, or operating in the same cluster, otherwise you're introducing additional trust assumptions on the rollup side.

In today's rollups, the L1 smart contract enforces the fork-choice rule (the rule deciding the canonical chain; for Tendermint chains this is ⅔ stake weight of the valset, and for Ethereum it is Casper FFG + LMD GHOST) by verifying the zk-proof and checking that it builds on the previous proof and processes all relevant “forced transactions” (through the L1). Without a smart contract, it is still possible to enforce this rule by requiring proofs to be posted as calldata to the L1 and adding a statement to the zk-proof that it builds on the most recent valid proof (the ordering of these proofs on the base layer decides the canonical chain). This allows you to prove the fork-choice rule directly, instead of enforcing it client-side. On a DA layer like Celestia, this is easy to do, since you can look through the Namespaced Merkle Tree (NMT) for transactions belonging to a specific rollup. If you want to read more about data commitments, like NMTs, read our article on data commitments here.
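A small sketch may help show how the ordering of proofs on the base layer can decide the canonical chain without a smart contract. Here each posted proof states which state root it builds on, and a node simply walks the proofs in base-layer order. The `PostedProof` type, the `valid` flag (a stand-in for real zk-proof verification) and `canonical_head` are illustrative assumptions, not an existing client's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PostedProof:
    prev_root: str  # state root this proof claims to build on
    new_root: str   # state root after applying the proven batch
    valid: bool     # stand-in for the result of verifying the zk-proof


def canonical_head(genesis_root: str, proofs_in_base_layer_order: list[PostedProof]) -> str:
    """Follow the first valid proof that extends the current head, in posting order."""
    head = genesis_root
    for proof in proofs_in_base_layer_order:
        # A proof only counts if it verifies and builds on the most recent valid proof.
        if proof.valid and proof.prev_root == head:
            head = proof.new_root
    return head


if __name__ == "__main__":
    proofs = [
        PostedProof("r0", "r1", True),
        PostedProof("r0", "r1-alt", True),  # competing fork, posted later: ignored
        PostedProof("r1", "r2", False),     # fails verification: ignored
        PostedProof("r1", "r3", True),
    ]
    print(canonical_head("r0", proofs))
```

Because every node reads the same ordered list from the base layer, they all end up at the same head without exchanging any further messages.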

In terms of models to gain security guarantees on finality, Josh Bowen of Astria puts it well, and I’m going to quote him here.

“Proof of Stake committees, which come to consensus over a given state root and create an aggregated signature. (Committee with stake behind it)

Succinct zero knowledge proof of a given state transition. (Validity Proof)

Signatures from a single actor attesting to a given state root backed by a bond which can be slashed if the state root is proven to be invalid via a challenge mechanism. (Fraud Proof)”

State of Rollup MEV

In regard to the current state of rollup MEV it makes sense to look at ecosystems with a lot of activity (and hence extractable MEV). For instance, Arbitrum previously chose to ignore MEV altogether by using a first-come, first-served mechanism, but this places higher hardware requirements on the nodes and reduces the cost of DDoS for searchers, who will spam the sequencer to get included in blocks. However, the larger a window is, the larger amount of MEV is likely to be extracted. This is likely leading to less centralization in regard to MEV actors, though. While if you were to lower the window, there would likely be less MEV, but high centralization in regard to who extracts it (based on geographical adjacency).

This is currently being addressed by the Arbitrum team, and its community, with much drama around whether they should implement a PoW type algorithm (calculating the lowest nonce) to be included in rollup blocks. If you’re interested in how the Arbitrum team wants to implement this, we highly recommend you read this forum post, which goes into detail. However, by adding considerable cost to the extraction, the profitability of such goes down the drain. This means that you’re essentially adding a race-to-the-bottom for searchers, who will now have to compete on PoW calculations and spend money on upkeep of additional hardware. This is arguably not a particularly solid implementation, since if there’s still profit to be made, searchers will commit to make it. As we’ve argued previously, we would much rather see MEV go into the security budget of a decentralized network (which would also mean going to the token holders), or through public goods funding (but then we add an entirely different discussion into what accounts for public good).

One important part of what rollups enable is the possibility of separating transaction ordering from execution, which will go a long way in alleviating some of the MEV issues that currently exist in monolithic blockchains and their PBS mechanisms (currently off-chain via MEV-Boost). What happens currently, though, is just a redirection of MEV profits from validators to rollup sequencers. If you think in terms of the security budget of the underlying layer, this is strange, since the validators now only earn a DA fee. I would almost propose that in a modular world, some of this MEV is directed down to the validators and their security budget, which is where the rollup derives much of its security from. This could come in the form of a % base fee on the extracted MEV that goes to the DA/Consensus layer – call it public goods.
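As a back-of-the-envelope illustration of such a percentage fee, the sketch below splits extracted MEV between the DA/Consensus layer and the rollup sequencer. The function name and the 5% rate are purely hypothetical; amounts are integers in the token's smallest unit and the share is given in basis points to avoid rounding surprises.

```python
def split_mev(extracted_mev: int, da_share_bps: int) -> tuple[int, int]:
    """Return (amount to the DA layer, amount kept by the sequencer).

    da_share_bps is the DA layer's cut in basis points (500 = 5%).
    """
    if not 0 <= da_share_bps <= 10_000:
        raise ValueError("da_share_bps must be between 0 and 10000")
    da_cut = extracted_mev * da_share_bps // 10_000
    return da_cut, extracted_mev - da_cut


if __name__ == "__main__":
    print(split_mev(1_000_000, 500))
```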

However, one important thing to take into account when talking about a competitive and decentralized rollup MEV market is, as Vitalik put it back in 2020: “a competitive MEV environment has other negative externalities, e.g. it bloats the chain with a high rate of failed transactions”, which in turn leads to less decentralization due to higher resource requirements. All the while, the execution layer (rollup) derives security from the base layer, but doesn't provide it with much of the value extracted apart from a base (or priority) DA fee. This is, of course, dependent on what sequencing is used, its ordering and the separation of builder/proposer.

Incentivization

In the ever-evolving landscape of decentralized networks, the lines between economics and ideology blur, and the true cost of progress is obscured by the promise of profit. Yet, as those networks grow and thrive, those who bear the burden of payment must navigate the perilous waters of inflation and incentive. MEV is the lifeblood that flows through these networks, driving the participants forward in their pursuit of economic gain, even as the ideals of decentralization hang in the balance.

As we laid out in the previous article, someone at the end of the day needs to bear the economic burden of paying those that provide resources to a decentralized network. This is especially true in the beginning years of a network, as it aims to grow and mature. For mature and active chains, like Ethereum, this inflation is able to be lowered considerably. Here MEV plays a huge part in incentivizing the network participants, which makes it worth it, economically, to run certain activities. Obviously there’s also the ideological idea of decentralized networks, but at the end of the day, most of the actors and participants are participating because it makes sense from an economic point of view.

And while we’ve generally, at least in our past article, made the case for MEV rewards to be used in the incentivization of protocol security (via redistribution to the validators securing the network), there are also other views on possible solutions. These include using privacy-preserving solutions to minimize MEV as much as possible, such as running parts of the MEV supply chain on SGX (TEE) hardware to store encrypted blocks and validate them permissionlessly without giving away vital information. However, the difficulty of obtaining TEE units (although this is getting a lot easier) is an entirely different story, and might also lead to centralization towards those with access to the hardware. Furthermore, there’s been considerable discussion around the actual safety of TEEs (a lot of drama here in the past year). Do you want to trust hardware, or do you want to trust cryptography? Cryptography is likely to have more performance overhead but, if implemented correctly, higher security.

Other ideas include things such as time lock encryption, threshold encryption and so on. Although what’s important to note, is that even with current implementations of these solutions, MEV still slips through the cracks – whether that’s benign MEV (which is vital for the running of the on-chain financial systems), or non-benign MEV that’s harming the end-users. You essentially either allow arbitrageurs to give users fair prices at the expense of liquidity providers, or you compensate LPs via inflation, hurting the token holders (the current Osmosis strategy). Now, if that is happening on an application-specific chain, where you are also paying for security via your native token – you’re essentially double inflating your token holders.

However, on the flip side, to get people to use the product (which will eventually provide the security budget from MEV and fees), you need to first mature the rollup or application to the point where it can support itself without much inflation (such as with Ethereum). That means you’re essentially forced to incentivize usage and liquidity providers, via issuance, as a starting point. You need the inflationary token to fund the security budget until utilization of said application(s) has matured. A way to limit some of this pain is to utilize an EIP-1559-style token burning mechanism (a base fee, fixed within each block, that is burned), which will offset more and more of the issuance as the network grows in usage.
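To see how a burn can offset issuance as usage grows, consider this small sketch. It assumes a fixed issuance per block and a base fee per unit of gas that is burned in full; the function name and all numbers are hypothetical, and the base fee is held constant here even though in EIP-1559 it adjusts with demand.

```python
def net_supply_change(issuance_per_block: int, base_fee_per_gas: int, gas_used: int) -> int:
    """Tokens added to supply in one block after subtracting the burned base fee."""
    burned = base_fee_per_gas * gas_used
    return issuance_per_block - burned


if __name__ == "__main__":
    # Hypothetical numbers in the token's smallest unit.
    print(net_supply_change(1_000_000, 20, 10_000))  # low usage: still inflationary
    print(net_supply_change(1_000_000, 20, 80_000))  # high usage: net deflationary
```

With these made-up figures, the quiet block adds 800,000 units to supply while the busy block removes 600,000, which is the sense in which token holders feel less of the issuance as the network matures.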

With our thoughts surrounding incentivization out of the way, let’s look at it from another perspective – the reverse way to think of incentivization, is to think in terms of disincentivisation.

There have been some thoughts and implementation research into how to eventually move to leaderless MEV capture that gets redistributed to the end-users. Duality especially has been digging into this, with their announcement of Multiplicity. It is essentially based on the belief that blockchains with leadership mechanics are unfair for end-users, since they allow for much cheaper censorship. To improve this, they came up with the idea of multiple concurrent block proposers (MCBP): they essentially want several sequencers/nodes to contribute transactions to each block. What Multiplicity provides is essentially a gadget for non-leader nodes (or sequencers) to include additional transactions into a block. The way this works is somewhat similar to some of the leaderless first-come-first-serve ideas that we covered in part 1 of this series. It is based on the idea that for a leader to construct a valid block, it must include at least ⅔ (by stake weight) of the nodes’ transaction bundles.

That means you won’t be able to construct a valid block that omits transactions that are included in bundles from >⅓ of stake weight. This means a quorum has to be reached between sequencers/nodes with regard to the bundles of transactions coming from various sequencers. Each sequencer in the network constructs its own bundle of transactions (à la first-come-first-serve), which it signs and sends to the leader. The rule we just presented then applies: bundles representing ⅔ of stake weight must be included in a block. What this means is that to censor, you must bribe many more validators, which increases the security budget of the rollup.
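The validity rule described above can be sketched as a simple check. This is our own simplified reading of the idea, not Duality's actual protocol: signatures are omitted, and `block_is_valid`, `stakes` and `included_bundles` are hypothetical names. A block passes only if the bundles it includes come from at least ⅔ of stake weight and every transaction in those bundles appears in the block.

```python
def block_is_valid(
    stakes: dict[str, int],
    included_bundles: dict[str, set[str]],
    block_txs: set[str],
) -> bool:
    """Check the two-thirds inclusion rule for a leader's proposed block.

    stakes:           stake per sequencer
    included_bundles: sequencer -> transactions in the bundle the leader included
    block_txs:        transactions actually present in the block
    """
    total = sum(stakes.values())
    included_stake = sum(stakes.get(name, 0) for name in included_bundles)
    # Integer comparison for included_stake / total >= 2/3.
    if total == 0 or 3 * included_stake < 2 * total:
        return False
    # The leader may not drop transactions from a bundle it claims to include.
    return all(bundle <= block_txs for bundle in included_bundles.values())


if __name__ == "__main__":
    stakes = {"a": 40, "b": 30, "c": 30}
    bundles = {"a": {"t1", "t2"}, "b": {"t2", "t3"}}
    print(block_is_valid(stakes, bundles, {"t1", "t2", "t3"}))
```

Since any ⅔ quorum overlaps with every group holding more than ⅓ of stake, a transaction carried by more than ⅓ of stake weight ends up in at least one included bundle, which is why the leader cannot quietly leave it out.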

Another way to think of incentives is to look at the way most stake works in Proof-of-Stake Ethereum. Most stake in PoS Ethereum is being delegated to staking pools, who further delegate it to trusted (Lido) or bonded (Rocket Pool, Swell) node operators (infrastructure companies). But these pools could suffer from a risk called MEV hiding, where node operators are incentivized to hide the real value they make from a block and keep a larger fee for themselves (in the case of private order flow). An open market for block building solves this problem because builders need to maximize their public bids in order to be competitive (such as MEV-boost). As long as validators sell their blockspace to the highest bidder, there is very high confidence they are not engaging in MEV hiding on the side. This is also an issue that could become prevalent in rollups (specifically centralized ones), even when the sequencer sets are decentralized. It is therefore vital that there exists an open and transparent market for the MEV supply chain.

Off-chain Actors

Transaction Originators

Within the MEV supply chain, whether viewing it through a monolithic or modular lens, the input always comes from the transaction originator. The vast majority of order flow originates from retail users, for whom the predominant point of interaction with the chain is their wallet. These wallets are then the de facto aggregators of retail-originated order flow. An important extension of the ‘wallet’ as the typical example of the transaction originator comes by realizing that dApps are also significant order flow originators. While these originators so far haven’t been afraid to squeeze their users to juice up their margins (example being the near 1% ‘service fee’ charged on Metamask’s in-app swap functionality), there’s a much larger opportunity for them to cement themselves into the MEV supply chain.

Consider the parallel between MEV and Payment For Order Flow (PFOF) that we know from traditional finance. A zero-commission broker like Robinhood aggregates its users’ transactions and sells access to this order flow to market makers, who can then ‘earn the spread’ between these customer orders and the NBBO (the National Best Bid and Offer, i.e. the best nationally available bid/ask price, at or better than which brokers must execute trades). Market makers are happy to pay for this order flow because Robinhood orders are generally considered non-toxic, i.e. the retail trader likely doesn’t have any information asymmetry over the market maker and thus there likely is room to make a profit. While of course this TradFi analogy only partially applies to the types of MEV seen in blockchains, the link in the chain that clearly can be analogous to ‘our’ MEV is the PFOF opportunity.

Any entity in the supply chain that can capture significant order flow has the control to sell access to that order flow, and wallets currently are easily the de facto actors that are able to capture it. A way to monetize wallets and/or return user-originated-MEV-value to users would be for these wallets to sell some preferred private relay access (think: all Metamask swaps being routed to a single market maker and/or block builder that is able to execute transactions with some additional no-frontrunning/sandwiching guarantees). Naturally, this type of (off-chain agreed upon) exclusive order flow access can have a centralizing effect: the preferred recipient of the order flow will generally be able to build more profitable blocks, allowing them to return more value to the originator of the flow and leading to a positive reinforcing feedback loop that’ll serve as an incentive to increase their exclusive flow access.

With the majority of order flow originating from wallets, we could see the wallets becoming a sort of kingmaker by controlling where a very significant portion of order flow goes and how it is executed. Coincidentally, wallets are incentivized to an extent to route their order flow to the most dominant block builder in order to maximize the UX of their wallet (fast inclusion and predictably low tx fees). The centralizing effect of exclusive order flow access will get even stronger with the integration of PBS, as builders will be more dependent on exclusive PFOF to compete in the block building race, and doubly so with the increase in modularity and complexity of decentralized sequencers.

Validators running multiple decentralized sequencers will be forced to buy the ‘best’ order flow for all chains/sequencers in order to be the builder of the most profitable blocks. To prevent this centralizing effect on block builders, the alternative could be to auction off individual or batches of transactions to bidders in a more level playing field, a concept which has been dubbed an order flow auction (OFA) but which functionally is the crypto-equivalent of PFOF. The TL;DR of OFAs is that transaction originators can solicit bids from (some set of) bidders for their transactions by disclosing (some amount of) information on it, with the winning bid being chosen based on some criteria.
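As a rough illustration of the OFA idea described above, the following sketch runs a sealed-bid auction for a single transaction. Everything in it is an assumption made for illustration rather than a description of any existing protocol: the names `Bid` and `run_order_flow_auction`, the winner criterion (highest bid wins), and the `rebate_share` parameter that decides how much of the winning bid flows back to the transaction originator.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Bid:
    bidder: str
    amount: float  # what the bidder pays for the right to execute the order


def run_order_flow_auction(bids: List[Bid], rebate_share: float = 0.9) -> Optional[dict]:
    """Pick the highest bid and split the proceeds (hypothetical criterion)."""
    if not bids:
        return None  # nobody bid, so the order falls back to the public path
    winner = max(bids, key=lambda b: b.amount)
    rebate = winner.amount * rebate_share
    return {
        "winner": winner.bidder,
        "originator_rebate": rebate,                # value returned to the user/wallet
        "auctioneer_fee": winner.amount - rebate,   # what the auction operator keeps
    }


bids = [Bid("searcher_a", 10.0), Bid("searcher_b", 14.0), Bid("searcher_c", 12.0)]
result = run_order_flow_auction(bids)
print(result["winner"], result["originator_rebate"])
```

Real OFA designs differ mainly in the parts this sketch hard-codes: how much information bidders see before bidding, who is allowed to bid, and what the winning criterion is.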

While certain specific OFA implementations have been devised for trade execution (see DFlow, CowSwap, Hashflow, etc.), more generalized OFA implementations also warrant attention. Particularly, MEV-Share is an interesting protocol that could fill this need, by providing open access to searchers to users’ order flow through an OFA while returning MEV-able value back to the originator. The downside of the MEV-Share implementation for now is the fact that the ‘matchmaker’ role, which is responsible for matching private user transactions with appropriate searcher bundles, initially is likely to be a ‘permissioned’ role run by Flashbots. This is needed to prevent competing matchmakers from engaging in bidding wars (thus again depleting value returned to transaction originators) and to ensure that bundles are only sent to trusted builders that will honour the ‘validity condition’ that accompanies each bundle and which posits that extracted MEV is returned to the user.

As mentioned previously, in a more modular future wherein an increasing amount of value will be derived from the cross-chain blockspace market, the pressure on block builders and validators to purchase exclusive order flow on ‘all’ chains increases to ensure maximum profitability (in order to capitalize on cross-chain MEV opportunities). At the same time, an abundance of blockspace may shift pricing power for this blockspace away from the blockspace producers to the transaction originators. Regardless, these approaches will decrease the amount of value that accrues to validators (and thus ‘decrease’ the security budget) as the proceeds of these (off-chain) auctions are returned to the transaction originator (or the order flow seller). This once more highlights the friction field that emerges around the value that can be captured on any set of chains; the value from this zero-sum game can increase the chain’s security budget, flow back to transaction originators, but not both. We’ll be covering this more in-depth, in the cross-domain MEV section as well.

So, where may this all end up going? Firstly, a key risk that ought to be mitigated is the MEV dystopia, i.e. the threat of vertical integration of key parts of the MEV supply chain spurred on by exclusive order flow (see this presentation for more). Metamask has already set Infura to be the default RPC provider, which is arguably fine for now, but a slippery slope where Infura would suddenly privatize their order flow or start building blocks isn’t out of the question. It is important that these types of parties remain aware of their dominant role and that observant third parties expose and denounce misuse of this position. Secondly, with dApps being significant originators of order flow and thus MEV, we’ll likely see more apps working toward designs that help internalize MEV, whether through app-chain designs, protocol-level choices, or their own exclusive deals. An interesting example of a protocol-level choice is Penumbra’s FBA (frequent batch auction) design, which basically arbitrages their pools internally by executing all swaps within a block in a single batch against a constant function market maker (CFMM) curve (think: Uniswap curve), thus using flow encryption to internalize their MEV. An example of a dApp creating their own exclusive deal has been SushiSwap integrating Manifold Finance for private RPC access with some additional guarantees in place for users. Finally, a ton of innovation is likely to happen within the OFA design space, with a particularly exciting domain being the effects and implications of the increasingly cross-chain and modular world on OFAs.

Relayer(s)

Another “actor” that is going to play a large part in the modular world is the relayer. Relayers are responsible for the transfers of data between different blockchain systems. They especially become relevant for sovereign rollups, and rollups that don’t share the same base layer (such as a liquidity settlement layer). In the case of rollups that share the same DA layer, you could facilitate cross-chain swaps as long as the rollups respect each other's fork-choice rule while running a light node on each other (to get state transition information). In this setup, you’re also still reliant on relayers to facilitate the passing of messages, which in most cases will probably be facilitated through a standardized transport layer (such as IBC or Polymer). As such, it is certainly relevant that we describe how relayers function, and how they are being incentivized. There’s also MEV to be extracted here, which we’ll go more in depth with in the cross-domain MEV part. At least in the IBC ecosystem, most of the current use-cases of relayers (beyond facilitating token bridging and data communication) are for liquidity balancing across chains, for both searchers and market makers alike. There are also relayers in place for a lot of other cross-chain messaging protocols in other ecosystems, and relayers are also often used for off-chain order books, for example.

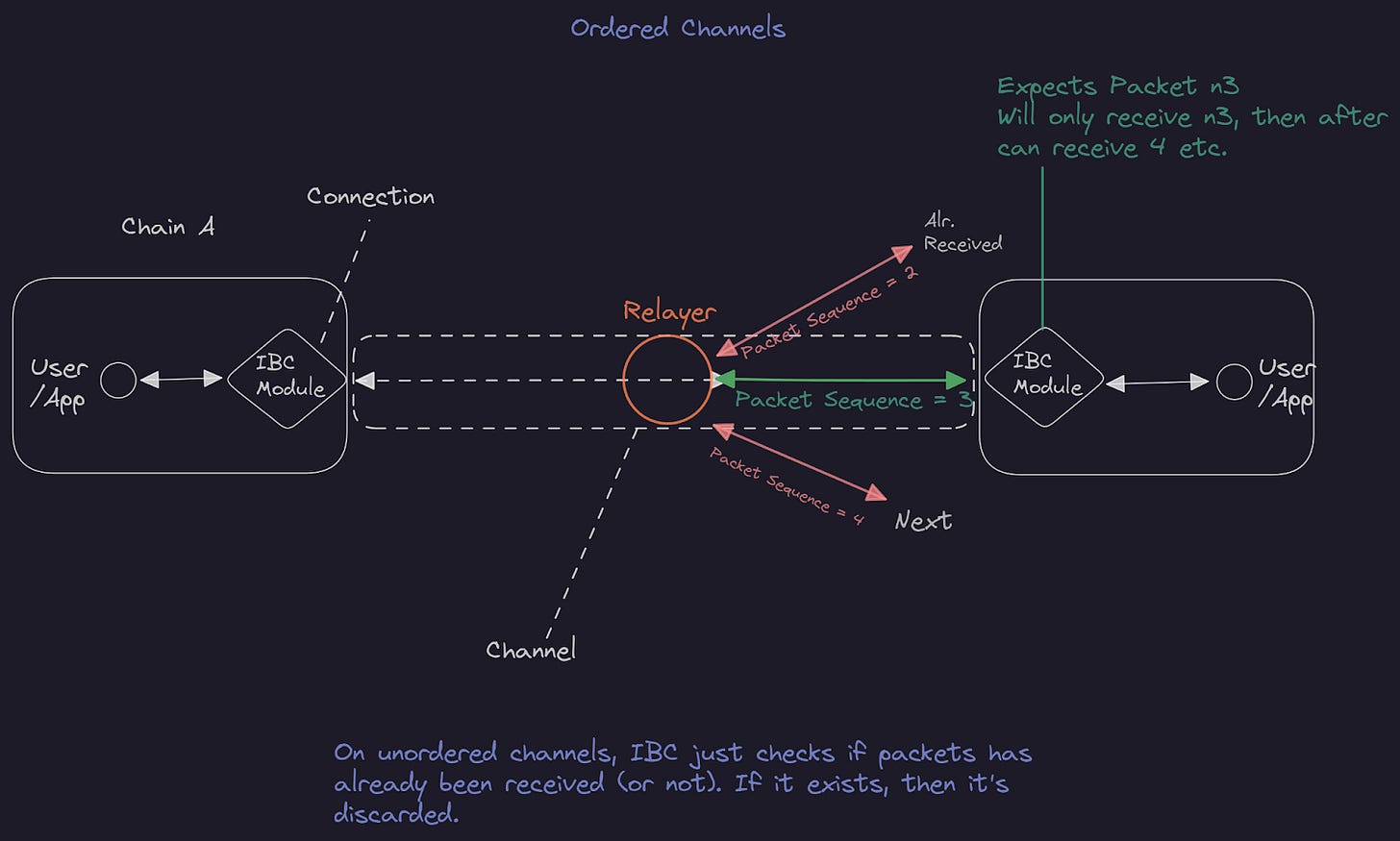

IBC relayers (such as Hermes) function within IBC to facilitate transfers of assets and data between different blockchain networks that have an open connection/channel with each other. They can profit by exploiting price differences between blockchains and by taking advantage of arbitrage opportunities between different DeFi protocols. Relayers can also extract profit by exploiting front-running opportunities. When sending packets through IBC channels, there are two main types of channels: ordered and unordered. Ordered channels ensure packets are delivered in the order they were sent, while unordered channels deliver packets in any order. Packet sequences enforce channel ordering. If a packet with an earlier sequence needs to be received before the current one, the current one is rejected until the correct packet is submitted. The IBC module guarantees that a packet will not be received on the destination chain if the timeout has passed.
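The ordering and timeout behaviour described above can be sketched as a small receive-side check. This is a simplified model, not the IBC module itself: the `Packet` and `Channel` classes and their field names are hypothetical, timeouts are modelled only as a block height (real IBC also supports timestamps), and proofs, acknowledgements and channel handshakes are left out entirely.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Packet:
    sequence: int
    timeout_height: int  # packet is invalid once the destination reaches this height
    data: str


@dataclass
class Channel:
    ordered: bool
    next_sequence_recv: int = 1                 # only used by ordered channels
    receipts: set = field(default_factory=set)  # only used by unordered channels

    def recv_packet(self, packet: Packet, current_height: int) -> bool:
        """Return True if the packet is accepted on the destination chain."""
        if current_height >= packet.timeout_height:
            return False  # timed out: must never be received
        if self.ordered:
            if packet.sequence != self.next_sequence_recv:
                return False  # an earlier packet has to arrive first
            self.next_sequence_recv += 1
            return True
        if packet.sequence in self.receipts:
            return False  # already received once, reject the replay
        self.receipts.add(packet.sequence)
        return True


first = Packet(sequence=1, timeout_height=100, data="transfer A")
second = Packet(sequence=2, timeout_height=100, data="transfer B")

ordered = Channel(ordered=True)
print(ordered.recv_packet(second, current_height=10))  # rejected, sequence 1 is still pending
print(ordered.recv_packet(first, current_height=10))   # accepted

unordered = Channel(ordered=False)
print(unordered.recv_packet(second, current_height=10))  # accepted in any order
```

The model makes the relayer's leverage visible: on an ordered channel, whoever delays the packet with sequence 1 also delays everything behind it, while on an unordered channel each packet can be relayed (and priced) independently.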

So what can an entity that runs DeFi strategies and a relayer do? They can buy an asset on a protocol where it's underpriced and sell it on the protocol where it's overpriced, thus capturing the difference as profit. IBC relayers can also extract MEV by exploiting front-running opportunities, in the case that they have information about cross-domain swap taking place (for example in a cross-chain application such as Mars/Squid/Catalyst).

Something that might also be possible is the idea of Denial-of-Service (DoS) attacks on relayer packets, which could cause them to timeout (as described earlier). This could be used to front run a particularly juicy MEV opportunity cross-chain, since if we consider the ordering methods – you would be able to send your packet after a timeout.

Unordered channels would currently allow for easier incentivization of relayers, since you could have a separate bidding market for the inclusion of packets by relayers, as it would no longer be a first-come-first-serve setup. Because relaying then becomes more economically viable, this could lead to a larger and more decentralized relayer set (instead of them being essentially multi-sigs at this point). This would also allow for MEV to be extracted, and possibly be redistributed either to relayers or to the protocols they connect. However, a downside of this is that it could heighten congestion and the cost of participating in cross-chain transactions.

If you’re interested in looking at current relayer (w/ IBC) incentivization, you should take a look at the ICS-29 specification. The folks over at Polymer are also working on a few new specifications for relayer incentivization.

Another possible extraction opportunity for relayers is to synchronize blockspace between chains (and taking on inventory risk). Essentially acting as an off-chain social contract-enabled liquidity layer.

MEV Marketplaces

As we mentioned in part one of the saga, either the protocol itself has a built-in searcher-builder-validator/proposer (SBP) mechanic (preferably w/ PBS), or a third-party solution will pop up to provide that service. These third-party services also have the ability to potentially allow for off-chain social contracts between sequencers (rollups) that could allow for certain atomic and cross-rollup transactions. Thus, it is important to mention some of the projects that provide said marketplaces, and how they will fit into the modular stack as a whole. There are also some interesting integrations that could happen, for example through the DA layer. We’ll discuss this and more in this section as we explore the MEV marketplaces that will most likely play an essential role in how MEV is distributed, both now and in the future.

One thing that’s exciting about MEV marketplaces, beyond setting up a proper MEV supply chain either in-protocol or off-chain, is the ability they have to facilitate social contracts between actors. Let’s think of an example of how MEV marketplaces could be used to facilitate various activities across domains in the modular stack.

You could negotiate social contracts (mayhap eventually on-chain enforceable ones) via MEV marketplaces to put in place multi-loop party auctions. Essentially, two sequencer sets (proposers) negotiate a market between them to enable cross-chain atomic swaps.

Here the visibility of what the swap entails is of utmost importance, to avoid mishaps.

You could also use these MEV marketplaces to essentially get cross-chain synchrony guarantees between rollups that share a common security layer (such as a settlement or DA layer), wherein the marketplace actors would batch transactions into the same block of the underlying layer to facilitate atomic transactions. This requires the block windows of said rollup sequencers to line up, unless you put in a time-lock to batch them at the same time (but this could possibly interfere with timeout settings).

You also have folks such as Mekatek, who are working on implementing a builder module for Cosmos SDK chains which would enable specific chains to express their own preferences regarding MEV, including how it should be extracted and distributed. If you’re interested in how this works, I highly recommend you reach out to their team. The same goes for Skip, who have just recently implemented their ProtoRev module for Osmosis.

Teams working on MEV solutions in the modular stack are primarily Mekatek, Bloxroute, Skip and Flashbots - in the democratization and marketplace vertical. However, there are also a myriad of other MEV projects working on parts of the stack, such as Blink, Manifold, and many others across various different ecosystems.

Modular MEV

Even though MEV in theory doesn’t change when you split up layers (execution is still in theory the same), it allows for optimization in the different layers to minimize the amount of MEV through things like different block building techniques, threshold encryption and so forth, depending on your application or general rollup. This is primarily because in every single blockchain a leader is usually selected to propose a block, which means that the extractable value, or priority fees, end up with that specific leader. One way to alleviate some of this is via the setup described in the incentivization part, although, in theory, this only masks the centralization. The thing about execution layers that are decoupled (which is all rollups, unless you use some “based”-esque sequencing and mempool model) is that they all siphon the MEV to themselves exclusively, while the leakage of MEV (beyond priority fees) is self-contained to the execution layer and very little slips downwards. The base consensus/DA layer can’t actively do anything to stop the MEV from being extracted at the execution layer, unless we’re talking about data withholding, but with how the consensus is set up this would result in slashing.

For a sovereign rollup that self-settles on the rollup side via client-side validity or fraud proofs (while handling consensus, state transitions and fork-choice rules itself), you would likely see exactly the same leadership setup that you would see in a smart contract rollup. As with their counterparts, some of this MEV drips down to the DA layer via fees, but MEV is still self-contained to the searcher-builder-sequencer (validator) supply chain on the rollup itself. This is especially pertinent with deterministic consensus algorithms, which are much faster to reach finality on the rollup side. The only way you’d in theory get MEV to drip down to the DA layer beyond DA fees is if you go with a first-come-first-serve type setup, where you rely on the DA/Consensus layer to decide what the canonical rollup chain is, since its validators would decide the ordering. However, if this is decided by priority fees (such as with Celestia), you essentially just get a MEV-boost type setup where fees are king, unless you’re able to bypass this in terms of geographical latency and private order flow.

Now, consider the case where an execution rollup utilizes a settlement layer (such as Astria, Dymension, Eclipse) and relies on that settlement layer to post transaction data to the DA layer (and to settle state transitions and dispute resolution).

The setup depends entirely on the sequencing/block production rules and to whom you outsource them (if at all). If you have a leadership election setup on the execution rollup, the MEV gets extracted there. If you have a first-come-first-serve setup, you’re dependent on the ordering of the transactions on the underlying layer.

However, you could also utilize the settlement layer for leader election (ala the Based setup, where you outsource sequencing to the “L1”), or depend on it for ordering if you utilize first-come-first-serve, while the settlement layer uses a leadership election setup. At the end of the day, it very much depends on the actual implementations of the rollup in question. Some of these predictions change if you utilize a shared sequencing setup, where the MEV of the execution layers are redistributed to the entire sequencing network. If that is the case, you might see the more popular execution layers push for a weighted redistribution, depending on how much “value” they provide in fees and MEV. Since if you don’t, they might view sharing their MEV as a negative, once they’ve reached maturity beyond the bootstrapping phase.
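To make the redistribution argument above concrete, here is a minimal sketch that compares an equal split of pooled MEV with a split weighted by each rollup's contribution. The rollup names, the figures and the two helper functions are all hypothetical; an actual shared sequencer network would define its own accounting.

```python
from typing import Dict


def split_equally(total_mev: float, contributions: Dict[str, float]) -> Dict[str, float]:
    """Every participating rollup gets the same share, regardless of contribution."""
    if not contributions:
        raise ValueError("need at least one rollup")
    share = total_mev / len(contributions)
    return {rollup: share for rollup in contributions}


def split_by_contribution(total_mev: float, contributions: Dict[str, float]) -> Dict[str, float]:
    """Each rollup's share is proportional to the fees and MEV it brought in."""
    total = sum(contributions.values())
    if total <= 0:
        raise ValueError("total contribution must be positive")
    return {rollup: total_mev * value / total for rollup, value in contributions.items()}


# Hypothetical fees + MEV generated by each rollup in one epoch
contributions = {"rollup_a": 80.0, "rollup_b": 15.0, "rollup_c": 5.0}
pooled = sum(contributions.values())

print(split_equally(pooled, contributions))
print(split_by_contribution(pooled, contributions))
```

Under the equal split, the rollup that generated most of the value receives far less than it contributed, which is the situation in which a mature execution layer would be expected to push for weighting.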

The total MEV of these shared layers is in general (beyond fees) the total amount of inefficiencies in the system caused by non-coordination between actors in the supply chain (liquidations, arbitrage, front-running, sandwiching). This, as we’ve seen on Ethereum rollups already, is a lot. In a modular world, our thesis is that the total MEV will grow even further due to more opportunities presented by breaking down siloed monolithic environments into a diverse set of modular ones. We imagine a world where MEV is likely going to be extracted entirely on the execution side (apart from fees), but we also see great value in shared layers coordinating to bring value to all the layers in a modular system (such as SUAVE-like implementations, or a restaking-esque solution, like the one presented by Espresso). These are primarily implementation details, but you could certainly imagine a world where you would pay “homage” to the layers you derive security from, beyond the baseline fees you pay for their services, depending on the maturity of your execution environment.

The main reason why this separation of MEV to the execution layers is good is that the cost of MEV extraction is siloed to execution environments, which also means that supply chain disruption can be contained much more easily. This also means that the cost of MEV won’t affect the base layers with regard to congestion, and allows for much more specialized layers that are optimized for specific purposes (the same reason why we want PBS). However, if the MEV “problem” becomes too great on a specific execution rollup, it might lead to even more layers getting added to solve the congestion and disruption issues that it brings. It’s really an equilibrium that is extremely hard to find, especially since if you just split up layers continuously, the vast majority of financial activity is still going to happen on the execution layer’s side (since it’s the leader and decides on ordering+finality), which is where the MEV lies (whether that's benign or not). This is why we’re most likely going to see PBS and SBV/MEV supply chains either being built into the protocols themselves from the get-go, or move off-chain (such as with MEV-boost). This is to solve the PGA (priority gas auction) issues that come with non-separation.

Less so modular, but rather app-specific, is the fact that various MEV minimization techniques work better when they’re contained and tailored to a specific application – such as with Shutter network (shielded mempool), or threshold encryption with Osmosis. This runs back to our application-specific thesis, in which our argument is that app-chains allow for better optimizations, both in resource pricing and tech choices, but also MEV minimization techniques – although at the cost of composability. However, things like Polymer (and IBC), alongside ICQs and IAs are set to change this.

Although we argue that the security budget is important for decentralized networks, it all ties back to where you want the pain to land. If you value security budget, and you're willing to sacrifice end-user execution for MEV to be extractable to increase security, you would aim to find an equilibrium. If you, on the other hand, value MEV minimization more and are willing to have the pain of issuance land on token holders, a MEV minimization technique makes more sense.

What a shared security layer provides, is also the ability to lower the amount of issuance, since you're able to inherit security partly. This is also why we believe most modular chains will eventually revert to a few select, and highly optimized and secure DA/Consensus layers. It is at least a good starting point, for any new chains. And you could argue that the cost of leaving this shared layer is higher than what you stand to gain. This is in the same vein as why a shared sequencer network might make sense for execution rollups.

For an application-specific rollup with a controllable segregated execution environment, there are also some unique methods for providing better transaction execution. We have of course already covered some of these, such as threshold encryption, time lock puzzles, but another way is to change sequencing rules. What you could do is to require that the sequencer(s) (the builder and proposer) are constrained to a sequencing rule (a uniqueness to rollups, building on top of existing layers) that requires them to only execute swaps in the same direction if the price is better than what was available at the top of the block, or if there are no swaps in the opposite direction following their trade. This means that if someone wants to make a trade in a certain direction, they must ensure that the price is better than the price at the top of the block or that their trade won't be used to move the price for someone else. By following this rule, users can be assured that they are receiving a fair price and that their trades are not being used to benefit others.
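One possible reading of the sequencing rule above can be written as a check over a proposed ordering of swaps. The sketch below is an interpretation under stated assumptions, not the rule as specified by any particular rollup: it assumes a fee-less constant-product pool, and the `Swap` type and function names are hypothetical. A swap that pushes the price further away from the top-of-block price is only accepted if no swap in the opposite direction comes after it.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Swap:
    side: str         # "buy" or "sell" of the base asset
    amount_in: float  # quote asset in for a buy, base asset in for a sell


def apply_swap(base: float, quote: float, swap: Swap) -> Tuple[float, float]:
    """Fee-less constant-product update (base * quote stays constant)."""
    k = base * quote
    if swap.side == "buy":            # quote in, base out, price goes up
        new_quote = quote + swap.amount_in
        return k / new_quote, new_quote
    new_base = base + swap.amount_in  # base in, quote out, price goes down
    return new_base, k / new_base


def ordering_is_valid(base: float, quote: float, swaps: List[Swap]) -> bool:
    top_of_block_price = quote / base
    for i, swap in enumerate(swaps):
        price = quote / base
        later = swaps[i + 1:]
        # A buy at a price already above top-of-block is only fine if no sell follows.
        if swap.side == "buy" and price > top_of_block_price:
            if any(s.side == "sell" for s in later):
                return False
        # Symmetric condition for sells below the top-of-block price.
        if swap.side == "sell" and price < top_of_block_price:
            if any(s.side == "buy" for s in later):
                return False
        base, quote = apply_swap(base, quote, swap)
    return True


attacker_buy, user_buy, attacker_sell = Swap("buy", 10.0), Swap("buy", 10.0), Swap("sell", 5.0)
print(ordering_is_valid(100.0, 100.0, [attacker_buy, user_buy, attacker_sell]))  # sandwich ordering
print(ordering_is_valid(100.0, 100.0, [attacker_buy, attacker_sell, user_buy]))  # sell placed before the user
```

Under this reading, the classic sandwich ordering (buy, victim buy, sell) is rejected because the victim would buy at a worse-than-top-of-block price while a sell is still pending, whereas placing the sell before the second buy passes the check.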

While this sequencing rule ensures that users receive a fair price, it's important to note that there are still less favourable orderings that users can pay to avoid. Additionally, being in the long tail of same-direction swaps at the end isn't desirable either. This rule also still leaves room for more sophisticated multi-block TWAP MEV strategies. When it comes to more complicated orders, like arbitrages touching multiple pools, the sequencing rule may not be sufficient to guarantee a fair price. If you’re interested in reading more about this particular setup, there’s a great post on the Flashbots forum that goes more in-depth.

Cross-domain MEV

The best way to describe cross-domain/chain MEV is that transactions on one chain cause effects on another chain, and the value from those effects is capturable on that other chain. The MEV is thus capturable on chain B, even though the activity or transaction originated from chain A.

For transactions on separate chains, there’s obviously MEV associated with them on the individual chains they inhabit. Now, couple that with a bridge swap taking place on a cross-chain AMM/DEX, and the MEV problem suddenly becomes a cross-domain issue. If someone, for example, sees a swap/transaction happening on Chain A which indicates that a transaction to a certain pool on Chain B will likely follow, a searcher with liquidity on Chain B has as much time as the bridging latency to essentially front run it (or similar).

There are various ways to hide some of this public data, which we’ll get into in a bit. However, essentially, you’re still being “MEV’ed” in the same ways as you were previously, depending on the setup of the particular chains in question. This is especially true in synchronous setups, while asynchronous setups (such as Catalyst for example) might yield better protection against hostile actors (that could inhabit a TTP state chain). State synchronous setups in general also provide pretty bad pricing for pools; however, state proofs (which several companies are working on) are definitely set to help alleviate some of this (but at the cost of some overhead or even centralization). As soon as you’re handling transactions on numerous different chains at the same time, MEV opportunities arise in a cross-domain fashion. Pricing here is especially important, and projects like Lagrange, Axiom and Herodotus are probably going to be able to provide much clearer (and trust-minimized) pricing for cross-domain on-chain activities. However, what should still be taken into account is the manipulation of price feeds. For more information on how protection could possibly look here, I highly recommend looking at Consolidated Price Feeds.

Another key factor of cross-chain applications that also affects cross-domain MEV is the fact that on applications that have “multichain” pools, as in – I can deposit funds on chain X, while borrowing on chain Y. You essentially introduce differences in pool sizes across the various pools and chains. This makes life quite a bit harder, especially if liquidity of a certain asset is much lower on a specific chain, but still supposed to hold the same price points across the different chains. You need extremely strong consolidated price feeds models, or state proofs showing prices from the chain with the strongest liquidity – to avoid problems that come with price exploitation on lower liquidity chains. This also means you, in most cases, on cross-chain lending protocols will have to decrease the Loan-to-Value (LTV) of the cross-chain pools, unless you’re absolutely certain that you’re getting trust-minimized and proper price feeds from the strongest liquidity chain. This does introduce some extra risk parameters, especially on more complex protocols. In situations where you have an asynchronous cross-chain AMM with the same amount of liquidity in each pool, this becomes less of an issue as long as your liquidity pools are deep enough (and provide proper slippage information). However, you’re still going to be running into cross-domain MEV extraction opportunities on different chains. This, as said, can be lowered by hiding vital information (such as with time lock puzzles, encryption and so on) – however, there’s always going to be some information leaking which could affect your protocol.

What this does mean (at least in the cross-chain AMM example) is that you get fragmented liquidity instances (although connected), which is a lot less capital efficient. This gets worse as the number of supported chains grows, since you’re now required to spread out X amount of liquidity across Y chains. Essentially, arbitrage between the pools is what’s going to synchronize them, but the capital efficiency still remains quite low. The issue is easier to handle if you do just stablecoin pools (such as with Stargate), but if you hardcode assets to $1 and a certain stable loses its peg, then you’re in for a world of trouble (and a lot of MEV). Replicated instances of liquidity become much easier to maintain and handle if your entire ecosystem of connected chains is standardized, such as with IBC and its interchain standard (ICS-20). This can mean moving funds much more easily, and not relying on “wrapped” assets that are incompatible with non-wrapped assets. This can be made more efficient by having off-chain agreements for atomic transactions (but that adds extra trust assumptions), or via a shared DA layer.
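The capital-efficiency cost of spreading liquidity can be shown with a small worked example. The sketch below assumes a fee-less constant-product pool with equal reserves on both sides and an even split of liquidity across chains; the function names and the figures are made up for illustration.

```python
def swap_output(reserve_in: float, reserve_out: float, amount_in: float) -> float:
    """Constant-product output for a fee-less pool (x * y = k)."""
    return reserve_out * amount_in / (reserve_in + amount_in)


def price_impact(total_liquidity: float, num_chains: int, trade_size: float) -> float:
    """Fraction of value lost to slippage when the trade hits one of the split pools."""
    reserve = total_liquidity / num_chains  # each chain holds an equal slice
    received = swap_output(reserve, reserve, trade_size)
    return 1 - received / trade_size


total = 1_000_000.0  # liquidity per side, summed over all chains
trade = 10_000.0

for chains in (1, 2, 5, 10):
    print(chains, round(price_impact(total, chains, trade), 4))
```

With these numbers, the same trade loses about 1% to slippage against a single unified pool and about 9% when the liquidity is split over ten chains, which is the gap that cross-chain arbitrageurs end up closing.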

Now, as we’ve mentioned earlier in the section, the ability to avoid or minimize some of this cross-domain MEV extraction is interesting. However, most current solutions to this are going to add latency to the system. Is this a trade-off the end-user is willing to make? Unless they’re extremely aware of the negative externalities that MEV can bring, most users are probably not going to care. However, in the edge cases of power users that are moving large amounts of funds, this definitely becomes more fascinating. You could utilize ZK-Timelock puzzles, and encryption.

This allows you to send the finalized transaction commitments to pools, and any watcher would be able to challenge operators (the trusted third party) that did not execute the committed to finalized order of transactions, wherein they would lose their bond. The operators could also be run on an SGX machine, for example. However, this would add significant overhead, and possible latency.

Another area of research that is quite interesting, and something that we looked at slightly in the transaction originators section, is that of order flow auction systems. However, let’s try to look at this from the lens of a cross-domain position.

Something that’s potentially appealing to explore is the idea of integrating MEV marketplaces to facilitate private order flow auctions via specific transport layers. This would allow certain actors to take on inventory risks (for a fee), and provide certain privacy guarantees as well as fast, or even atomic, transactions (which are much easier to do with 1-1 assets than with swaps that depend on variable pricing). You could essentially have the transport layers integrate with block builders on connected chains, and as such optimize MEV (and its redistribution).

This should, we hope, be done in a way that’s permissionless, and follow the same ideals that Flashbots and others hold themselves to when it comes to building off-chain MEV marketplaces. The idea of an order flow system is to provide much faster execution (and eventual finality) for cross-domain transactions. This should also tie into incentivization of pivotal actors (such as relayers). Currently, most relayer operators aren’t running them for economic profit, since they operate at a loss for the most part (and many of them are funded by grants and public goods funding from the chain or application’s governance).

This also opens up another cross-domain MEV avenue, which is the access to exclusive blockspace markets via direct access (through integrations) to the block builders (in a modular world, the sequencers). This allows searchers that want to maximize their MEV profits to integrate into cross-domain MEV marketplaces, while still allowing the MEV to primarily land in the hands of the validators of the networks (and as a result the token holders).

However, the negative externality of this is similar to the problem that we already see in singular staking networks, which is the centralization of stake. For example, sequencers (essentially the rollup’s validators) that run sequencers on several other chains are able to commit to extremely valuable cross-domain MEV activities if they’re the leader of two simultaneously proposed blocks. What can help limit some of the externalities of this is shared sequencing setups for rollups, such as the shared sequencer network that Astria is working on, as well as projects like Suave and Espresso. We will cover shared sequencing more in-depth in a later article. One thing that’s also relevant, and I hope we see more research in this area, is the information asymmetry that comes with introducing so many actors into the system, all of which have different latency of information leakage, depending on where they sit in the system flow.

As we move into a modular future, where blockspace becomes more and more abundant, there’s now less “worry” about being able to be included in a block – and instead much of the MEV (such as cross-domain order flow) could actually be extracted via transaction originators (as presented earlier) alongside integrations with the block builders (and proposers) themselves. Something that’s also an apparent “issue” is that of rollup sequencer networks outbidding/spamming blockspace on the underlying DA layer, to extract valuable MEV opportunities in the stack (we’ll cover this in more nuance in the next section). This obviously becomes increasingly expensive as blockspace grows on the underlying layer (such as with DAS), and might only be possible in short stints (if at all).

One thing we also mentioned earlier is that there are way more opportunities for well capitalized MEV extractors in a modular world, since liquidity is now way more fragmented (and bridging often not atomic, unless specific criteria are met on the shared DA layer, or via social contracts). This could see large (in terms of capital) actors become increasingly wealthy, and drive centralization. If you want a more technical deep-dive on various aspects of cross-domain MEV, I will also recommend the cross-domain MEV paper by members of Flashbots, the EF, Gauntlet and Socket.

Just like with Ethereum’s crLists, we hope that cross-domain sequencing and blockspace markets take a similar approach to censorship resistance, and therefore integrate models like crLists (which force builders to include a transaction inside a block). This is also what allows block builders to provide a soft guarantee that a user’s transaction will eventually be finalized. Where this could become an issue is for block builders located in jurisdictions that don’t want certain actors to commit transactions (such as with OFAC), which could lead them to block certain transactions (and not adhere to crLists).
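To make the crList idea more concrete, here is a minimal Python sketch of how a block could be checked against a list of transactions that must be included. It is an illustration only: the `Tx` type, the `missing_crlist_txs` function and the "omission is excused only when the block has no room left" rule are simplifying assumptions of ours, not the actual Ethereum proposal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    tx_hash: str
    gas: int


def missing_crlist_txs(block_txs, cr_list, gas_limit):
    """Return crList transactions the builder left out without an excuse.

    Assumed rule (hypothetical): leaving a crList transaction out is only
    excused when the remaining gas in the block could not have fit it.
    """
    included = {tx.tx_hash for tx in block_txs}
    gas_left = gas_limit - sum(tx.gas for tx in block_txs)
    return [
        tx for tx in cr_list
        if tx.tx_hash not in included and tx.gas <= gas_left
    ]


# Example: the builder included "a", skipped "b" (which would have fit)
# and skipped "c" (which would not have fit in the remaining gas).
a, b, c = Tx("a", 50), Tx("b", 30), Tx("c", 80)
violations = missing_crlist_txs([a], [a, b, c], gas_limit=100)
```

A block with a non-empty `violations` list would be the one a proposer (or the protocol) rejects under this simplified rule.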

Another quintessential aspect of cross-domain MEV is benign arbitrage, which takes advantage of price differences across DEXs on different chains that carry the same assets. We won’t go into too much detail on this, as it is a well-established topic. If you’re interested in seeing cross-domain MEV happening in real-time, I highly recommend you check out the Odos tracker.

There is also an interesting implementation of cross-domain consensus in the works by the team over at Anoma. If you want to read more about their specific solution, you can check out their Paxos Consensus paper.

DA MEV

Another component of the “modular stack” is the DA/Consensus layer. It is the lego block on top of which other components are built. A DA layer is usually seen as a lazy blockchain that does not differentiate between the kinds of data posted to it. A rollup posting data competes with a user who wants to inscribe an NFT that needs to be stored on-chain (Ordinals). This is a very pure marketplace, where you pay for bytes made available and preference is expressed through willingness to pay a certain price. Those that pay the most are included, as both miners and validators have to maximize revenue due to the economics of their business. While this seems trivial and most readers will know this, it is important to keep in mind that pricing determines whether your data is included in a block, especially if being included or not affects the leadership rule of various parts of the stack. This is what gives the DA layer pricing power. Put the other way around, others will be willing to pay up to make sure they are included and get to decide the ordering. The amount they are willing to pay depends on the MEV that can be extracted (plus the tx fees), and consequently extractable MEV will trickle down to the DA layer.
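The “those that pay the most are included” logic can be sketched as a greedy selection by fee per byte. This is a toy model under our own assumptions: the `Blob` type, the `select_blobs` function and the numbers are hypothetical, and real DA layers have their own fee rules and block-packing constraints.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Blob:
    sender: str
    size_bytes: int  # must be > 0
    fee: int


def select_blobs(pending, max_block_bytes):
    """Greedily fill a block with the blobs paying the most per byte.

    The DA layer does not care what the data is; only price matters here.
    """
    chosen, used = [], 0
    by_price = sorted(pending, key=lambda b: b.fee / b.size_bytes, reverse=True)
    for blob in by_price:
        if used + blob.size_bytes <= max_block_bytes:
            chosen.append(blob)
            used += blob.size_bytes
    return chosen


pending = [
    Blob("rollup_a", size_bytes=400, fee=800),     # 2 per byte
    Blob("inscription", size_bytes=300, fee=900),  # 3 per byte
    Blob("rollup_b", size_bytes=500, fee=500),     # 1 per byte
]
block = select_blobs(pending, max_block_bytes=800)
```

In this example the inscription outbids both rollups, and `rollup_b` is priced out of the block entirely, which is exactly the situation where inclusion starts to matter for anything that depends on ordering or leadership.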

There are a few setups in which the DA layer has pricing power to extract more value from rollups. First, you could have (sovereign) rollups that rely on the DA layer for their sequencing. Here it becomes critical to get the rollup data onto the DA layer before others do, as it directly impacts who dictates the state (e.g. the ordering of transactions) on the rollup. In such a scenario, we would envision most MEV trickling down to the DA layer. The second scenario is a rollup with a settlement layer. Here the MEV will mostly be captured on the DA layer if the settlement layer relies on posting to the DA layer for leadership election. The third scenario is still a somewhat more theoretical one, and relates to potential decentralized sequencer setups. You could envision a decentralized sequencer setup in which proposers at the decentralized sequencer level compete to be able to post to the DA layer (or price out the competition to do so). The extent to which this matters will be a function of various parameters in the leadership election used. For example, if the timeout parameter, as described in our application-specific chain thesis, is relatively short, it is key for proposers at the decentralized sequencer level to post data as quickly as possible. In such a world, “validators” of the decentralized sequencer set could compete to act as leader, outbidding each other for blockspace on the DA layer up to the point at which it is no longer economically desirable to do so.
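The “outbidding each other up to the point at which it is no longer economically desirable” dynamic can be illustrated with a small ascending-auction sketch. Everything here is an assumption made for illustration: the function names, the idea that each proposer’s ceiling is expected MEV plus tx fees minus other costs, and the simple rule that the winner pays just above the runner-up’s ceiling.

```python
def max_rational_bid(expected_mev, tx_fees, other_costs):
    """Highest DA fee a proposer can pay before posting becomes unprofitable."""
    return max(0, expected_mev + tx_fees - other_costs)


def bidding_outcome(ceilings, increment=1):
    """Simulate proposers outbidding each other for DA blockspace.

    ceilings maps a proposer name to its maximum rational bid. The proposer
    with the highest ceiling wins and pays just above the runner-up's
    ceiling (capped at its own ceiling).
    """
    if not ceilings:
        raise ValueError("no bidders")
    ranked = sorted(ceilings.items(), key=lambda kv: kv[1], reverse=True)
    winner, top = ranked[0]
    if len(ranked) == 1:
        return winner, 0
    runner_up = ranked[1][1]
    return winner, min(top, runner_up + increment)


ceilings = {
    "seq_a": max_rational_bid(expected_mev=90, tx_fees=20, other_costs=10),
    "seq_b": max_rational_bid(expected_mev=60, tx_fees=20, other_costs=10),
    "seq_c": max_rational_bid(expected_mev=30, tx_fees=20, other_costs=10),
}
winner, da_fee_paid = bidding_outcome(ceilings)
```

In this toy run the winner keeps only the gap between its own ceiling and the runner-up’s; the rest is paid to the DA layer, which is the sense in which MEV trickles down.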

A way for the rollups on top of a DA layer to maintain censorship resistance is requiring sequencers to stake a certain amount on an L2 and vouch for an L1 address with sequencing rights. Only transactions from addresses with sequencing rights will be processed, and invalid transactions will result in the sequencer's stake being slashed.
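As a rough sketch of this stake-and-vouch mechanism, the following Python models a registry of L1 addresses with sequencing rights. The `SequencerRegistry` class, its method names and the “slash the full stake and revoke rights” rule are hypothetical simplifications, not a description of any specific rollup.

```python
from dataclasses import dataclass, field


@dataclass
class SequencerRegistry:
    min_stake: int
    # L1 address -> amount staked on the L2 to vouch for that address
    stakes: dict = field(default_factory=dict)

    def register(self, l1_address, stake):
        if stake < self.min_stake:
            raise ValueError("stake below minimum")
        self.stakes[l1_address] = stake

    def has_sequencing_rights(self, l1_address):
        return l1_address in self.stakes

    def process_batch(self, l1_address, batch_is_valid):
        """Decide what happens to a batch posted to the DA layer."""
        if not self.has_sequencing_rights(l1_address):
            return "ignored"  # data from unregistered addresses is skipped
        if not batch_is_valid:
            del self.stakes[l1_address]  # assumed: full slash, rights revoked
            return "slashed"
        return "accepted"


registry = SequencerRegistry(min_stake=100)
registry.register("0xabc", 150)
results = [
    registry.process_batch("0xdef", batch_is_valid=True),   # not registered
    registry.process_batch("0xabc", batch_is_valid=True),
    registry.process_batch("0xabc", batch_is_valid=False),
]
```

After the invalid batch, `0xabc` no longer has sequencing rights, so anything it posts afterwards would simply be ignored by rollup nodes in this model.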

Another way to view this is that in “based” sequencing (using the DA layer directly for sequencing) the DA layer has pricing power to extract MEV.

An important question here is whether it’s a good or a bad thing for MEV to be extractable by the DA layer. As in our first piece, you basically have two points of view. One is the security budget. If MEV is extractable at the DA layer, the DA layer’s blockspace becomes more valuable. This makes the token, which gives you the right to sell future blockspace, more valuable. Consequently, the value at stake is larger and the security budget of the chain grows. The counterargument is that if MEV is extractable at the DA layer, the price variation caused by MEV on one rollup will impact all others interacting with the DA layer. This would be worse for protocols using the DA layer, as pricing becomes less consistent and reliable. It is likely that this will end up in a situation without a clear optimum, and we are mostly curious to see how it plays out with the various shapes and forms of modular designs coming out in the next few years.

As we presented earlier, if the leadership election and MEV extraction happen on the execution layer itself, most (if not all, apart from DA fees) of the MEV will be extracted on the rollup.

Future Discussion

This section serves as an appetizer for some interesting thoughts and research that deserve more attention, and for possible implementations. It’s less coherent, and more a gathering of things we find intriguing with regard to MEV in the modular stack.

MEV marketplace integrations into modular stack through DA block submission and sequencer APIs for 3rd party market swaps - PFOF

Mempool encryption by Jon Charbonneau and MEV findings on Osmosis (using threshold encryption) by Mekatek

Restaking for validators to engage in block sequencing on L2s. Is it worth it from a slashing perspective? Is there enough yield for validators to run more runtimes? This is the same reason why the Cosmos Hub’s current implementation of ICS might not be worth it from an overhead perspective, especially if the supported chain has low activity.

Effects of latency on MEV. We’ve already covered this slightly with regard to the first-come-first-serve mechanism (and its effect on Arbitrum), as well as the current and past geographical centralization of the MEV supply chain. We would be interested in seeing how this looks in a more “modular” stack.

As has probably become clear over the series, you’re making trade-offs at every level, and it’s really up to the developers of rollups to decide which trade-offs to make. We hope that this article has helped somewhat with identifying which trade-offs are important to keep in mind with regard to MEV, security budgets and decentralization.

If you’re building in the modular space, and interested in discussing parts of the article in more detail, feel free to reach out to us on Twitter – we’re here to help you make the design decisions that will be best for your end-users.

Thanks to Pim Swart and Mathijs van Esch for contributing to parts of the article, as well as to the many reviewers, such as Mekatek, Josh Bowen etc. Also, a big shout-out to the many folks that I got to discuss MEV with over the course of the last few months, particularly in Denver.

Next up in the modular saga, we’re exploring shared sequencing, and all the derivatives of this that come with shared-layers. We’ll also look at some of the effects this can have on MEV, especially in a shared sequencing world, as well as on SUAVE-like setups.