Horatius At The Bridge

A Comprehensive Overview of Security and Trust Assumptions in Blockchain Bridges

A well-known legend of early Rome describes Horatius Cocles almost single-handedly defending a bridge against Etruscan aggressors.

Over the last couple of months, we have seen token bridges targeted by increasingly elaborate exploits, which has prompted several people to question the actual security of bridges. As such, we would like to present a comprehensive overview of security and trust assumptions in blockchain bridges, covering most messaging protocols, bridges and interoperability projects. Furthermore, we will also take a look at the economics of cross-chain messaging protocols and bridging, since these are crucial whenever the protocol's token grants governance control over it.

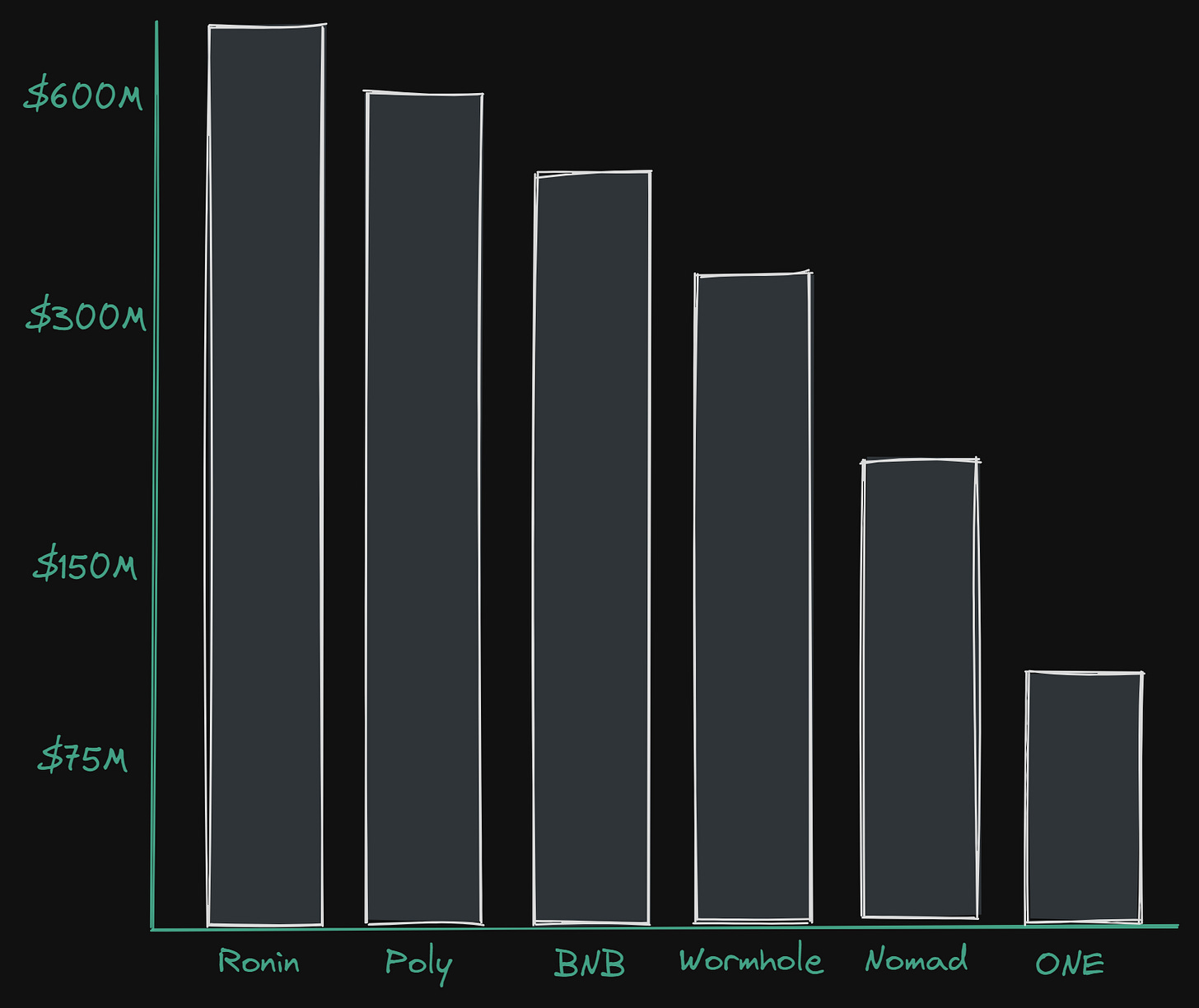

First off, let’s establish just how bad the problem has become in regard to exploits happening on bridge-related protocols.

As can clearly be seen, bridge exploits have become a huge issue as the number of chains in existence grows. This is only set to become a more systemic risk unless we prioritise security, and we as users need to be at the forefront of holding protocols responsible - it’s our funds at the end of the day. This is also why we are writing this article: to focus on trust assumptions and security in the interoperability space.

Likewise, as the industry becomes increasingly multichain, app-chain and modularly focused, we are also seeing a large influx of new blockchains and layers. To accommodate users and liquidity, interoperability is needed. As more and more blockchains build out their own ecosystems, there's a demand for liquidity to move from one chain to another, which is why bridges, and token bridges in particular, have become so popular. However, token transfers are not the only use case of bridges. General messaging protocols, IBC for example, enable a myriad of use cases through their messaging systems and standards.

An excellent overview of how many assets are bridged across various chains can be seen here (shout-out to CryptoFlows.info, whose creators are also behind other great sites such as L2Fees and CryptoFees):

This clearly shows the demand for bridges and highlights why it’s so vital that we push for security in cross-chain messaging protocols and bridges: they handle tremendous amounts of value, both in terms of volume and in terms of assets held in custody, which in many cases is controlled by multisig smart contracts.

Most bridge applications and cross-chain messaging protocols rely on a range of actors and actions that contribute to their efficacy. The primary ones are listed below, although the exact set depends on the particular bridge/messaging protocol in question:

Fraud Watcher/Monitoring: State monitors such as light clients, validators or oracles.

Relayers: Off-chain actors that take care of message passing, carrying information from the source chain to the destination chain. A relayer is usually just a messenger, not a consensus participant.

Consensus: Agreement between the actors monitoring the chains. This could be in the form of a trusted third-party valset, such as with Axelar, an off-chain network of consensus nodes, or even a multi-sig.

Signatures: Bridging actors cryptographically sign messages. This could be validators producing signatures, such as with IBC, or a ZKP (such as Plonky2, used by Polymer) that attests to the validator signatures from the source chain.

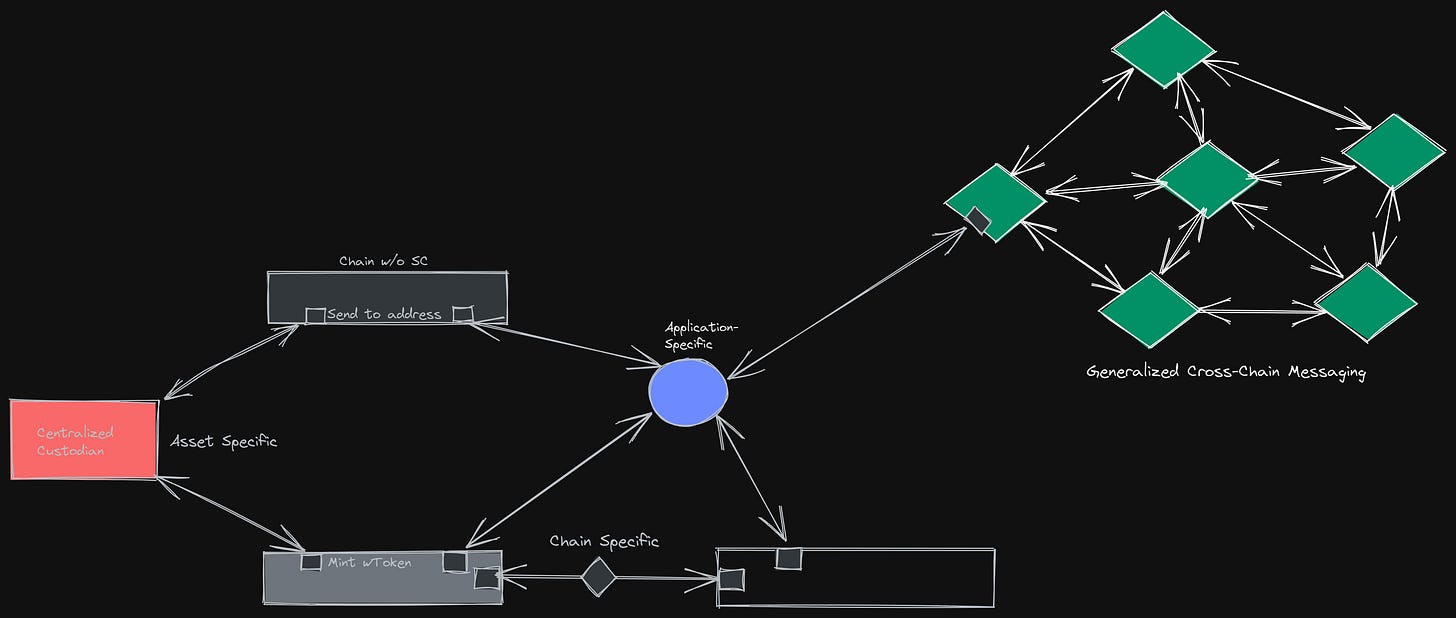

You can then categorise these protocols into four major types:

Custodial Bridges (often asset-specific, but you could even say exchanges are custodial bridges): These provide wrapped assets that are collateralized in a custodial (through a third party) or non-custodial (smart contract) way. An example of this type of protocol is wBTC, where a centralised custodian like BitGo holds the BTC and an equivalent ERC-20 token (wBTC) is minted on Ethereum. We will get into the trust assumptions and risks of this type of solution later, but some of them should be quite clear from this explanation alone.

Multisig Chain-specific: These are usually two-way bridges between two chains, such as the Harmony Bridge, Avalanche Bridge and Rainbow Bridge, all of which connect their respective chains to Ethereum via a smart contract on Ethereum. Users typically send an asset to the protocol, where it is held as collateral, and are issued a bridge-wrapped token on the destination chain, backed by the collateral locked in the contract on the source chain. These are often protected by a set number of validators or even just a multi-sig. We've seen how prone to risk these solutions are, namely with the Ronin Bridge and, more recently, the Harmony Bridge.

Application-specific: An application that provides access to several chains but is used only for that particular application, for example THORChain, which also operates its own chain with a separate validator set. The trust assumption here often lies with a single validator set that controls and handles all messages and transactions across the network, which connects to different smart contracts/addresses on various chains.

Generalised Cross-Chain Messaging: Protocols that allow for generalised messaging across chains with set instructions, such as IBC. These are usually dependent on validator sets. With IBC, the trust lies with the validator sets of the two connected chains; with XCM, it lies with the Relay Chain (Polkadot). You could also add messaging protocols such as Polymer and LayerZero, which are both chain-agnostic.

Cross-chain messaging protocols are often used in conjunction with liquidity layers, since they usually only handle communication and standards between chains according to their specifications. A great example of this is Superform, which uses both LayerZero and Socket (as a liquidity layer). You could also view separate Cosmos-based chains as liquidity hubs for IBC.

This next section will serve to cover two of the vital parts of cross-chain protocols, namely the smart contract risks involved and the additional trust assumptions that need to be accounted for.

Smart Contract Risks

One of the first and most obvious security risks to cover is that of smart contracts. Smart contracts are used in most cross-chain bridges that don’t have a native generalised messaging protocol, so it is vitally important that they’re secure. Let’s cover some of the risks that need to be addressed here. The most effective way to avoid these risks is to not use smart contracts at all and instead rely on the security of the chains in question.

Correct Statements for calling contracts

Require statements for functions that rely on predefined conditions being met before execution is possible

Assert conditions to check for things that need to hold true for all function executions, such as balances of bridge liquidity contracts

Revert statements that are triggerable if required conditions aren’t met, such as those previously discussed.
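To make the require/assert/revert distinction concrete, here is a minimal Python sketch of a hypothetical lock-and-mint vault. `BridgeVault`, `Revert` and `require` are illustrative names, not any real bridge's API: `require` models Solidity's input checks that revert the call, while the internal invariant check models `assert`, i.e. wrapped supply must never exceed locked collateral.

```python
class Revert(Exception):
    """Models a Solidity-style revert: the call fails with a reason string."""


def require(condition: bool, reason: str) -> None:
    # Precondition check, analogous to Solidity's `require`.
    if not condition:
        raise Revert(reason)


class BridgeVault:
    """Hypothetical lock-and-mint vault on the source chain (illustration only)."""

    def __init__(self) -> None:
        self.locked: dict[str, int] = {}  # collateral locked per user
        self.total_locked = 0
        self.total_wrapped = 0  # wrapped tokens issued on the destination chain

    def lock(self, user: str, amount: int) -> None:
        require(amount > 0, "amount must be positive")
        self.locked[user] = self.locked.get(user, 0) + amount
        self.total_locked += amount
        self.total_wrapped += amount
        self._check_invariant()

    def unlock(self, user: str, amount: int) -> None:
        # All `require` checks run before any state is modified.
        require(amount > 0, "amount must be positive")
        require(self.locked.get(user, 0) >= amount, "insufficient locked balance")
        self.locked[user] -= amount
        self.total_locked -= amount
        self.total_wrapped -= amount
        self._check_invariant()

    def _check_invariant(self) -> None:
        # Analogous to Solidity's `assert`: this must hold after every call.
        # A failure here signals a bug in the contract, not bad user input.
        assert self.total_wrapped <= self.total_locked, "wrapped supply exceeds collateral"


vault = BridgeVault()
vault.lock("alice", 100)
try:
    vault.unlock("alice", 150)
except Revert as err:
    print(f"reverted: {err}")  # reverted: insufficient locked balance
```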

Formal verification and rigorous testing

Formal verification of contracts is important to ensure the correctness of said smart contracts under set conditions. Does the math work out? Does it work according to the specifications I need it to adhere to?

Testing all functionalities of contracts - for every update or change made.

Test with randomised inputs (fuzzing) to check that security properties hold true, and to surface any inputs that violate them.

There are various testing methods that can be used, such as unit testing, static and dynamic analysis and much more. Get in touch with folks like Runtime Verification if you want to discuss what you can do.
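Randomised testing can be sketched without any framework. The snippet below checks two properties of a hypothetical fee quote (`quote_transfer` is made up for illustration) over thousands of random inputs; in practice you would reach for a dedicated fuzzer, such as Foundry's fuzz tests or Echidna for Solidity, or Hypothesis in Python.

```python
import random


def quote_transfer(amount_in: int, fee_bps: int) -> tuple[int, int]:
    """Hypothetical bridge fee quote: returns (amount_out, fee), fee in basis points."""
    fee = amount_in * fee_bps // 10_000
    return amount_in - fee, fee


def fuzz_quote_transfer(runs: int = 10_000, seed: int = 0) -> int:
    """Check security properties on random inputs; returns the number of cases run."""
    rng = random.Random(seed)  # seeded, so any failing case can be reproduced
    for _ in range(runs):
        amount_in = rng.randint(0, 2**128)
        fee_bps = rng.randint(0, 10_000)
        amount_out, fee = quote_transfer(amount_in, fee_bps)
        # Property 1: value is conserved, nothing is created or lost in rounding.
        assert amount_out + fee == amount_in, (amount_in, fee_bps)
        # Property 2: the user never receives more than they sent, nor a negative amount.
        assert 0 <= amount_out <= amount_in, (amount_in, fee_bps)
    return runs


if __name__ == "__main__":
    print(f"{fuzz_quote_transfer()} random cases passed")
```

Seeding the generator matters more than it looks: a fuzz failure you cannot reproduce is hard to turn into a fix or a regression test.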

Upgradeable vs Non-Upgradeable

Should your contract be immutable and non-upgradeable? This ensures that “malicious actors” can’t change it. However, it limits the ability to innovate: a new contract and a migration to it would be needed, which would have to go through the many checkpoints we’ve discussed previously. Another downside is: what if the contract has flaws that can no longer be addressed, so it simply lives until it gets exploited? On the other hand, the beauty of being non-upgradeable is that a sound contract stays sound, whereas an upgrade made to an upgradeable contract without the proper security measures can introduce faults - as seen before.

However, if you do go with upgradeability, which in most cases is the correct option, there are various ways to minimise trust and maximise security:

Prepare for failure - what upgrades are needed if an exploit takes place, can I enact emergency measures?

Utilise proxy patterns - splitting up state (info) and logic (execution) between separate contracts. This means that you could upgrade the logic of the smart contract (with a new one) without messing with the state itself.
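A minimal Python model of the proxy pattern, assuming a single admin who may upgrade (all class names are hypothetical). The `Proxy` owns the state and forwards calls to whichever logic class it currently points at, roughly the way a Solidity proxy uses `delegatecall`; upgrading swaps the logic without touching the stored balances.

```python
from typing import Any


class LogicV1:
    """Hypothetical logic contract: holds no state, works on the storage it is given."""

    @staticmethod
    def deposit(storage: dict[str, Any], user: str, amount: int) -> None:
        balances = storage.setdefault("balances", {})
        balances[user] = balances.get(user, 0) + amount


class LogicV2(LogicV1):
    """Upgraded logic: adds a deposit cap but keeps V1's storage layout."""

    MAX_DEPOSIT = 1_000

    @staticmethod
    def deposit(storage: dict[str, Any], user: str, amount: int) -> None:
        if amount > LogicV2.MAX_DEPOSIT:
            raise ValueError("deposit exceeds cap")
        LogicV1.deposit(storage, user, amount)


class Proxy:
    """Owns the state and forwards calls to the current logic, like `delegatecall`."""

    def __init__(self, admin: str, logic: type) -> None:
        self.admin = admin
        self.logic = logic
        self.storage: dict[str, Any] = {}

    def upgrade(self, caller: str, new_logic: type) -> None:
        if caller != self.admin:
            raise PermissionError("only the admin can upgrade")
        self.logic = new_logic  # self.storage is left untouched

    def call(self, fn: str, *args: Any) -> Any:
        return getattr(self.logic, fn)(self.storage, *args)


proxy = Proxy(admin="timelock", logic=LogicV1)
proxy.call("deposit", "alice", 5_000)
proxy.upgrade("timelock", LogicV2)
print(proxy.storage["balances"])  # {'alice': 5000}: state survives the upgrade
```

Note that V2 must keep V1's storage layout (here, the `balances` key); changing it during an upgrade is a classic source of proxy bugs.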

Emergency Stops

Have entities with access to an emergency stop function that closes off access to functions within the smart contract. This should only be used in very significant situations, where it is needed to stop an exploit. This does put power in the hands of the few, but it can help safeguard contract functions in the case of an exploit. It is a good idea to implement this alongside the next point.
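An emergency stop can be sketched as a flag that every state-changing entry point checks. In this hypothetical version, a small guardian set can pause quickly, but only a separate governance address can unpause; that split is our own assumption, shown as one way to limit the power handed to the few.

```python
class Paused(Exception):
    """Raised when a call hits a paused bridge."""


class PausableBridge:
    """Hypothetical bridge with an emergency stop (illustration only)."""

    def __init__(self, guardians: set[str], governance: str) -> None:
        self.guardians = guardians    # can only pause
        self.governance = governance  # can unpause, e.g. after a timelocked vote
        self.paused = False
        self.processed: list[str] = []

    def pause(self, caller: str) -> None:
        if caller not in self.guardians:
            raise PermissionError("only a guardian can pause")
        self.paused = True

    def unpause(self, caller: str) -> None:
        if caller != self.governance:
            raise PermissionError("only governance can unpause")
        self.paused = False

    def process_withdrawal(self, message_id: str) -> None:
        # Every state-changing entry point checks the breaker first.
        if self.paused:
            raise Paused("bridge is paused")
        self.processed.append(message_id)
```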

Monitoring (Watchers)

Active monitoring of smart contract functions and execution is extremely important to ensure liveness and correct tracing. You can also utilise various monitoring tools to get alerts on certain triggers or functions which are important to the liveness and safety of the contract.

One way to add to this is to have incentivised watchers (such as with Optimistic Rollups) that are rewarded for catching fraudulent activity and reporting it. However, this needs to be implemented in a way that actually has an effect, and there are more pressing matters than having watchers.

Governance

In the case that control of your smart contracts is decentralised and handed over to the token holders of your protocol, there needs to be certainty in the security of the governance system.

One thing to consider is whether large token holders are able to upgrade the contract and change functions in their favour, which could lead to malicious exploits causing failures and loss of funds - something that has been seen previously.

A good method to consider is Optimistic Approval, which adds timelocks and a veto process to align incentives, limit the power of certain actors and help avoid malicious proposals.

This also ties into using timelocks to prevent smart contracts from executing functions that are integral to the system until a certain amount of time has passed, which can allow emergency functions to step in if needed.
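The timelock-plus-veto idea can be sketched as follows. This is a generic illustration under our own assumptions (a fixed delay and a named veto council), not the specification of any particular Optimistic Approval implementation; `now` is passed in explicitly so the timing is easy to follow.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    action: str
    eta: int  # earliest execution time (e.g. a block timestamp)
    vetoed: bool = False
    executed: bool = False


class TimelockWithVeto:
    """Hypothetical timelock: approved proposals wait `delay` seconds and can be vetoed meanwhile."""

    def __init__(self, delay: int, veto_council: set[str]) -> None:
        self.delay = delay
        self.veto_council = veto_council
        self.proposals: dict[int, Proposal] = {}
        self._next_id = 0

    def queue(self, action: str, now: int) -> int:
        pid = self._next_id
        self._next_id += 1
        self.proposals[pid] = Proposal(action, eta=now + self.delay)
        return pid

    def veto(self, caller: str, pid: int, now: int) -> None:
        if caller not in self.veto_council:
            raise PermissionError("not on the veto council")
        proposal = self.proposals[pid]
        if now >= proposal.eta:
            raise RuntimeError("veto window closed")
        proposal.vetoed = True

    def execute(self, pid: int, now: int) -> str:
        proposal = self.proposals[pid]
        if proposal.vetoed:
            raise RuntimeError("proposal vetoed")
        if proposal.executed:
            raise RuntimeError("already executed")
        if now < proposal.eta:
            raise RuntimeError("timelock not expired")
        proposal.executed = True
        return proposal.action


DAY = 24 * 3600
gov = TimelockWithVeto(delay=2 * DAY, veto_council={"security-council"})
pid = gov.queue("raise mint limit", now=0)
gov.veto("security-council", pid, now=DAY)  # caught during the delay
```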

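TWAP (time-weighted average) voting, where voting power is based on holdings averaged over a period of time rather than a single snapshot, could also be used to make quick governance takeovers harder.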
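As a rough sketch of why time-weighting helps, the hypothetical function below computes a holder's average balance over a voting window from balance checkpoints. Someone who borrows or buys a large position just before the vote ends up with only a sliver of the voting power a snapshot would give them.

```python
def twap_voting_power(checkpoints: list[tuple[int, int]], start: int, end: int) -> float:
    """Time-weighted average balance over [start, end).

    `checkpoints` is a time-sorted list of (timestamp, balance) pairs; each balance
    holds until the next checkpoint. The balance before the first checkpoint is 0.
    """
    if end <= start:
        raise ValueError("empty voting window")
    weighted = 0
    for i, (t, balance) in enumerate(checkpoints):
        t_next = checkpoints[i + 1][0] if i + 1 < len(checkpoints) else end
        # Clip this checkpoint's interval to the voting window.
        lo, hi = max(t, start), min(t_next, end)
        if hi > lo:
            weighted += balance * (hi - lo)
    return weighted / (end - start)


holder = [(0, 1_000)]           # has held 1,000 tokens all along
whale = [(0, 0), (99, 10_000)]  # buys 10,000 tokens one tick before the window closes
print(twap_voting_power(holder, 0, 100))  # 1000.0
print(twap_voting_power(whale, 0, 100))   # 100.0
```

With a simple snapshot at the end of the window, the whale would have ten times the long-term holder's voting power instead of a tenth of it.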

A decentralised governance system can be very useful to allow community members to have a say in the future of the protocol. However, it does add risk and trust assumptions.

Access Controls

Who can call specific contract functions needs to be addressed. Should all EOAs be allowed to call functions? Should functions be public or private? Should some functions only be callable from within the smart contract? Some functions, such as upgrades or minting tokens, should only be accessible to certain actors.

Who’s the owner, and what functions are they able to execute? Does ownership lie solely with a single owner or with a multi-sig? Often, separate roles can access parts of the functionality to limit centralisation. Regardless, you should focus on eliminating single points of failure and minimising trust - minimising, since you can never remove trust entirely.
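Role-based access control is a common way to split these powers. Below is a minimal Python sketch; the `AccessControl` class, `only_role` decorator and role names are hypothetical, loosely inspired by the role-based approach of OpenZeppelin's `AccessControl` contract. Note that even the admin cannot mint without explicitly holding the minter role.

```python
from functools import wraps


class AccessControl:
    """Minimal role-based access control (role names are illustrative)."""

    def __init__(self, admin: str) -> None:
        self.roles: dict[str, set[str]] = {"ADMIN": {admin}}

    def _check(self, caller: str, role: str) -> None:
        if caller not in self.roles.get(role, set()):
            raise PermissionError(f"{caller} lacks role {role}")

    def grant(self, caller: str, role: str, account: str) -> None:
        self._check(caller, "ADMIN")  # only admins hand out roles
        self.roles.setdefault(role, set()).add(account)


def only_role(role: str):
    """Decorator: the wrapped method's first argument after `self` is the caller."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(self, caller, *args, **kwargs):
            self._check(caller, role)
            return fn(self, caller, *args, **kwargs)
        return wrapper
    return decorator


class WrappedToken(AccessControl):
    """Hypothetical bridge-wrapped token where minting is a separate role."""

    def __init__(self, admin: str) -> None:
        super().__init__(admin)
        self.balances: dict[str, int] = {}

    @only_role("MINTER")
    def mint(self, caller: str, to: str, amount: int) -> None:
        self.balances[to] = self.balances.get(to, 0) + amount


token = WrappedToken(admin="governance-multisig")
token.grant("governance-multisig", "MINTER", "bridge-contract")
token.mint("bridge-contract", "alice", 5)
```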

Replay Protection

Use sequence numbers, timestamps or cryptographic proofs to uniquely identify contract calls, so that the same message can't be processed twice (double dipping).
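A minimal sketch of nonce-based replay protection on the destination side (all names are hypothetical). Each message commits to its source chain, destination chain and a per-source sequence number, so it can be neither processed twice nor replayed onto a different chain. Verifying that the message genuinely came from the source chain (signatures or proofs) is assumed to happen before `receive` and is out of scope here.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    source_chain: str
    dest_chain: str
    nonce: int  # per-source sequence number assigned by the sender
    payload: bytes

    def digest(self) -> str:
        h = hashlib.sha256()
        h.update(self.source_chain.encode() + b"|" + self.dest_chain.encode() + b"|")
        h.update(self.nonce.to_bytes(8, "big") + self.payload)
        return h.hexdigest()


class Inbox:
    """Destination-side message handler that rejects replays (illustrative sketch)."""

    def __init__(self, chain_id: str) -> None:
        self.chain_id = chain_id
        self.next_nonce: dict[str, int] = {}  # expected nonce per source chain
        self.processed: set[str] = set()

    def receive(self, msg: Message) -> None:
        # Binding the destination chain into the message stops cross-chain replays.
        if msg.dest_chain != self.chain_id:
            raise ValueError("wrong destination chain")
        if msg.digest() in self.processed:
            raise ValueError("replayed message")
        expected = self.next_nonce.get(msg.source_chain, 0)
        if msg.nonce != expected:
            raise ValueError(f"bad nonce: expected {expected}, got {msg.nonce}")
        self.processed.add(msg.digest())
        self.next_nonce[msg.source_chain] = expected + 1


inbox = Inbox("chain-b")
first = Message("chain-a", "chain-b", 0, b"mint 10 wBTC to alice")
inbox.receive(first)
try:
    inbox.receive(first)  # the same message submitted again
except ValueError as err:
    print(err)  # replayed message
```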

Oracle Manipulation (Consolidated Oracles)

Another important thing for smart contracts that depend on price feeds or similar information from oracles is to have secure methods for identifying manipulation and limiting what is possible from an outside perspective.

A great research paper to read on this is Consolidated Price Feeds, which lays out ways to ensure security and minimise trust when using oracles.
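One simple way to consolidate feeds, sketched below under our own assumptions (the parameter values are arbitrary, and this is not the method from the paper above), is to discard stale reports, take the median, drop outliers that deviate too far from it and refuse to return a price unless enough independent sources still agree.

```python
import statistics
from dataclasses import dataclass


@dataclass
class PriceReport:
    source: str
    price: float
    timestamp: int


def consolidated_price(reports: list[PriceReport], now: int, max_age: int = 60,
                       min_sources: int = 3, max_deviation: float = 0.02) -> float:
    """Aggregate independent feeds into one price, or raise if they can't be trusted."""
    # 1. Ignore stale reports.
    fresh = [r.price for r in reports if now - r.timestamp <= max_age]
    if len(fresh) < min_sources:
        raise ValueError("not enough fresh sources")
    mid = statistics.median(fresh)
    if mid <= 0:
        raise ValueError("non-positive median price")
    # 2. Drop feeds that stray too far from the median (possible manipulation).
    agreeing = [p for p in fresh if abs(p - mid) / mid <= max_deviation]
    # 3. Only answer if enough sources still agree; otherwise halt instead of guessing.
    if len(agreeing) < min_sources:
        raise ValueError("too few agreeing sources")
    return statistics.median(agreeing)


reports = [
    PriceReport("feed-a", 100.0, 1_000),
    PriceReport("feed-b", 101.0, 1_000),
    PriceReport("feed-c", 99.0, 1_000),
    PriceReport("feed-d", 150.0, 1_000),  # manipulated outlier
]
print(consolidated_price(reports, now=1_010))  # 100.0
```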

As may be clear from the above, there are a lot of trade-offs involved when it comes to smart contract risks. We hope the list above covers the majority of the risks involved, so that users and developers alike can start assessing them.

It should also be noted that even though some cross-chain messaging protocols do not rely on “smart contracts” per se, they still rely on code and protocol design that needs to be extensively audited and tested. This should go without saying, but it is still important to note. They should also be updated in line with many of the same ideas discussed above. A somewhat recent example of a non-smart-contract bug that resulted in an exploit is the BNB Chain bridge exploit, which was the result of outdated code based on an old IBC spec.

Trust Assumptions

Another important part to cover is the trust assumptions within the specific protocol being used. This is incredibly vital in the case of something like wBTC, which is handled by a centralised custodian. It’s also relevant even for rollup contracts that act as bridge smart contracts for their specific layer 2, since these are often controlled by a multi-sig or similar and in many cases are even upgradeable. In some cases the trust assumptions are small; in others, they’re quite large. What’s important to note, however, is that every bridge/messaging protocol has trust assumptions to some extent, and always will, even smart contract-free messaging protocols like IBC. In that case, you’re trusting the validator sets of the connected chains. Where a trusted third party operates the bridging protocol, such as with Axelar and Polkadot, you’re primarily trusting that party’s validator set. In those cases, you’re also often dependent on smart contracts that, at least in Axelar’s case, act as custodians of the funds being bridged to Axelar.

There Are Always Trust Assumptions

There are ways to address some of these trust assumptions, such as relying on ZKPs to verify the correctness of block headers on-chain. However, there’s still a trust assumption that the block header from the source chain (and its valset) is correct, so saying there aren’t trust assumptions involved is plain wrong. You’re also dependent on the on-chain verifier contract being correct, which is why open-source contracts should always be preferred and be subject to testing and formal verification. What this does prevent is reliance on external trust assumptions, and the fewer of these involved, the better. While we’ll never reach trustlessness, we can get relatively close and reach practical trustlessness: the point where trust lies solely within a decentralised network of sufficient cryptoeconomic accord. By accord, we mean that the cost of an attack would far outweigh the gain. Here it is also vital to consider that it’s in most network actors’ interest to ensure the long-term health of the network for their own gain. This leads us to the ever-present discussion of -EV and +EV actions, the role of Moloch, and our ability as a community to coordinate on and off-chain to fight off irrational actors.
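The “cost of an attack far outweighs the gain” condition can be expressed as a back-of-the-envelope check. The sketch below assumes, purely for illustration, that an attacker must acquire the share of stake needed to sign a fraudulent header and that this stake is slashed in full; the 10x safety margin is an arbitrary choice, not an industry standard.

```python
def attack_is_irrational(total_stake: float, corruption_threshold: float,
                         slash_fraction: float, value_at_risk: float,
                         safety_margin: float = 10.0) -> bool:
    """True if corrupting the validator set costs far more than the bridge could yield.

    total_stake:          value staked by the validator set securing the bridge
    corruption_threshold: share of stake needed to forge a message (e.g. 2/3)
    slash_fraction:       share of the attacker's stake that is destroyed
    value_at_risk:        value an attacker could extract (e.g. bridged TVL)
    """
    cost_of_corruption = total_stake * corruption_threshold * slash_fraction
    return cost_of_corruption >= safety_margin * value_at_risk


# $1bn staked, 2/3 needed to forge a header, fully slashable:
print(attack_is_irrational(1e9, 2 / 3, 1.0, value_at_risk=50e6))   # True
print(attack_is_irrational(1e9, 2 / 3, 1.0, value_at_risk=500e6))  # False
```

The second case is the worrying one: once the value bridged grows faster than the stake securing it, the margin disappears even if no code is buggy.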

Disparity in Trust

One thing that is vital to understand, though, is that there's a disparity in trust: the trust model varies from one blockchain to the next. Transferring data between blockchains with greater or lesser cryptoeconomic security could open the door to malicious actions, since a message is only as trustworthy as the weaker of the chains involved.

Multi-sig wallets require multiple signatures from different parties in order to authorize a transaction, making it much more difficult for a single entity to compromise the security of the system. Decentralized governance, on the other hand, allows for the collective decision-making of a community of users, making it harder for a single entity to gain control of the system. However, each multi-sig setup will be different from the next, offering various amounts of security and trust assumptions. The same goes for decentralised governance. Is there sufficient decentralisation among token holders? Do they have a governance implementation that favours security, for example Optimistic Approval? There are several important factors to take into consideration, and they often vary from protocol to protocol.
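The core of an m-of-n multisig check is small but easy to get wrong. This sketch abstracts away signature verification entirely (it assumes `approvals` holds signer addresses already recovered from valid signatures) and shows the two rules that matter: only authorised signers count, and each signer counts once.

```python
def multisig_approves(signers: set[str], threshold: int, approvals: list[str]) -> bool:
    """Return True if at least `threshold` distinct authorised signers approved."""
    if not 0 < threshold <= len(signers):
        raise ValueError("threshold must be between 1 and the number of signers")
    # Set intersection drops duplicate approvals and addresses outside the signer set.
    distinct_valid = set(approvals) & signers
    return len(distinct_valid) >= threshold


signers = {"a", "b", "c", "d", "e"}                           # a 3-of-5 setup
print(multisig_approves(signers, 3, ["a", "a", "a"]))        # False: one signer, three times
print(multisig_approves(signers, 3, ["a", "b", "mallory"]))  # False: outsider ignored
print(multisig_approves(signers, 3, ["a", "b", "c"]))        # True
```

Security then rests on how independent those keys really are: if several are held by the same entity, a 3-of-5 is effectively weaker than it looks.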

Replay Attacks and Liquidity

Another key thing that we covered slightly earlier is replay attacks. In a replay attack, an attacker broadcasts a previously recorded transaction on one blockchain to another, potentially allowing them to spend the same funds or assets multiple times. The obvious way to avoid this is to use light clients to cryptographically prove that said transactions have been done on each chain. However, that is not possible on every chain. The clearest recent example of this type of exploit is Nomad. Another option is to use sequence numbers or timestamps to ensure that each transaction has a unique identifier that cannot be reused - randomness is an important thing here as well.
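Light-client-based bridges ultimately check that a transaction is included under a block header they already trust. Below is a simplified Merkle inclusion proof in Python, assuming SHA-256 and a plain binary tree; real chains use their own tree formats (Ethereum's Merkle-Patricia trie, for example), so treat this as an illustration only. The `root` would come from a header the light client has already verified.

```python
import hashlib


def _leaf(data: bytes) -> bytes:
    return hashlib.sha256(b"\x00" + data).digest()  # domain-separated leaf hash


def _node(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()


def _next_level(level: list[bytes]) -> list[bytes]:
    if len(level) % 2:
        level = level + [level[-1]]  # duplicate the last node on odd-sized levels
    return [_node(level[i], level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves: list[bytes]) -> bytes:
    if not leaves:
        raise ValueError("no leaves")
    level = [_leaf(x) for x in leaves]
    while len(level) > 1:
        level = _next_level(level)
    return level[0]


def merkle_proof(leaves: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the flag is True if the sibling sits on the right."""
    level = [_leaf(x) for x in leaves]
    proof = []
    while len(level) > 1:
        padded = level + [level[-1]] if len(level) % 2 else level
        sibling = index ^ 1
        proof.append((padded[sibling], sibling > index))
        level = _next_level(level)
        index //= 2
    return proof


def verify_inclusion(root: bytes, leaf: bytes, proof: list[tuple[bytes, bool]]) -> bool:
    node = _leaf(leaf)
    for sibling, on_right in proof:
        node = _node(node, sibling) if on_right else _node(sibling, node)
    return node == root


txs = [b"tx0", b"tx1", b"tx2", b"tx3", b"tx4"]
root = merkle_root(txs)
print(verify_inclusion(root, b"tx3", merkle_proof(txs, 3)))     # True
print(verify_inclusion(root, b"forged", merkle_proof(txs, 3)))  # False
```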

One thing to also consider is where the liquidity for a specific asset lies. For example, is it in a source chain liquidity contract? Or is it like certain USD stablecoins, where the backing is actually held off-chain? There are a lot of trust assumptions to consider. We should aim to minimise trust assumptions, but we can never remove them completely.

Now that we’ve established some of the significant things to look out for when bridging your treasured assets, querying data or sharing state, let’s understand why bridging assets and state is vital.

Data movement and state sharing are the next step in enabling true composability across chains. They allow applications to extend beyond the boundaries of individual chains and to build protocols in a chain-agnostic way. With this, a module, account or smart contract on chain A can call or read the state of a smart contract, account or module on chain B. A great example of this is the ICQ (interchain queries) module within IBC. It is correspondingly vital to ensure the security of assets being bridged to various chains, as we and many others foresee a future where many chains, hopefully, co-exist.

General Analysis of Cross-Chain Messaging Protocols

This section will serve as a guide to basically every cross-chain bridging and messaging protocol in existence, covering their security measures and where their trust assumptions lie. If we missed a certain protocol, we apologise, but this section should still cover the vast majority of protocols that exist, and will even go into specific bridge contracts such as L2 bridge contracts.

We’ve already gone over how IBC works in depth in previous articles, such as this one and this one. If you’re interested in reading up on it, I recommend you do so. What’s important to underline, though, is that the primary trust assumption in IBC lies in the validator sets of the chains that have opened a channel. There are no smart contract risks; rather, you trust the valsets and their state.

As we covered in our Polymer article, there are various derivatives of IBC, by which we mean cross-chain messaging protocols that utilise IBC in one way or another. This first section will cover such protocols, namely Polymer and Composable. We’ve already done a comprehensive deep dive into Polymer, so we’ll keep that part short, as you should already be quite well acquainted with it. It is also important to note that we are investors in Polymer and Composable.

Polymer

An IBC routing protocol; more of a generalised cross-chain messaging protocol than a bridge.

Light Clients or Smart Contracts acting as a light client verifying block headers (signatures from validators)

Cryptographic proofs through validator signatures from validator sets: on non-Cosmos chains, a ZKP via recursion (Plonky2) with on-chain verification, which is their zkIBC implementation.

IBC logic in smart contracts

Can offer asynchronous bridging that relies on valsets of connected chains.

Enables data sharing and query. Not just an asset bridge.

Messages are committed on the source chain (validator signatures) and reach finality once block headers are checked on the destination chain.

Cryptoeconomic security is the stake of the connected chains’ valsets with IBC; for non-IBC chains, the trust assumption lies in the smart contracts deployed.

Composable

XCVM operates similarly to Polymer, meaning it enables native cross-chain protocols to be deployed, with state sharing, query ability and so on.

Centauri is their transport layer, which facilitates the actual communication. It is based on IBC and allows for state sharing as well. It essentially enables bridging from IBC chains through their Parachain, which is connected to its respective Relay Chain.

Operates with light clients as with IBC, using a Rust implementation of IBC to support the Polkadot ecosystem (Substrate). Light clients, or smart contracts/pallets acting as light clients, verify block headers (signatures from validators).

In this case, the trust assumption moves from two individual valsets to the valset of the Relay Chain in question (Polkadot or Kusama), which validates the state of the Parachain being bridged to from a chain that utilises IBC. This happens through an IBC pallet (similar to Polymer’s IBC contracts in Solidity on, say, Ethereum), which implements the IBC framework needed on their Parachain.

Watcher nodes to verify block validity in case of attacks on chains.

For chains with native light client support, the process is quite similar to how IBC works natively.

The next section will serve to explain how XCMP works, which is the native cross-chain messaging protocol of the Polkadot ecosystem. This is a natural follow-up to Composable since they indeed originate from that ecosystem.

XCMP

Parachains make a single trust assumption: since they all rely on Polkadot’s consensus, they trust the main Relay Chain. The same goes for Kusama and its parachains.

XCMP thus relies on Relay Chain and parachain validators to verify messages sent from parachains, and the process is finalised by a block on the Relay Chain.

It uses established channels, as with IBC. However, each submission needs to go through the Relay Chain, so parachains need to monitor state on the Relay Chain to confirm that messages have reached consensus. Parachain block headers are included in Relay Chain blocks to confirm message passing.

In this case, the Relay Chain effectively operates as the trusted third party (TTP), so XCMP is as safe as the valset of the Relay Chain, which is your trust assumption.

Parachains derive their security from the Relay Chain (Polkadot) through shared security.

This means that the total stake of the Polkadot network is the trust assumption and cryptoeconomic security of XCMP.

IBC

Requires a light client of each chain on the other chain to cryptographically verify consensus state between the two chains.

Requires relayers to relay information between the two chains. Relayers are required for liveness: the ability to exchange messages among nodes, with the nodes successfully coming to consensus.

The relayer does not relay messages to light clients on each chain. Instead, the exact recipient of messages that the relayer submits to each chain is the IBC module on that chain. Light clients are a small subcomponent of the IBC module.

IBC light clients only trust a specific header at initialization step (a root-of-trust, when the client is created). During that step, the client indeed trusts that the node which supplied the header is honest. After that step, they no longer have trust assumptions resting on specific full nodes being honest.

Trust assumption lies within the two validator sets of the connected blockchains

Cryptoeconomic security is the total stake of the chains in question
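The root-of-trust idea can be illustrated with a heavily simplified light client. It trusts one initial header, then accepts the next header only if validators holding more than two-thirds of the voting power it already trusts have signed it. Signature checking is abstracted into a `signers` set, and real IBC clients (see the ICS-07 Tendermint client spec) add trusting periods, skipping verification and misbehaviour handling on top of this; all names here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Header:
    height: int
    app_hash: str                    # commitment to the chain's state at this height
    next_validators: dict[str, int]  # validator -> voting power for the next height
    signers: frozenset[str]          # validators whose signatures verified (abstracted)


class LightClient:
    """Simplified sequential header verification from a root of trust."""

    def __init__(self, trusted: Header) -> None:
        # Root of trust: the only header accepted without checking signatures.
        self.latest = trusted

    def update(self, header: Header) -> None:
        if header.height != self.latest.height + 1:
            raise ValueError("expected the next height")
        valset = self.latest.next_validators
        total = sum(valset.values())
        signed = sum(power for val, power in valset.items() if val in header.signers)
        # Strictly more than 2/3 of the trusted voting power must have signed.
        if 3 * signed <= 2 * total:
            raise ValueError("insufficient voting power")
        self.latest = header


vals = {"v1": 10, "v2": 10, "v3": 10}
client = LightClient(Header(1, "h1", vals, frozenset()))
client.update(Header(2, "h2", vals, frozenset({"v1", "v2", "v3"})))  # accepted
try:
    client.update(Header(3, "h3", vals, frozenset({"v1", "v2"})))  # exactly 2/3
except ValueError as err:
    print(err)  # insufficient voting power
```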

To continue down the Cosmos route, let’s take a look at Gravity and Axelar, which both operate their own bridges via smart contracts on non-Cosmos SDK/Tendermint chains, connecting to their own chains, which function as a trusted third party and consensus network. These are relatively simple solutions, but they do enable the Interchain ecosystem to connect to the wider blockchain ecosystem. So let’s take a look at them as well.

Gravity Bridge

Runs a Cosmos chain as the consensus network, which secures and mints ICS assets that are usable in the Cosmos ecosystem.

Validators are required to run a full ETH node

Connected by a relayer network which, unlike relaying in IBC, is not trustless. This adds a trust assumption, since the validators control their Cosmos SDK zone and, as previously noted, run their own Ethereum nodes.

Liquidity bridged to Gravity is in smart contracts on Ethereum.

Non-upgradeable contracts; only slight logic upgrades are possible.

The trust assumption lies with the Gravity bridge valset, smart contract security risks and relayer network that has conflicting interests.

Axelar

Similarly to Gravity, it relies on validators of the Axelar chain to run nodes/light clients on the chains onboarded to Axelar.

They also batch up transactions similarly to Gravity

It also relies on smart contracts that need to be ported to the target language of newly onboarded chains. Axelar currently supports more chains than Gravity, which only services Ethereum. These aren’t liquidity smart contracts as such, but rather smart contracts that can call other contracts. In theory, they operate like a gateway or a light node through which messages that fit the logic supplied by Axelar are passed. Bridges like Satellite can then use this infrastructure to set up an actual liquidity bridge and bridge assets.

Allows for general message passing across chains, and targets applications that implement Axelar as a messaging protocol, much like IBC and what Polymer and Composable are targeting.

Allows apps to use its infrastructure, similarly to how LayerZero is not a bridge but Stargate, built on it, is. This is a solid comparison; however, keep in mind that Axelar operates its own consensus network, its Cosmos SDK chain, whose validators also run nodes for connected systems.

The trusted valset is currently 60 validators (although not every validator runs nodes on all connected chains; some run nodes for only a subset of them). There’s obviously smart contract risk to take into consideration as well, and, if you’re using a bridge connected via Axelar’s infrastructure, that bridge’s smart contracts too.

Next up, it’s quite relevant that we take a look at LayerZero, which has really taken the general cross-chain messaging world by storm and seen great traction. What’s important to note here again is that L0 is not a bridge, but rather a messaging protocol that you can build bridges with or use to call cross-chain smart contracts.

LayerZero

Two entities: an oracle and a relayer.

The oracle forwards block headers to the destination chain, while the relayer forwards the transaction proof from the source chain, which is checked against the header relayed by the oracle to attest that the transaction/message is valid.

Applications are given the flexibility to use LayerZero’s default oracle and relayer or to create and run their own. LayerZero is a cross-chain messaging protocol, not an actual token bridge, but you can build bridges with it, such as Stargate and others, which use it for messaging.

Endpoints, which are their version of Axelar’s Gateways or Polymer’s IBC logic smart contracts. They handle verification much as a light client would in IBC.

This does mean you, as with Axelar, are dependent on the LayerZero infrastructure and then the smart contracts connected to LayerZero.

If one of the two actors is dishonest, the transaction will fail. However, if both are dishonest, exploitation is possible, which could be a problem if you rely on centralised solutions or the application’s own oracle and relayer. As such, using decentralised oracle networks here is essential, as is having multiple options and possibly using consolidated oracles (refer back to the oracle part further up if needed).

Doesn’t rely on a trusted third-party chain, but instead on a modular architecture that can have varying degrees of security and trust.

As safe as the oracle/relayer solution being used as well as the natural smart contract risks.

Does not provide or depend on any cryptoeconomic security per se. However, it does rely on the cryptoeconomic security of the networks used: the economic security of the oracle used, the security of the relayer (is it a multisig?), and so on. It also has trust assumptions in the smart contracts that are used.
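To see why the oracle and relayer must be independent, here is a toy model of an endpoint that only delivers a message when the relayer's proof matches the root the oracle submitted. It is not LayerZero's actual contract interface: the class, its methods and the naive “proof” (the full list of the block's receipts, instead of a Merkle-Patricia proof) are simplifications for illustration.

```python
import hashlib


def commit(receipts: list[bytes]) -> str:
    """Stand-in for a receipts root: a hash over the block's receipts (hypothetical)."""
    h = hashlib.sha256()
    for receipt in receipts:
        h.update(hashlib.sha256(receipt).digest())
    return h.hexdigest()


class Endpoint:
    """Destination-side endpoint combining two independent parties (illustrative)."""

    def __init__(self, oracle: str, relayer: str) -> None:
        self.oracle, self.relayer = oracle, relayer  # chosen by the application
        self.roots: dict[int, str] = {}              # block number -> committed root
        self.delivered: list[bytes] = []

    def submit_root(self, caller: str, block: int, root: str) -> None:
        if caller != self.oracle:
            raise PermissionError("not the configured oracle")
        self.roots[block] = root

    def deliver(self, caller: str, block: int, message: bytes, proof: list[bytes]) -> None:
        if caller != self.relayer:
            raise PermissionError("not the configured relayer")
        root = self.roots.get(block)
        if root is None:
            raise ValueError("oracle has not submitted this block")
        # The relayer's proof must match what the oracle attested to.
        if commit(proof) != root or message not in proof:
            raise ValueError("proof does not match oracle root")
        self.delivered.append(message)


receipts = [b"msg-a", b"msg-b"]
endpoint = Endpoint(oracle="oracle", relayer="relayer")
endpoint.submit_root("oracle", 7, commit(receipts))
endpoint.deliver("relayer", 7, b"msg-a", receipts)  # honest pair: accepted

# If the oracle and relayer collude, a fabricated block is accepted too:
fake = [b"pay attacker"]
endpoint.submit_root("oracle", 8, commit(fake))
endpoint.deliver("relayer", 8, b"pay attacker", fake)
print(endpoint.delivered)  # [b'msg-a', b'pay attacker']
```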

Next, let’s look at what has been coined “Optimistic Bridges”. Here I am particularly referring to Nomad and some of their partners. There’s obviously a negative connotation here, but do remember that the Nomad exploit was not a result of the bridge mechanics, but rather of a smart contract exploit, which the network’s security measures failed to cover.

Optimistic Bridges

Transactions/data are posted to a contract function, à la the Endpoints/Gateways mentioned previously.

A bridge agent signs a Merkle root attesting that the data is correct and posts it. Any relayer can then post it to the desired destination chain. The agent has to bond tokens that are slashed if fraud happens.

After the data is posted, a fraud-proof window starts, during which any watchers of the chains (incentivised since they receive the agent’s bond) can prove fraud on the source chain, causing the bond to be slashed. However, on-chain slashing as such isn’t currently possible, so it has to happen in an off-chain network, usually semi-manually.

If no fraud proof is submitted within the time frame, the data is considered final on the destination chain and can call the contract there.

This technique has a low trust assumption, however, it relies heavily on smart contracts and watchers working. If there are any smart contract exploits, the watchers are unable to do anything.

They just need a single honest watcher, since only a single party needs to correctly verify updates.

This does mean that the bonded slashable stake doesn’t need to be extremely high, since you’re relying on just one party being honest.

Needs decentralised agents, otherwise, a single one can halt the system.

A fee on watchers is needed to prevent DoS. However, this means that if there’s never any fraud, watchers never gain anything, so different incentivisation schemes are needed. Otherwise you’re relying on the bridge’s own actors to run the watchers, which also leads to centralisation, since the protocol would be running everything.

Cryptoeconomic security is dependent on the specific networks used. It could be the cryptoeconomic security of the watchers or agents that have bonded tokens to be allowed to participate, etc. There are various ways to limit their reach, as discussed previously.
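The optimistic flow described above can be modelled as a small state machine. This sketch (hypothetical names, times in seconds) assumes the idealised case where slashing happens automatically on-chain, which, as noted above, is currently handled off-chain in practice; whether a fraud proof is valid is abstracted into a boolean.

```python
from dataclasses import dataclass


@dataclass
class Update:
    root: str
    posted_at: int
    bond: int
    fraudulent: bool = False


class OptimisticInbox:
    """Hypothetical optimistic verification: roots are accepted unless disproven in time."""

    def __init__(self, window: int, min_bond: int) -> None:
        self.window = window      # length of the fraud-proof window
        self.min_bond = min_bond  # stake the agent must put at risk
        self.updates: dict[str, Update] = {}
        self.watcher_rewards: dict[str, int] = {}

    def post(self, root: str, bond: int, now: int) -> None:
        if bond < self.min_bond:
            raise ValueError("bond too small")
        self.updates[root] = Update(root, now, bond)

    def prove_fraud(self, watcher: str, root: str, now: int, fraud_proven: bool) -> None:
        # `fraud_proven` stands in for checking the root against the source chain.
        update = self.updates[root]
        if now >= update.posted_at + self.window:
            raise RuntimeError("fraud-proof window closed")
        if fraud_proven and not update.fraudulent:
            update.fraudulent = True
            # Slash the agent: the bond goes to the watcher who caught the fraud.
            self.watcher_rewards[watcher] = self.watcher_rewards.get(watcher, 0) + update.bond
            update.bond = 0

    def is_final(self, root: str, now: int) -> bool:
        update = self.updates.get(root)
        return (update is not None and not update.fraudulent
                and now >= update.posted_at + self.window)


inbox = OptimisticInbox(window=1_800, min_bond=100)
inbox.post("root-good", bond=100, now=0)
inbox.post("root-bad", bond=100, now=0)
inbox.prove_fraud("watcher-1", "root-bad", now=600, fraud_proven=True)
print(inbox.is_final("root-good", now=1_800))  # True: window passed unchallenged
print(inbox.is_final("root-bad", now=1_800))   # False: fraud was proven
print(inbox.watcher_rewards)                   # {'watcher-1': 100}
```

The length of `window` is the main trade-off: longer windows give watchers more time to react but delay every transfer.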

Next, let’s cover Multi-Party Computation (MPC) bridges, some of which also rely on their own trusted third-party, externally verified consensus network, such as a blockchain. Two examples of such solutions are Qredo and Chainflip. Synapse also works somewhat similarly, with its leaderless MPC valset.

Multi-Party Computation (MPC) With External Validators

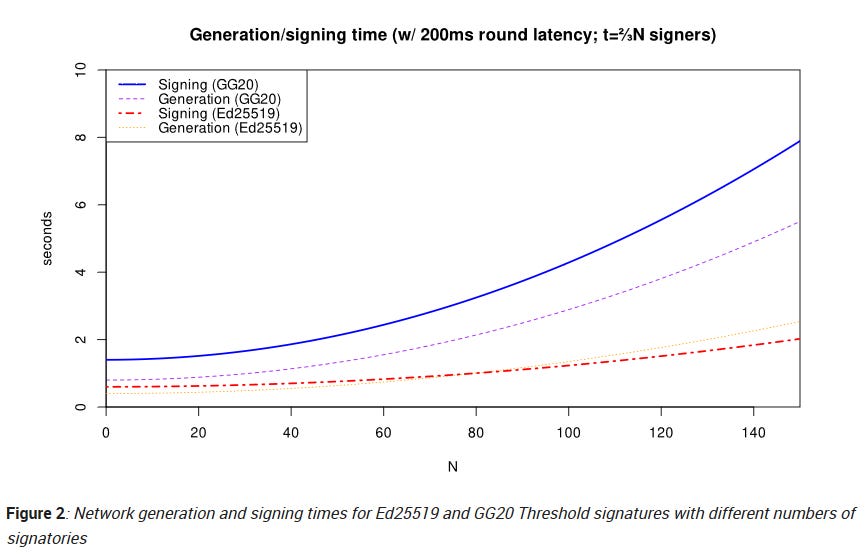

MPC bridges typically use a threshold signature scheme, which allows several nodes to jointly create signatures under a single key without any one of them holding the whole key. This makes it harder to collude and take over an account.

"External validators" refers to the protocol running a network or chain of validator nodes. These nodes verify signatures, and call and control the gateway smart contract functions on the chains the MPC wallet wants to bridge to. This can also be referred to as a middle chain approach.

Many MPC solutions also employ various forms of hardware security.

If an unauthorised attempt is made to access accounts, the keys stored within that node are destroyed. This leaves social engineering as the only way to gain access (which we have seen succeed before).

This also means that these bridges are often limited to a few individuals and are not as widely available. They are typically focused on institutional use.

There is a trust assumption that the protocol in use has set up the bridge gateway contracts properly. There are also security risks in the various smart contracts, and social engineering is a valid concern here too.

The middle chain is often used to record the ownership of assets for users.

The gateways should be controlled by a decentralized multisig/node network for optimal security assumptions.

In these cases, the middle chain often provides the cryptoeconomic security for the system. Because it records and changes who owns assets, it is the trusted third party.

Keep in mind that the latency of the system can increase with the number of signatories used, as seen below:

The various solutions end up looking somewhat like this:

The previous two solutions can also be seen as application-specific bridges, a type of chain we alluded to earlier. Another chain fits into this grouping, and it is the one people usually think of: THORChain, whose native token is RUNE.

Rune (THORChain)

THORChain essentially acts as an external validator that operates leaderless vaults on connected chains. Because it acts as a middleman between the bridged chains, it also provides the cryptoeconomic security of the entire network. This makes it one of the trust assumptions that need to be made.

A state chain coordinates asset and exchange logic while delegating transaction output.

This works via an “endpoint” on each connected chain that handles the specific transactions.

Each signer is required to run a full node for every connected chain.

Each chain’s client is relatively lightweight and only contains the logic needed to call contracts on that particular chain. The main logic is in the observer clients that operate on THORChain itself. Let’s sketch it out so you can get a better idea:
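The Python sketch below models this observer pattern. Each signer reports what its own full node saw on a connected chain, and the state chain only acts once enough signers agree. The two-thirds threshold, the `StateChain` class and its methods are assumptions for illustration. They are not THORChain’s actual code or parameters.

```python
from collections import defaultdict
from fractions import Fraction

# Assumed confirmation threshold: at least two-thirds of active signers.
SUPERMAJORITY = Fraction(2, 3)


class StateChain:
    """Toy state chain that acts only on inbound transactions a supermajority observed."""

    def __init__(self, signers):
        self.signers = set(signers)
        # (chain, tx_id, amount) -> signers who reported exactly that observation
        self.observations = defaultdict(set)
        self.confirmed = set()
        self.outbound_queue = []  # work handed to the per-chain "endpoints"

    def observe(self, signer, chain, tx_id, amount):
        """Record one signer's report from its own full node; return True on confirmation."""
        if signer not in self.signers:
            raise PermissionError(f"{signer} is not an active signer")
        key = (chain, tx_id, amount)
        self.observations[key].add(signer)
        share = Fraction(len(self.observations[key]), len(self.signers))
        if key not in self.confirmed and share >= SUPERMAJORITY:
            self.confirmed.add(key)
            self.outbound_queue.append(key)
            return True
        return False
```

Keying observations by `(chain, tx_id, amount)` means that signers reporting conflicting amounts do not count towards the same confirmation.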

To finish off our analysis, let’s take a look at a type of bridge that’s often not referred to as a bridge at all, but rather as an L2 rollup contract. These are the contracts on Ethereum that L2s communicate with, where assets are locked and then “minted” on the rollup. At this point in time, these contracts hold billions of dollars in assets, so their validity is incredibly important. Let’s take a look at how they work and what type of security they provide.

Rollup Bridge Contracts

They function much like a bridge: assets are locked in an L1 smart contract and then become available on the L2.
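The lock-and-mint relationship can be sketched in a few lines of Python. The `RollupBridge` class is hypothetical and deliberately ignores how withdrawals are actually proven. In practice, optimistic rollups use a challenge period and ZK rollups use a validity proof. The sketch only shows the accounting invariant that the L1 contract has to protect.

```python
class RollupBridge:
    """Toy model of an L1 escrow contract paired with its L2 token ledger."""

    def __init__(self):
        self.l1_locked = 0   # assets held by the L1 contract
        self.l2_balances = {}  # balances "minted" on the rollup

    def deposit(self, user: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.l1_locked += amount  # lock on L1...
        self.l2_balances[user] = self.l2_balances.get(user, 0) + amount  # ...mint on L2

    def withdraw(self, user: str, amount: int) -> None:
        if amount <= 0 or self.l2_balances.get(user, 0) < amount:
            raise ValueError("insufficient L2 balance")
        self.l2_balances[user] -= amount  # burn on L2...
        self.l1_locked -= amount  # ...release from the L1 escrow

    def is_solvent(self) -> bool:
        # The invariant: L2 supply must never exceed what is locked on L1.
        return sum(self.l2_balances.values()) <= self.l1_locked
```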

Rollup state and proofs are verified by the rollup’s contracts on the L1, giving cryptographic security that is somewhat derived from the underlying layer. If a validity proof is needed, it is sent to and verified by a verifier contract on the L1.

Most rollups have upgradeability in their smart contracts, which is obviously a risk that needs to be taken into consideration.

Most rollups allow transacting via the L1, or forcing an exit to it, if the sequencer fails.

This makes them essentially trust-minimized bridges. However, there are significant smart contract risks, as well as trust assumptions around who controls those contracts.

Now that we’ve covered the vast majority of the solutions currently used for cross-chain messaging protocols and bridges, let’s look at another very important part of their security: the economics of the protocol. Of particular interest is the cryptoeconomic security of a protocol whose token grants governance powers or allows you to take over a vital part of the protocol itself.

Bridge Economics

Let’s start with an assumption: there’s a bridge protocol with a governance token that allows voting on protocol upgrades or taking control of core protocol functions. If the total value locked in the protocol far outweighs the cost of acquiring enough tokens to reach quorum and pass a proposal, then there’s clear value in attacking the protocol. This simple assumption is why economics and governance functions are so vital to bridges. On the governance side, one way to mitigate this is a slightly more nuanced principle called Optimistic Approval, as covered earlier.
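The assumption can be turned into a back-of-the-envelope check, sketched in Python below. It is deliberately naive. It ignores price impact when buying tokens, borrowed voting power, timelocks, and vetoes such as Optimistic Approval. The figures in the example are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class GovernanceParams:
    token_price_usd: float  # market price of one governance token
    quorum_tokens: float    # votes needed to pass a proposal
    tvl_usd: float          # value a malicious upgrade could take control of


def attack_cost_usd(p: GovernanceParams) -> float:
    # Naive cost: buy exactly enough tokens to meet quorum at the current price.
    return p.token_price_usd * p.quorum_tokens


def attack_margin_usd(p: GovernanceParams) -> float:
    # A positive margin means the locked value exceeds the cost of passing a proposal.
    return p.tvl_usd - attack_cost_usd(p)


params = GovernanceParams(token_price_usd=2.0, quorum_tokens=4_000_000, tvl_usd=500_000_000)
print(attack_cost_usd(params), attack_margin_usd(params))  # 8000000.0 492000000.0
```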

Cryptoeconomic security is a crucial aspect of blockchain bridges and cross-chain messaging protocols. It requires a carefully designed and implemented system of incentives and disincentives to ensure the security and integrity of the system. This is why systems that don’t rely on a trusted third party’s cryptoeconomic security work best. Relying on a bridge’s cryptoeconomic security to secure assets on various chains obviously isn’t as secure as relying on the cryptoeconomic security of the chains themselves.

However, most bridges and cross-chain messaging protocols currently rely on the economics of a trusted third party. This can work well when that valset is incredibly secure, but it can falter in other situations. Other mechanisms can also be used to secure participating networks. One example is Verifiable Delay Functions (VDFs), which could add randomness to the selection of participating oracles in networks that rely on such actors.
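The Python sketch below shows how a delay-based randomness source could feed oracle selection. It implements the evaluation step of an RSA-style VDF (repeated squaring), followed by a hash-based ranking of oracles. Because the computation needs many sequential steps, whoever contributes the last part of the seed cannot cheaply predict, and therefore bias, the outcome. The sketch omits the succinct proof (for example Wesolowski’s or Pietrzak’s construction) that lets others verify the output cheaply, so verifiers here would have to recompute it. The modulus and helper names are hypothetical.

```python
import hashlib

# Toy modulus: product of two known Mersenne primes. A real VDF needs a modulus
# whose factorisation nobody knows (or a class group), since knowing the factors
# lets anyone shortcut the delay.
MODULUS = (2**61 - 1) * (2**89 - 1)


def vdf_eval(seed: bytes, iterations: int, modulus: int = MODULUS) -> int:
    """Compute y = x^(2^T) mod N by T sequential squarings (no proof of correctness)."""
    x = int.from_bytes(hashlib.sha256(seed).digest(), "big") % modulus
    for _ in range(iterations):
        x = x * x % modulus  # each squaring depends on the previous result
    return x


def select_oracles(oracles: list[str], k: int, vdf_output: int) -> list[str]:
    """Rank oracles by hash(vdf_output, oracle_id) and take the first k."""
    def score(oracle: str) -> bytes:
        return hashlib.sha256(f"{vdf_output}:{oracle}".encode()).digest()
    return sorted(oracles, key=score)[:k]
```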

Another economic aspect to take into account is networks that rely on cryptoeconomic value, such as multisigs, valsets, MPC or other threshold schemes. These often rely on an honest-majority assumption. This means the cost to attack these bridges is the cost to corrupt or take over 51% of the network.
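One way to reason about the honest-majority assumption is to count how few validators would need to collude to exceed the threshold, and how much stake they control. The Python sketch below assumes stake-weighted voting. `min_colluders` is a hypothetical helper, and the stake figures are illustrative.

```python
def min_colluders(stakes: dict[str, float], threshold: float = 0.5) -> tuple[list[str], float]:
    """Smallest set of validators whose combined stake exceeds `threshold` of the total.

    Picking by descending stake is optimal: the k largest stakes give the
    maximum combined stake achievable with any k validators.
    """
    total = sum(stakes.values())
    chosen, acc = [], 0.0
    for name, stake in sorted(stakes.items(), key=lambda kv: kv[1], reverse=True):
        chosen.append(name)
        acc += stake
        if acc > threshold * total:
            return chosen, acc
    raise ValueError("threshold cannot be exceeded")


print(min_colluders({"v1": 30, "v2": 25, "v3": 20, "v4": 15, "v5": 10}))  # (['v1', 'v2'], 55.0)
```

Multiplying the returned stake by the token price gives a rough cost of corruption. This can then be compared against the value the bridge secures.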

Trusted Third Parties

The use of trusted third parties in blockchain bridging has both advantages and disadvantages. On the one hand, these third parties provide a centralized and trusted point of coordination, which can make it easier to manage and secure the transfer of assets and data between the different networks. This can be particularly useful in situations where the different networks have different security and consensus models, or where there is a need for additional levels of oversight and control.

On the other hand, the use of trusted third parties introduces a potential point of failure in the system. If the third party were to be compromised, it could potentially lead to the loss of assets or data. Additionally, the centralization of authority in a trusted third party can potentially undermine the decentralization and trustlessness of the system itself.

Rate Limiting

Rate limiting is another economically relevant measure that could help limit the damage an attack causes. By rate limiting, we mean capping the total USD value that can be bridged per hour, so that emergency measures can be put in place to save the rest of the bridge’s funds.

Obviously, while this adds some security, it causes issues on the UX side, especially for very popular bridges or large bridging users. For example, you could limit the total amount that can be bridged to 10M USD every hour. The vast majority of users/retail won’t notice, and it would limit the possible damage of an exploit to a much smaller amount. This would have been quite useful in past exploits.
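A rolling-window limiter is one straightforward way to implement the 10M USD per hour example. The Python sketch below is hypothetical. It assumes some price feed already supplies the USD values, and it simply rejects transfers over the cap, where a real bridge might queue them instead.

```python
from collections import deque


class BridgeRateLimiter:
    """Rolling-window cap on the total USD value bridged (hypothetical parameters)."""

    def __init__(self, cap_usd: float, window_seconds: int = 3600):
        self.cap = cap_usd
        self.window = window_seconds
        self.transfers = deque()  # (timestamp, usd_value) of accepted transfers
        self.in_window = 0

    def _expire(self, now: int) -> None:
        # Drop transfers that have left the rolling window.
        while self.transfers and self.transfers[0][0] <= now - self.window:
            _, value = self.transfers.popleft()
            self.in_window -= value

    def try_bridge(self, usd_value: float, now: int) -> bool:
        self._expire(now)
        if self.in_window + usd_value > self.cap:
            return False  # over the cap: reject (or queue) until older transfers expire
        self.transfers.append((now, usd_value))
        self.in_window += usd_value
        return True
```

A rolling window was chosen over fixed hourly buckets because a fixed bucket allows up to twice the cap to move in a short burst straddling the boundary between two hours.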

Is there a magic number for what the rate limit should be? Not really. It depends on the adoption of the bridge and on which chain is being bridged to. For example, an Arbitrum<>ETH bridge would need a high limit because of its popularity. In contrast, a gaming-specific app chain or rollup doesn’t need a ton of value to play a game, and you’d rather ensure the security of users’ funds. In this case, rate limiting would make a lot of sense.

The negatives are obvious: throttling and pricing out big players. But does the added security outweigh them?

One solution could be to implement a “highway of bridge lanes”, with different bridges for different solutions and people. One obvious downside is the fragmentation of liquidity and volume this would bring. For a gaming-specific rollup, it makes sense to rate limit at lower amounts. You won’t need big amounts there, and you want to ensure the security of your users’ funds under any circumstance.

Some other things to keep in mind are:

DoS attacks: an attacker could use up the limit to block other users, so you’d need to implement considerable fees on big volumes.

You want to optimise for growth, but rate limiting puts a slight brake on it.
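The Python sketch below shows one possible shape for those fees. It prices transfers by how full the current rate-limit window is, rather than by the size of each individual transfer. That way, splitting a large volume into many small transfers doesn’t dodge the fee. The quadratic curve, the rates and the function name are assumptions chosen for illustration.

```python
def quote_fee_usd(amount_usd: float, used_usd: float, cap_usd: float,
                  base_rate: float = 0.0005, max_rate: float = 0.05) -> float:
    """Fee for a transfer, based on window utilisation after the transfer is added."""
    if amount_usd <= 0 or cap_usd <= 0:
        raise ValueError("amount and cap must be positive")
    utilisation = min((used_usd + amount_usd) / cap_usd, 1.0)
    # Near the base rate while the window is mostly empty, rising steeply as it fills.
    rate = base_rate + (max_rate - base_rate) * utilisation ** 2
    return amount_usd * rate
```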

As has been clear throughout this article, it’s trade-offs all the way down, and rate limiting is no exception. However, it is an area we would love to see more experimentation in. In most of these cases there is no clear optimum, but trying out new and daring things in this space is generally worth doing. No design is ideal by every measure, but safety and security should be the biggest concern for any protocol. You could say rate limiting allows us to sacrifice UX for the few to provide security for the many.

Interoperability and Composability

Interoperability is often used to describe the seamless transfer of assets between applications and chains. Composability, by contrast, describes the idea of shared infrastructure between those applications, such as deploying an application on several chains with minimal work. This is the type of work being done by the cross-chain messaging protocols described previously.

Composability is really only sustainable if transaction costs between chains and applications are low; otherwise, it loses the principal value it grants you. Composability leads to more choices for the end user and the ability to seamlessly perform actions across various ecosystems and applications. It also removes prior obstacles, such as having to build ecosystems from scratch.

Applications become composable when they’re able to interact with other applications, such as automated liquidity positions, lending, etc.

We’ve tried to be as objective as possible and provide a clear overview of the current landscape of cross-chain interoperability, and we hope it has been of value to you as a reader. If there’s any point you disagree with or feel was misrepresented, do reach out to the writer, @0xrainandcoffee, on Twitter.

Thanks to Runtime Verification for input on the smart contract part, as well as to the other readers of the article, who have helped.

For further reading on trust minimisation in bridging, I highly recommend the Clusters piece by Mustafa from Celestia.