Commoditising Your Complements With Modular Architecture

Commoditising application complements while retaining control


In our last article, we focused primarily on transaction ordering and how applications can use it to their advantage through customisation and specialisation. In this article, we will touch on some of the other avenues you can take to optimise your environment.

However, before we dig too deep into the various specialisations and customisations this opens up, we think it is important to explain part of the microeconomic reasoning behind them: the idea of commoditising your complements and bringing value back to the protocol and its users.

The wider crypto community has long had a complements problem. The vast majority of products, infrastructure and sources of derivable security haven't been commoditised, so building applications with more control is expensive. This is changing.

The idea of commoditising your complements was popularised back in 2002 by Joel Spolsky in his essay "Strategy Letter V", which is worth reading in full. Its core goal is to increase profitability, innovation and user acquisition. It achieves this by commoditising your complements, the things your application relies on, such as API costs, hardware and user acquisition (for example, offering free software that draws users into your ecosystem). This can also lead to success for the things you either rely on or are directly involved with.

What we're starting to see across several parts of the modular stack is multiple competitors for every single service. This means competitors now have to differentiate: be cheaper, offer white-glove services or hand out grants to attract new developers. This is a vicious cycle, as you're forced to give away significant incentives or time. One way to attract developers in this situation is to develop competitive open-source software (cheaper or free) that acts as a funnel towards the project; the various rollup SDKs, such as Rollkit, are a good example. We are now seeing data availability become commoditised to some extent (there are obviously degrees of quality to that blockspace, which we won't get into right now). This commoditisation also means we're seeing a large influx of rollups of all shapes and kinds. The "commoditisation" of blockspace (specifically data availability, though we expect the same to happen with other parts of the modular stack), driven by lower prices, has increased demand.

Another example we like to use is sequencing and how it relates to Rollups-as-a-Service (RaaS). As mentioned previously, we see shared sequencers as the premier RaaS providers of the future. They can provide the service much more cheaply, with the added benefit of composability. Beyond that, it is also much easier for the RaaS provider (in this case, the shared sequencer) to monetise via economies of scale. Shared sequencing and its economics can, to some extent, be compared to the way Visa operates: it is essentially a volume-driven business with relatively thin margins on a per-rollup basis (although you can take a sequencing fee, and perhaps capture some notion of MEV). Visa takes only a small cut of each transaction, yet its revenues are substantial. If we were to predict where the vast majority of RaaS customers will go (beyond those needing very specific, white-glove services), we foresee them going to shared sequencers such as Astria, Espresso, Radius and Madara.
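To make the Visa comparison concrete, here is a toy model of a shared sequencer's monthly economics. Every number in it (the per-transaction fee and cost, the fixed infrastructure cost and the per-rollup volume) is a hypothetical assumption, not data from Astria, Espresso, Radius, Madara or anyone else. The point is only that a constant, thin margin turns into meaningful profit as the number of rollups served grows, while the shared fixed cost per transaction falls.

```python
def monthly_profit(n_rollups: int, txs_per_rollup: int,
                   fee_per_tx: float, cost_per_tx: float,
                   fixed_cost: float) -> float:
    """Profit for a hypothetical shared sequencer serving n_rollups."""
    volume = n_rollups * txs_per_rollup
    # Thin per-transaction margin, multiplied by total volume, minus shared fixed costs.
    return volume * (fee_per_tx - cost_per_tx) - fixed_cost


def fixed_cost_per_tx(n_rollups: int, txs_per_rollup: int, fixed_cost: float) -> float:
    """Economies of scale: shared fixed costs are amortised over all rollups' transactions."""
    return fixed_cost / (n_rollups * txs_per_rollup)


if __name__ == "__main__":
    # Hypothetical parameters: roughly a 10% gross margin per transaction.
    FEE, COST, FIXED, TXS = 0.001, 0.0009, 50_000.0, 10_000_000
    for n in (10, 100, 1_000):
        print(f"{n:>5} rollups: profit={monthly_profit(n, TXS, FEE, COST, FIXED):>12,.0f}, "
              f"fixed cost/tx={fixed_cost_per_tx(n, TXS, FIXED):.6f}")
```

With these made-up inputs the sequencer loses money at 10 rollups and only becomes profitable once volume passes the break-even point, which is the "volume-driven business" dynamic described above.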

Beyond lowering the cost of your complements, commoditising them also ties into the idea of growing the market around you (the things you depend on). This creates value for you while building moats and monopolistic tendencies around your product. We see this especially in Web2 with open-source software, and it is likewise becoming a trend in Web3. It could also be something as simple as Tesla wanting to lower the cost of making batteries, since its cars depend on them. Or, in blockchain terms, a chain wanting to lower the cost of key aspects of itself, such as letting low-cost hardware sample data availability (e.g. Celestia).

On the topic of growing the market around you, and thereby growing yourself, one "crypto-native" idea we see is airdropping to the protocols you use in your setup. This is likely to grow the user base of both the underlying protocols and your own, and to direct narrative and attention towards your product. After all, one of the most powerful aspects of blockspace is its alignment, although realistically, in a perfect market, blockspace would be chosen on quality (determinism, block times), ease of verifiability, the liquidity on top of it, cost, etc.

Inefficient systems, high costs and the inability to customise applications stifle innovation. Commodification removes these hindrances and allows developers to think outside the box, as they're neither held back by costs nor shackled to a set rulebook. Crypto is more BD- and attention-driven at this point, but as the industry matures, this will become more vital.

This can also be applied to how we build applications on and with blockchains. I started touting this idea before and during ETHDenver, beginning with the idea of an application controlling its own ordering (while retaining security) and how this can help monetisation, customisation and valuations. We think a few general examples will help explain where this commodification is happening and how you can take advantage of it.

Data Availability (Celestia, Avail, EigenDA)

Rollup SDKs (Sovereign, Rollkit, OP Stack, ZK Stack)

Shared Sequencing (Astria, Espresso, Superchain etc.)

MEV-alignment + Auctions (SUAVE, Intents)

Economic Security (ICS, Eigenlayer, Babylon)

Solvers (Outsource execution and pricing)

VMs (zkVMs)

Keep in mind that some of these have already been commoditised to a certain extent, while others have yet to be. Some (like solvers) are less likely to be commoditised and are instead incentivised to present the best possible outcomes. That said, there's a case to be made that solvers will become commoditised over time (as market makers have), although, as with searching, there are always ways to improve (which are less "clear" in a perfect world).

Specifically regarding the commoditisation of zkVMs and its effect on the surrounding complements (such as rollups), we're seeing a shift towards generalised zkVMs rather than custom circuits. This is becoming possible by running a rollup's State Transition Function (STF), written in Rust, through compatible generalised zkVMs such as Risc Zero and Succinct. While a fully-fledged custom-circuit zkVM for a specific blockchain VM is definitely more optimised, generalisable ones are catching up, and they mean less maintenance and upgrading as the VM in question evolves. Sovereign Labs (one of the SDKs mentioned above) is going down this route. This provides a large degree of customisation of the application logic while retaining the scalability and security of ZK.

Another important point concerns ZK acceleration. Optimising software and hardware acceleration for specific schemes, algorithms and VMs is much easier for a generalisable VM than for use cases built on a custom circuit setup. Integration is also likely to be easier, and more worthwhile, for hardware acceleration companies, since there is a larger potential customer base to tap into. We're already seeing this with companies like Ingonyama, which prioritise integrations with specific, highly utilised products. As a user of a generalisable zkVM, this means your "customer support" is generally going to be much better.
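The idea of running a rollup's STF as ordinary code inside a general-purpose zkVM can be illustrated with a toy example. The sketch below is in Python purely for readability (real zkVM guest programs, e.g. for Risc Zero, are typically written in Rust), and the account-balance state, the transaction tuple and the `state_root` commitment are hypothetical stand-ins rather than any SDK's API. What it tries to show is the shape: the STF is a deterministic function from (previous state, ordered transactions) to a new state, and a zkVM proof would attest that the published post-state commitment really results from running that function, without a custom circuit for the application logic.

```python
import hashlib
import json
from typing import Dict, List, Tuple

State = Dict[str, int]        # hypothetical: account -> balance
Tx = Tuple[str, str, int]     # hypothetical: (sender, recipient, amount)


def state_root(state: State) -> str:
    """Toy commitment: SHA-256 of the canonically serialised state (not a real Merkle root)."""
    encoded = json.dumps(state, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


def stf(state: State, txs: List[Tx]) -> State:
    """Deterministic state transition: apply transfers in order, skipping invalid ones."""
    new_state = dict(state)  # never mutate the pre-state
    for sender, recipient, amount in txs:
        # Validity check; application-specific rules would also live here.
        if amount <= 0 or new_state.get(sender, 0) < amount:
            continue
        new_state[sender] -= amount
        new_state[recipient] = new_state.get(recipient, 0) + amount
    return new_state


# In a zkVM setting, this logic would be the "guest" program, and the pair
# (pre_root, post_root) is what a proof would commit to.
pre = {"alice": 10, "bob": 0}
post = stf(pre, [("alice", "bob", 4), ("bob", "carol", 10)])
print(state_root(pre), "->", state_root(post))
```

Because the second transfer exceeds Bob's balance, it is skipped; changing a rule like this is just an edit to ordinary code, which is the customisation benefit of the generalised-zkVM route.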

While many of these zkVMs are open source, one might ask where the monetisation comes from. One thing that has become quite clear is that monetising software, and open-source software in particular, is quite difficult, especially in web3. However, as explained earlier in the discussion of commoditising complements, for-profit companies providing open-source software is not a new idea; it is used as a funnel. Risc Zero is a great example: its open-source zkVM funnels developers into its ecosystem, and they are all potential customers of "monetisable offerings" such as Bonsai. We're likely to see the same trend with Succinct, Modulus and other ZK companies.

The bottom line is that as the prices of a product's complements decrease, demand for the product increases. So if an Ethereum with higher gas limits and block sizes, alongside state pruning and keeping active state in memory rather than on disk, decreased the cost of using Ethereum, usage of Ethereum would in turn increase. And the greater the demand, the larger the profits.
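A minimal worked example of this relationship, assuming a linear demand curve in which users respond to the total price they pay (the product's own margin plus the price of its complements). The demand parameters and prices are hypothetical, chosen only to keep the arithmetic easy to follow.

```python
def quantity_demanded(total_price: int, max_demand: int = 1_000_000, slope: int = 10_000) -> int:
    """Hypothetical linear demand curve: Q = max(0, a - b * P)."""
    return max(0, max_demand - slope * total_price)


def product_profit(margin: int, complement_price: int) -> int:
    """Users pay for the product and its complements together (e.g. app fee + gas/DA)."""
    quantity = quantity_demanded(margin + complement_price)
    return quantity * margin


# Same product margin, cheaper complement:
print(product_profit(margin=10, complement_price=50))  # 4000000 (400,000 units * 10)
print(product_profit(margin=10, complement_price=20))  # 7000000 (700,000 units * 10)
```

The product's own margin never changes; all of the extra profit comes from the complement getting cheaper.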

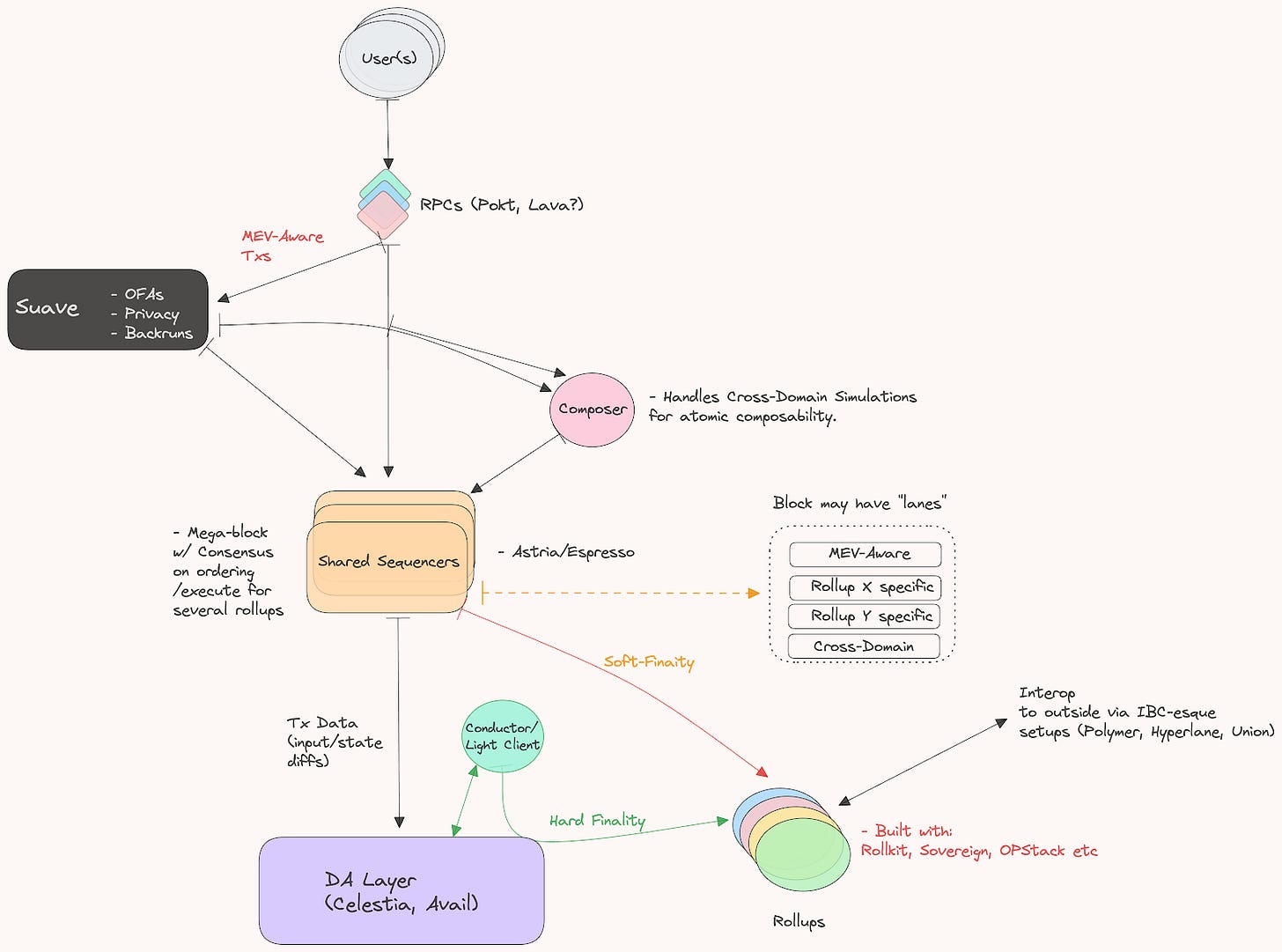

To give a clearer overview of how we think about utilising commoditised complements within the modular stack, let's look at what this could mean for an application developer building an application-specific rollup to take advantage of customisation. It is important to note that, to an end user, all of this optionality in design choices will be abstracted away. Essentially, the end goal is to provide web2 UX with web3 security.

In this case, an application built as infrastructure (rollup) has outsourced much of the work to its complements and is instead focused on providing the best possible product and user experience without having to deal with orchestrating everything that makes an efficient blockchain, efficient.

What this also signals is that the ever-famous API economy of web2 is coming to crypto as well, slowly but surely.

Now, when you open up and commoditise the complements you surround yourself with, you can also customise and specialise your infrastructure and environment to your application and, therefore, to the end consumer's needs. We covered part of this in our last piece on ordering. However, developers have other important needs that go beyond ordering. One point that is important to get across is that offering choice is not a bad thing: in our opinion, choice and free, permissionless markets are vital for innovation. Ultimately, customers will, through market forces, settle on the products they deem viable.

A few examples of this (beyond the fair ordering, verifiable sequencing rules and MEV-extraction ideas we presented in our previous article) are:

Resource Pricing Efficiency (beyond the fees paid for complements)

Warehousing of blockspace

Custom opcodes, precompiles, curves (e.g. an Aligned-esque setup)

Alternative VMs

Gas limits & block times

State DB/Merklisation optimisations, hardware changes

Formal Verification

Monitoring & Response w/ Fraud Proofs and Assertions on an ordering level - there's an entire discussion to be had here on "good" censorship as well.

Oracle Extractable Value (OEV) w/ Protocol-Owned-Oracles (BBOX/Warlock). Providing this with a high degree of "security" is much easier at the rollup level.

One very important fact to note is that commoditising complements is not always done in a collaborative or "friendly" way; it can also be used to make competitors irrelevant. These forays can be attempts to commoditise another project elsewhere in the stack, or even a defence against others doing the same. This is also visible in the crypto VC space for portfolio companies (whether intentional or not is a different discussion), such as the high rate of open-source software releases that directly compete with others (and help portcos).

Crypto applications have also long been either targets of, or participants in, vampire attacks between protocols that share common interests, even though, in theory, if either side grows, they all grow (and their complements with them). For example, AVSs grow if Eigenlayer grows, rollups on Celestia grow if Celestia grows, and Ethereum grows if Ethereum rollups grow: infinite games, as the Eigenlayer community likes to put it. There are some nuances here, though. For example, the more AVSs that rely on the same compounded economic security, the higher the slashing risk (and the chance of a social-layer fork); and the more competitors offering the same service, the less revenue goes to each. It also depends on the layer you're deriving from. In a settlement layer's case (where execution and liquidity also exist on the base layer), rollups could be seen as parasitic. For a DA layer, serving rollups is its sole purpose, and the more, the merrier.
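The two nuances above, compounding slashing risk and revenue split between competitors, can be put in rough numbers. This is a deliberately simplified sketch: it assumes every AVS independently triggers slashing with the same hypothetical probability and that identical competitors split revenue evenly, neither of which holds exactly in practice.

```python
def prob_any_slashing(n_avs: int, p_per_avs: float) -> float:
    """Chance that at least one of n AVSs sharing the same stake triggers a slashing event.

    Assumes each AVS independently triggers slashing with probability p_per_avs.
    """
    return 1.0 - (1.0 - p_per_avs) ** n_avs


def revenue_per_competitor(total_market_revenue: float, n_competitors: int) -> float:
    """Assumes the market is split evenly between identical competitors."""
    return total_market_revenue / n_competitors


# Hypothetical inputs: 1% slashing probability per AVS, $1M of total market revenue.
for n in (1, 5, 20):
    print(n, round(prob_any_slashing(n, 0.01), 4), revenue_per_competitor(1_000_000.0, n))
```

Even with a small per-AVS probability, the chance that shared stake is hit somewhere rises quickly as more services stack on top of it, while each competitor's slice of revenue shrinks.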

As we mentioned earlier, there are ways for applications to gain customisability and control, but often at the expense of security. The reason for building these applications as infrastructure is that it allows us to retain those powers while deriving security from elsewhere. There are several ways this can be done; let's cover some of them:

1. DA (last bastion of truth)

2. Settlement (censorship resistance of dispute resolution)

3. Consensus (shared security model)

We argued during our "Building Applications with Modular Infrastructure" presentations that "The Third Time is Not the Charm". Why is this?

First of all, there's added cost for cryptoeconomic security providers (the operators running the new state machines and runtimes). Furthermore, instead of paying a DA or settlement fee, you have to pay for the economic security you receive. One option is to pay a percentage of fees (which might not even cover the operating cost, or pay a high enough APR to warrant providing economic security). The other, less ideal option is to pay a percentage of token incentives, but then you're adding consistent sell pressure to your native token. Beyond the orchestration burden, this is also one of the downsides of running a full-fledged app chain as a smaller application.
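A back-of-the-envelope sketch of that security budget, assuming the cost of rented security is simply the yield promised on the secured value plus operator running costs, and that whatever fees don't cover has to be paid in native-token emissions. All figures are hypothetical.

```python
def security_budget(secured_value: float, target_apr: float, operator_cost: float) -> float:
    """Annual cost of renting economic security: yield to (re)stakers plus operator opex."""
    return secured_value * target_apr + operator_cost


def emissions_needed(secured_value: float, target_apr: float, operator_cost: float,
                     annual_fees: float, fee_share: float) -> float:
    """Part of the budget that fees don't cover, paid in native tokens (i.e. sell pressure)."""
    covered = annual_fees * fee_share
    return max(0.0, security_budget(secured_value, target_apr, operator_cost) - covered)


# Hypothetical: $100M secured at 5% APR, $1M/yr operator costs, $10M/yr fees, 20% shared.
print(emissions_needed(100_000_000, 0.05, 1_000_000, 10_000_000, 0.20))
```

In this made-up case, fees cover $2M of a $6M budget, leaving roughly $4M a year to be paid in token incentives, which is exactly the sell pressure described above.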

Launching app chains is generally reserved for larger applications that want no dependencies, are okay with the orchestration overhead and can pay a large set of infrastructure providers for their services. In both the shared security and app-chain models, the incentives get worse for applications with LPs, which have to pay a set yield to attract liquidity providers and offset Loss-Versus-Rebalancing (LVR) to some extent (although several teams, such as Sorella Labs, are doing very interesting LVR research and implementation work). Take Osmosis, for example: it pays emissions to stakers and infrastructure providers while also paying out rewards to LPs (double dipping on emissions), amounting to 10.21% inflation and around $10M to LPs a year.

In the shared security case, there's also the possibility that you, as an operator, have opted in to provide a set amount of security (which might not be available in the long run if it comes from airdrop hunters, so retention is very important in this model as well) and cannot opt out of it, even when the receiving network is now losing you money on hardware and DevOps.

Then there are the added slashing conditions (and risk), which for most shared security models are enforced via social slashing. This results from operators and restakers being opinionated about the outcomes of the state machines they secure. This is not the case when you only inherit data availability security, as data availability providers are unopinionated about the data they receive: their job is just to provide fast, deterministic proofs that this data has been made available. In other words, the validators and light nodes on a DA layer like Celestia do not run full nodes for the rollups it supports. We make the case that experimentation, customisation and specialisation are also possible while inheriting security from the last bastion of truth for blockchains, data availability, from which all state derives. You essentially just pay the (now commoditised) DA fee plus execution and state storage costs, while applications keep significant profit margins without having to pay incentives in their native token.

Shared security models do, however, allow some very interesting, original and unique setups to experiment with large amounts of security. Nevertheless, these systems end up looking like singular chains/state machines, as operators need to run opinionated runtimes for every single network they support, losing significant scalability. Some of this is offset by the fact that you can keep compounding this cryptoeconomic security, at the expense of significant risk, especially in the case of large slashing events that could have major second-order effects on the pegs of the various protocols built on top.

Many applications want the power of an app chain but cannot afford the security budget or handle the orchestration associated with it. With the extensive number of available DA, settlement and shared security protocols, modular app chains can now be launched at a fraction of the cost.

While the execution layer in these cases is quite centralised (depending on whether a decentralised shared sequencer is used), we do expect to see small sequencer sets for some liveness protection (although client diversity here would also be interesting to see). However, with a settlement layer underneath, forced-exit withdrawals of L1-native assets are possible. Smaller sets of sequencers (validators) also mean much lower hardware costs (while retaining security), which can allow for extreme levels of specialisation (e.g. MegaETH and Eclipse). Seeing this execution layer focus on performance (at both the VM and DB level) is extremely promising, since giving up performance means giving up certain use cases (and, as such, users).

As we've alluded to throughout the article, while distributed, decentralised systems that rely on consumer hardware for security and verifiability are a thing of beauty, they're not very efficient for scaling. Although performance suffers, such systems can be used to build certain efficient services (such as data availability sampling), which is the route most rollup-centric layers seem to be taking. By deriving security from these layers (specifically the last bastion of security, DA), we can optimise performance and even remove "throttles" like consensus from the layers where execution and Merklisation happen. We're seeing this with rollups that build with extreme optimisations and hardware requirements on top of extremely decentralised base layers and networks, from which they derive security. At the same time, they're able to retain control, lower costs and stay "secure" (there's a lot of nuance here, as is hopefully clear by now).
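Why can cheap consumer hardware help secure data availability? A light node only downloads a handful of random samples, and the chance that all of them miss withheld data shrinks exponentially with the number of samples. The sketch below assumes independent, uniform sampling with replacement and, as in 2D Reed-Solomon designs such as Celestia's, that an attacker must withhold roughly a quarter of the extended data to prevent reconstruction; both are simplifications of the real protocol.

```python
def miss_probability(withheld_fraction: float, samples: int) -> float:
    """Chance that a light node's random samples all land on available shares.

    Assumes samples are drawn independently and uniformly (with replacement).
    """
    return (1.0 - withheld_fraction) ** samples


# Assumption: with a 2D Reed-Solomon extension, making data unrecoverable
# requires withholding roughly a quarter of the extended shares.
WITHHELD = 0.25
for s in (4, 8, 16, 32):
    print(f"{s:>2} samples -> detection probability {1 - miss_probability(WITHHELD, s):.4f}")
```

Under these assumptions, 16 samples already give about a 99% chance of catching withholding, which is a workload small enough for low-cost hardware.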

For example, instead of running thousands of highly optimised, expensive bare-metal machines, you could run a set of sequencers (for liveness) that outsource DA/consensus to an efficient layer for a fee (rather than paying infrastructure providers through inflation). A good example of this is Eclipse.
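The trade-off can be sketched as a simple cost comparison. All figures (per-node hardware cost, validator and sequencer counts, the DA fee) are hypothetical placeholders rather than Eclipse's or any DA layer's actual numbers, and the sketch ignores RPC/archive nodes as well as the security differences between the two setups.

```python
def monolithic_cost(n_validators: int, hw_cost_per_node: float) -> float:
    """Every validator runs high-end hardware (in practice often funded via inflation)."""
    return n_validators * hw_cost_per_node


def modular_cost(n_sequencers: int, hw_cost_per_node: float, da_fee: float) -> float:
    """A small sequencer set for liveness, plus a fee paid to an external DA/consensus layer."""
    return n_sequencers * hw_cost_per_node + da_fee


# Hypothetical monthly figures, in USD.
HW = 2_000.0
print("monolithic:", monolithic_cost(1_000, HW))               # 1,000 validators
print("modular:   ", modular_cost(5, HW, da_fee=20_000.0))     # 5 sequencers + DA fee
```

The modular setup's cost is dominated by the DA fee rather than by hardware, which is why commoditised DA pricing matters so much for this design.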

We're also seeing this with applications or communities that don't want to deal with the orchestration of hardware and optimisations that rollups require (at either the SDK or sequencing level) and instead outsource them in exchange for DA and sequencing fees (while retaining some control via integrations with SUAVE-like setups). This can be done using Astria and Sovereign Labs, which can handle most of the heavy lifting while providing a very efficient setup.

The only costs beyond this are the RPC and Archive nodes that need to be in place (but even these are being commoditised).

Profit margins shrink as users and applications demand lower costs, especially in the race to support ever more users. That increases the need to lower the costs of running infrastructure, particularly if we don't want to inflate the native token indefinitely to pay providers (price appreciation helps here, but there's significant sell pressure as well).

We're also noticing a focus on providing security not just on the back end but also for front-facing developers, via ecosystems/generalised rollups that see the need for more "secure" execution environments while tapping into hotbeds of liquidity. This is especially important given the "worry" many larger funds have about participating in DeFi because of its inherent risks (reentrancy attacks etc.). A good example of this is Movement Labs.

While there are some issues around application composability (when not in a shared sequencer environment with simulations), they are not the focus of this article, and we'll cover these issues (and their solutions) later on. Specifically, we mean composability between apps within a block, or even across two blocks, which goes beyond what is possible with market makers/solvers and intents with bridging. We're talking about building apps that control their own environment while being atomically composable with other applications in the delta. For this, you'd need a global succinct state layer with composers.

If you’re building applications that take advantage of modular infrastructure and its powers, feel free to reach out to us. We’d love to talk!